3481

AUTO-DCE-MRI: A Deep-Learning Augmented Liver Imaging Framework for Fully-Automated Multiphase Assessment and Perfusion Mapping

1Center for Advanced Imaging Innovation and Research (CAI2R) and Bernard and Irene Schwartz Center for Biomedical Imaging, Department of Radiology, New York University School of Medicine, New York, NY, United States, 2Department of Radiology, University of Wisconsin School of Medicine, Madison, WI, United States

Synopsis

This work proposes and tests a novel dynamic contrast-enhanced liver MRI framework called AUTO-DCE-MRI, which allows for simultaneous multiphase assessment and automated perfusion mapping from a single continuous free-breathing data acquisition. A deep convolutional neural network is trained to automatically select the abdominal aorta and the main portal vein. For low temporal-resolution multiphase assessment, the contrast bolus information is extracted from the aorta to guide image reconstruction of desired contrast phases. For high temporal-resolution perfusion analysis, the arterial/venous input functions are generated from the automatically selected regions in the aorta and main portal vein for pharmacokinetic modeling.

INTRODUCTION:

Conventional clinical liver DCE-MRI requires breath-held acquisitions of multiple 3D image sets following contrast injection. To ensure accurate acquisition of the desired contrast phases, fluoroscopic triggering or a separate timing step is performed first, in which the contrast bolus information is obtained from a manually placed ROI in the aorta. In liver perfusion analysis, it is also important to identify the abdominal aorta and main portal vein, so that the required arterial input function (AIF) and venous input function (VIF) can be generated for pharmacokinetic modeling. These requirements have led to complex liver imaging protocols that significantly increase scan time and reduce post-processing performance. Although many novel imaging methods have been proposed recently to improve this imaging pipeline [1-5], selection of the vessels, particularly the portal vein, still requires user interaction and remains a complicated process [5]. In this work, we propose a novel framework called AUTO-DCE-MRI that allows for simultaneous free-breathing multiphase assessment and automated perfusion mapping of the liver. It combines a continuous data acquisition, motion-suppressed sparse reconstruction, and deep learning-based automatic selection of the abdominal aorta and main portal vein for perfusion analysis.

METHODS:

Imaging Framework: Figure 1 shows the overall framework of our proposed method. A stack-of-stars golden-angle radial sampling scheme is employed for continuous free-breathing data acquisition (a), and a respiratory motion signal is extracted from the centers of radial k-space [6] (b). For multiphase assessment, the abdominal aorta is first selected automatically using a pre-trained deep convolutional neural network (c). The contrast bolus information obtained from the aorta then guides reconstruction of the desired contrast phases (d) using a respiratory motion-suppressed (soft-gated) sparse reconstruction algorithm [2,7]. For perfusion analysis, a high temporal-resolution image series is reconstructed from the same raw data (e), and both the aorta and main portal vein are automatically selected using the convolutional neural network (f). The AIF and VIF are then automatically generated for pharmacokinetic modeling (g).

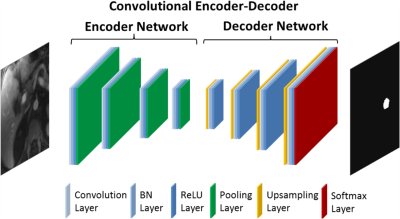
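The golden-angle ordering used in step (a) can be illustrated with a short sketch. The increment of about 111.25° is the standard golden angle for radial sampling; the spoke counts and function names below are hypothetical and are not taken from this study. Because consecutive spokes cover k-space approximately uniformly, the same continuous acquisition can be regrouped into frames of different temporal resolution.

```python
import numpy as np

# Standard golden angle for radial sampling: 180 degrees divided by the golden ratio.
GOLDEN_ANGLE_DEG = 180.0 / ((1.0 + np.sqrt(5.0)) / 2.0)  # about 111.246 degrees


def golden_angle_spokes(n_spokes):
    """Return the in-plane angle of each spoke in degrees, wrapped to [0, 180)."""
    n = np.arange(n_spokes)
    return (n * GOLDEN_ANGLE_DEG) % 180.0


def group_spokes(n_spokes, spokes_per_frame):
    """Group consecutive spoke indices into frames; an incomplete last frame is dropped."""
    n_frames = n_spokes // spokes_per_frame
    idx = np.arange(n_frames * spokes_per_frame)
    return idx.reshape(n_frames, spokes_per_frame)


# Illustrative numbers only (assumptions, not the study's protocol).
angles = golden_angle_spokes(1000)
coarse_frames = group_spokes(1000, 100)  # fewer, longer frames
fine_frames = group_spokes(1000, 20)     # more, shorter frames
```

A larger spokes-per-frame value corresponds to the low temporal-resolution series and a smaller value to the high temporal-resolution series. The bolus-guided phase selection and soft-gating of the actual framework are not modeled in this sketch.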

Deep Learning-Based Vessel Selection: Figure 2 shows the convolutional encoder-decoder (CED) network [8] used in this study. The encoder network extracts the desired image features, and the decoder network reverses the encoder, using up-sampling layers to recover high-resolution pixel-wise labels. The final layer of the decoder network is a multi-class classifier that produces voxel-wise class probabilities. A two-step training strategy is implemented to identify the aorta and main portal vein separately. Namely, the CED network is first trained to perform two-class segmentation of the aorta from the background. It is then reused, initialized from the pre-trained aorta weights, to identify a desired section of the main portal vein. The network is trained using a multi-class cross-entropy loss as the image loss and the stochastic gradient descent (SGD) algorithm with a learning rate of 0.01 for a total of 50 epochs.
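As a minimal sketch of the training objective described above, the following NumPy code computes a voxel-wise softmax cross-entropy loss and applies gradient updates with the stated learning rate of 0.01 for 50 epochs. It is not the CED network: a toy linear classifier on random features stands in for the network, the update uses the full batch rather than stochastic mini-batches, and all names and data are hypothetical.

```python
import numpy as np


def softmax(logits, axis=-1):
    """Numerically stable softmax over the class axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)


def voxelwise_cross_entropy(logits, labels):
    """Mean cross-entropy. logits: (n_voxels, n_classes); labels: (n_voxels,) ints."""
    probs = softmax(logits)
    picked = probs[np.arange(labels.size), labels]
    return -np.mean(np.log(picked + 1e-12))


def sgd_step(params, grads, lr=0.01):
    """One plain gradient-descent update."""
    return params - lr * grads


# Toy stand-in for the network: a linear two-class "vessel vs. background" classifier.
rng = np.random.default_rng(0)
features = rng.normal(size=(200, 4))          # hypothetical per-voxel features
labels = (features[:, 0] > 0).astype(int)     # hypothetical ground-truth labels
weights = np.zeros((4, 2))

losses = []
for epoch in range(50):
    logits = features @ weights
    losses.append(voxelwise_cross_entropy(logits, labels))
    # Gradient of the mean cross-entropy w.r.t. logits is (probs - one_hot) / n.
    grad_logits = softmax(logits)
    grad_logits[np.arange(labels.size), labels] -= 1.0
    grad_weights = features.T @ grad_logits / labels.size
    weights = sgd_step(weights, grad_weights, lr=0.01)
```

Under the two-step strategy, the weights obtained for the aorta would serve as the starting point for the portal vein training instead of the zero initialization used here.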

Framework Evaluation: In this IRB-approved study, a total of 44 liver DCE datasets (5 volunteers and 39 patients) previously acquired using a stack-of-stars golden-angle radial sequence were retrospectively collected to test the performance of this new framework. 39 datasets (3 volunteers and 36 patients) were randomly selected, and the abdominal aorta and main portal vein were manually segmented in each dataset for training. The remaining 5 datasets (2 volunteers and 3 patients) were used for evaluation. Each of these datasets was reconstructed twice, with ~15s temporal resolution (for multiphase assessment) and ~3s temporal resolution (for perfusion analysis using a dual-input single compartment model [9]). Perfusion analysis was performed twice in one testing case, once with automatically generated AIF/VIF and once with manually generated AIF/VIF.
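To make the role of the AIF and VIF concrete, the sketch below simulates a liver concentration curve with a commonly used form of the dual-input single-compartment model, dC/dt = k1a·AIF(t) + k1p·VIF(t) − k2·C(t). The exact parameterization used in this study is not stated here, so the equation, the omission of arrival delays, the rate constants, and the synthetic input curves are all assumptions made for illustration.

```python
import numpy as np


def simulate_liver_curve(t, aif, vif, k1a, k1p, k2):
    """Forward-Euler integration of dC/dt = k1a*AIF + k1p*VIF - k2*C (delays omitted)."""
    c = np.zeros_like(t, dtype=float)
    for i in range(1, t.size):
        dt = t[i] - t[i - 1]
        dcdt = k1a * aif[i - 1] + k1p * vif[i - 1] - k2 * c[i - 1]
        c[i] = c[i - 1] + dt * dcdt
    return c


def arterial_fraction(k1a, k1p):
    """Arterial share of total inflow, in percent."""
    return 100.0 * k1a / (k1a + k1p)


# Synthetic inputs sampled every 3 s (values are hypothetical).
t = np.arange(0.0, 180.0, 3.0)
aif = np.exp(-((t - 30.0) / 10.0) ** 2)        # early, sharp arterial peak
vif = 0.6 * np.exp(-((t - 50.0) / 20.0) ** 2)  # later, broader portal-venous peak

liver = simulate_liver_curve(t, aif, vif, k1a=0.005, k1p=0.015, k2=0.05)
art_percent = arterial_fraction(0.005, 0.015)
```

In a fitting setting, the rate constants would be estimated voxel by voxel by minimizing the mismatch between the simulated and measured curves, which is why errors in the selected AIF/VIF regions propagate directly into the perfusion maps.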

RESULTS:

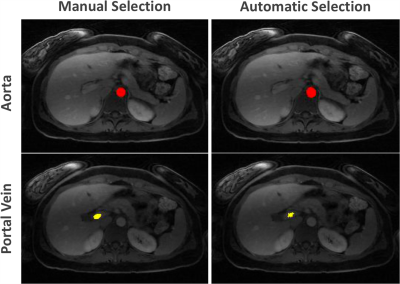

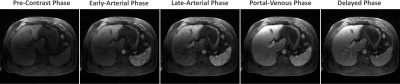

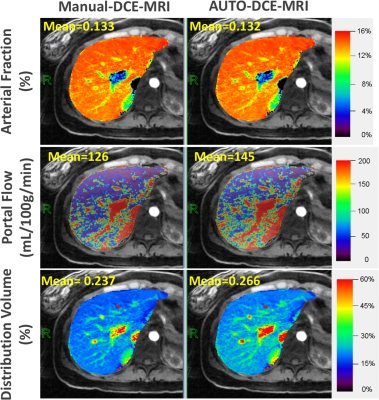

Automatic selection of the aorta and main portal vein was successful in all the testing cases. One representative example is shown in Figure 3. The RMSE of the signal intensity curves between automatic and manual selection was 0.011±0.0045 for the aorta and 0.007±0.0049 for the portal vein. Figure 4 shows images of different contrast phases reconstructed for multiphase assessment. Figure 5 compares perfusion maps generated using AIF and VIF obtained from conventional manual processing (left: Manual-DCE-MRI) and the proposed automated processing (right: AUTO-DCE-MRI).

DISCUSSION:

AUTO-DCE-MRI enables automated qualitative and quantitative assessment of the liver. One of the main reasons for improved performance is the use of a neural network to select the aorta and portal vein, so that the extracted information can guide image reconstruction or be used for perfusion analysis. Increasing the number of training datasets could further improve the reliability of vessel selection. Vessel selection will be further optimized, and the framework will be systematically evaluated in a large number of datasets to validate its robustness.

Acknowledgements

This work was supported in part by the NIH (P41 EB017183 and R01 EB018308) and was performed under the rubric of the Center for Advanced Imaging Innovation and Research (CAI2R), an NIBIB Biomedical Technology Resource Center.

References

[1] Zhang T et al. J Magn Reson Imaging. 2014 Jul;40(1):13-25. doi: 10.1002/jmri.24333. Epub 2013 Oct 11.

[2] Zhang T et al. J Magn Reson Imaging. 2015 Feb;41(2):460-73. doi: 10.1002/jmri.24551. Epub 2013 Dec 21.

[3] Feng L et al. Magn Reson Med. 2014 Sep;72(3):707-17. doi: 10.1002/mrm.24980. Epub 2013 Oct 18.

[4] Chandarana H et al. Invest Radiol. 2013 Jan;48(1):10-6. doi: 10.1097/RLI.0b013e318271869c.

[5] Chen Y et al. Invest Radiol. 2015 Jun;50(6):367-75. doi: 10.1097/RLI.0000000000000135.

[6] Feng L et al. Magn Reson Med. 2016 Feb;75(2):775-88. doi: 10.1002/mrm.25665. Epub 2015 Mar 25.

[7] Cheng JY et al. J Magn Reson Imaging. 2015 Aug;42(2):407-20. doi: 10.1002/jmri.24785. Epub 2014 Oct 20.

[8] Liu F et al. Magn Reson Med. 2017 Jul 21. doi: 10.1002/mrm.26841. [Epub ahead of print]

[9] Hagiwara M et al. Radiology. 2008 Mar;246(3):926-34. doi: 10.1148/radiol.2463070077. Epub 2008 Jan 14.

Figures