3376

Assessment of the generalization of learned image reconstruction and the potential for transfer learning

1Center for Biomedical Imaging, New York University School of Medicine, New York, NY, United States, 2Center for Advanced Imaging Innovation and Research (CAI2R), New York University School of Medicine, New York, NY, United States, 3Institute of Computer Graphics and Vision, Graz University of Technology, Graz, Austria

Synopsis

The goal of this study is to assess the influence of image contrast, SNR and image content on the generalization of machine learning in MR image reconstruction. Experiments are performed with patient data from clinical knee MR exams as well as synthetic data created from a public image database. It shows that while SNR is a critical parameter, trainings can be generalized towards a range of SNR values. It also demonstrates that transfer learning can be used successfully to fine-tune trainings from synthetic data to a particular target application using only a very small number of training cases.

Introduction

It was recently demonstrated that deep learning [1] has great potential for MR image reconstruction [2-7]. One open question regarding the success of these approaches is generalization towards deviations between training and test data. This is critical for medical imaging because, in contrast to computer vision, where millions of training examples are available in public databases [8,9], availability of training data is generally limited. The goal of this study is to assess the influence of image contrast, SNR and image content on the generalization of learned image reconstruction, and to demonstrate the potential for transfer learning [10].

Methods

40 consecutive patients referred for diagnostic knee MRI were enrolled in this IRB-approved study. Fully sampled raw data were acquired on a clinical 3T system (Siemens Skyra) using a 15-channel knee coil. Coronal 2D TSE proton-density weighted (PDw) data with and without fat suppression (FS) were acquired. A third dataset was generated by adding noise to the PDw k-space data such that its SNR corresponded to the lower SNR of the FS data.
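The noise-matching step for the third dataset can be sketched as follows. This is a minimal illustration, not the procedure used in the study: it assumes that SNR is inversely proportional to the noise standard deviation, that the existing noise level `sigma_current` is known, and that the added noise is independent, so variances add. The function names and the `snr_ratio` parameter are hypothetical.

```python
import numpy as np

def added_noise_sigma(sigma_current, snr_ratio):
    """Std of the extra noise needed to scale the SNR by snr_ratio (0 < ratio <= 1).

    Assumes SNR ~ signal / sigma and independent noise, so variances add:
    sigma_target^2 = sigma_current^2 + sigma_add^2.
    """
    if not 0 < snr_ratio <= 1:
        raise ValueError("snr_ratio must be in (0, 1]")
    sigma_target = sigma_current / snr_ratio
    return np.sqrt(sigma_target**2 - sigma_current**2)

def lower_snr(kspace, sigma_current, snr_ratio, seed=0):
    """Add complex Gaussian noise to multi-channel k-space to reach the lower SNR."""
    rng = np.random.default_rng(seed)
    sigma_add = added_noise_sigma(sigma_current, snr_ratio)
    # Independent noise on the real and imaginary parts of every sample.
    noise = (rng.normal(0.0, sigma_add, kspace.shape)
             + 1j * rng.normal(0.0, sigma_add, kspace.shape))
    return kspace + noise
```

In practice the ratio would have to be estimated from the two acquisitions, for example from noise-only regions, which this sketch does not cover.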

Synthetic training data were generated using 200 images from the Berkeley segmentation database (BSDS) [9]. Images were modulated with a synthetic sinusoidal phase, multiplied with coil sensitivity maps estimated from our knee training data, and Fourier transformed. Complex Gaussian noise at different levels, covering the range from the SNR of the PDw FS data to the SNR of the PDw data, was added to these synthetic multi-channel k-space data. Figure 1 shows an overview of all training data.
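A minimal sketch of this synthesis pipeline is given below. The exact form of the sinusoidal phase is not specified in the abstract, so the phase pattern here is an assumption, as are the function name `synthesize_kspace`, the `phase_cycles` parameter and the centered, orthonormal FFT convention.

```python
import numpy as np

def synthesize_kspace(image, sens_maps, noise_sigma, phase_cycles=1.0, seed=0):
    """Turn a real-valued image into noisy multi-channel k-space.

    image:     (ny, nx) real array, e.g. a grayscale natural image
    sens_maps: (nc, ny, nx) complex coil sensitivity maps
    """
    rng = np.random.default_rng(seed)
    ny, nx = image.shape
    yy, xx = np.meshgrid(np.arange(ny) / ny, np.arange(nx) / nx, indexing="ij")
    # Assumed smooth sinusoidal phase; the abstract does not give its form.
    phase = np.pi * np.sin(2 * np.pi * phase_cycles * yy) * np.cos(2 * np.pi * phase_cycles * xx)
    complex_image = image * np.exp(1j * phase)
    # Weight the image with each coil sensitivity map.
    coil_images = sens_maps * complex_image[None, :, :]
    # Centered 2D FFT per coil.
    kspace = np.fft.fftshift(
        np.fft.fft2(np.fft.ifftshift(coil_images, axes=(-2, -1)), norm="ortho"),
        axes=(-2, -1),
    )
    # Complex Gaussian noise, independent per channel and sample.
    noise = (rng.normal(0.0, noise_sigma, kspace.shape)
             + 1j * rng.normal(0.0, noise_sigma, kspace.shape))
    return kspace + noise
```

Drawing `noise_sigma` from a range for each training image would give the mixed-SNR synthetic training set described above.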

All raw data were undersampled retrospectively using a regular Cartesian undersampling pattern with an acceleration factor of 4 and 24 reference lines at the center of k-space, which were used for the estimation of coil sensitivity maps with ESPIRiT [11].
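The sampling pattern can be illustrated with a short sketch. The array layout `(coils, phase-encode lines, readout samples)` and the function names are assumptions made for this example.

```python
import numpy as np

def regular_cartesian_mask(n_pe, acceleration=4, n_ref=24):
    """Boolean mask over phase-encode lines: every acceleration-th line
    plus a fully sampled block of n_ref reference lines at the center."""
    mask = np.zeros(n_pe, dtype=bool)
    mask[::acceleration] = True          # regular undersampling
    start = (n_pe - n_ref) // 2
    mask[start:start + n_ref] = True     # central reference lines
    return mask

def undersample(kspace, mask):
    """Zero out unsampled phase-encode lines of (nc, n_pe, n_ro) k-space."""
    return kspace * mask[None, :, None]
```

Because the reference lines are sampled in addition to the regular pattern, the effective acceleration is somewhat lower than the nominal factor of 4.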

Our neural network architecture was designed according to [2], using 10 stages with 24 complex 11×11 convolution kernels. 150 epochs were used for training. Data were separated into 10 cases for training and 10 cases for testing. Separate trainings were performed using PDw, PDw FS, PDw with additional noise, and a mix of PDw and PDw FS (5 patients each). In the second experiment, trainings were performed with synthetic data of different noise levels. Finally, transfer learning was performed by fine-tuning a baseline training from synthetic data with a 1/10th subset of the knee data for another 150 epochs.

Results and Discussion

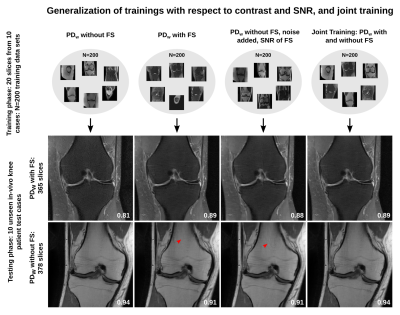

Results of the assessment of generalization with respect to contrast and SNR are shown in Figure 2. Applying a network trained from higher SNR data leads to noise in the reconstructed images, while applying a training from lower SNR leads to blurred images with residual artifacts. This can be related to the influence of the regularization parameter in compressed sensing [12], because the parameter that balances resolution, residual artifacts and noise amplification is learned from the training data in a machine learning approach. Results were similar for trainings from PDw data with additional noise and trainings from the PDw FS data. This demonstrates that while SNR is a critical parameter that has to be consistent between training and testing, image contrast is less relevant. Joint training results from both contrasts are identical to using the same sequence for training and testing, demonstrating that trainings can be designed to generalize with respect to changes in SNR and contrast, under the condition that the corresponding heterogeneity is included in the training data.
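For reference, the role of the regularization parameter can be made explicit with the generic compressed sensing formulation in its unconstrained form. The notation is an assumption for this illustration and does not appear in the abstract: $A$ denotes the undersampled multi-coil encoding operator, $y$ the measured k-space data, $\Psi$ a sparsifying transform and $\lambda$ the regularization parameter.

```latex
\hat{x} = \arg\min_{x} \; \frac{1}{2} \left\| A x - y \right\|_2^2 + \lambda \left\| \Psi x \right\|_1
```

A larger $\lambda$ suppresses more noise at the cost of resolution, while a smaller $\lambda$ preserves detail but leaves more noise. A reconstruction that learns this balance from low SNR data would therefore be expected to over-smooth high SNR data, and vice versa, which matches the behavior described above.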

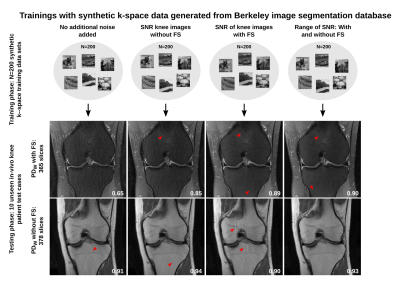

The influence of SNR can be reproduced with synthetic data (Figure 3). Residual aliasing artifacts are present in these results. Our hypothesis is that, since we use regular Cartesian sampling, aliasing artifacts are coherent and therefore depend on the particular structure of the image content.

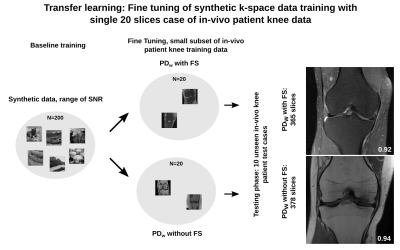

When fine-tuning a baseline synthetic data training with a small subset of knee MRI data, aliasing artifacts are reduced (Figure 4).

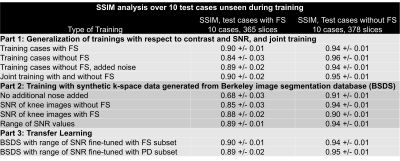

The quantitative analysis of the structural similarity index (SSIM) to the fully sampled reference over all slices of all test cases is consistent with the results from individual slices (Figure 5).
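To illustrate how such a summary can be computed, the sketch below evaluates a simplified, single-window (global) SSIM per slice and reports mean and standard deviation over all slices. This is not the implementation used in the study: standard SSIM is computed over local windows and averaged, and the constants 0.01 and 0.03 as well as the function names are conventional choices assumed for this example.

```python
import numpy as np

def global_ssim(x, y, data_range=None):
    """Simplified SSIM using global image statistics instead of local windows."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if data_range is None:
        data_range = y.max() - y.min()   # dynamic range of the reference
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx**2 + my**2 + c1) * (vx + vy + c2))

def ssim_summary(recons, refs):
    """Mean and standard deviation of SSIM over paired magnitude slices."""
    values = np.array([global_ssim(r, f) for r, f in zip(recons, refs)])
    return values.mean(), values.std()
```

Here `recons` and `refs` would be sequences of magnitude images from the accelerated reconstruction and the fully sampled reference, respectively.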

Conclusion

This study presents insights into the generalization ability of learned image reconstruction with respect to deviations in the acquisition settings between training and testing for a clinically representative set of test cases. It shows that while SNR is a critical parameter, trainings can be generalized towards a range of SNR values. It also demonstrates that while the particular image content of MR images is relevant to remove aliasing artifacts, transfer learning can be used successfully to fine-tune trainings to a particular target application using only a very small number of training cases. This could be of particular importance for applications like dynamic or contrast enhanced imaging, where the acquisition of large numbers of training data is particularly challenging.

Acknowledgements

We acknowledge grant support from NIH P41 EB017183 and NIH R01 EB000447, FWF START project BIVISION Y729, the ERC Horizon 2020 program, ERC starting grant “HOMOVIS” 640156, as well as hardware support from Nvidia corporation.

References

[1] Y. LeCun, Y. Bengio, and G. Hinton, “Deep Learning,” Nature, vol. 521, no. 7553, pp. 436–444, 2015.

[2] K. Hammernik, T. Klatzer, E. Kobler, M. P. Recht, D. K. Sodickson, T. Pock and F. Knoll. “Learning a Variational Network for Reconstruction of Accelerated MRI Data”. Magnetic Resonance in Medicine (in Press, 2017).

[3] S. Wang, Z. Su, L. Ying, X. Peng, S. Zhu, F. Liang, D. Feng, and D. Liang, “Accelerating Magnetic Resonance Imaging Via Deep Learning,” in IEEE International Symposium on Biomedical Imaging (ISBI), 2016, pp. 514–517.

[4] K. Kwon, D. Kim, H. Seo, J. Cho, B. Kim, and H. W. Park, “Learning-based Reconstruction using Artificial Neural Network for Higher Acceleration,” in Proceedings of the International Society of Magnetic Resonance in Medicine (ISMRM), 2016, p. 1081.

[5] E. Gong, G. Zaharchuk, and J. Pauly, “Improving the PI+CS Reconstruction for Highly Undersampled Multi-Contrast MRI Using Local Deep Network,” in Proceedings of the International Society of Magnetic Resonance in Medicine (ISMRM), 2017, p. 5663.

[6] J. Schlemper, J. Caballero, J. Hajnal, A. Price, and D. Rueckert, “A Deep Cascade of Convolutional Neural Networks for MR Image Reconstruction,” in Proceedings of the International Society of Magnetic Resonance in Medicine (ISMRM), 2017, p. 643.

[7] B. Zhu, J. Liu, B. Rosen, and M. Rosen, “Neural Network MR Image Reconstruction with AUTOMAP: Automated Transform by Manifold Approximation,” in Proceedings of the International Society of Magnetic Resonance in Medicine (ISMRM), 2017, p. 640.

[8] J. Deng, W. Dong, R. Socher, L. J. Li, K. Li and L. Fei-Fei. “ImageNet: A Large-Scale Hierarchical Image Database”. CVPR 2009.

[9] D. Martin, C. Fowlkes, D. Tal and J. Malik. “A Database of Human Segmented Natural Images and its Application to Evaluating Segmentation Algorithms and Measuring Ecological Statistics”. Proc. 8th Int'l Conf. Computer Vision, 416-423, (2001).

[10] L. Y. Pratt. "Discriminability-based transfer between neural networks" NIPS Conference: Advances in Neural Information Processing Systems 5. 204-211 (1993).

[11] M. Uecker, P. Lai, M. J. Murphy, P. Virtue, M. Elad, J. M. Pauly, S. S. Vasanawala and M. Lustig. “ESPIRiT - An Eigenvalue Approach to Autocalibrating Parallel MRI: Where SENSE meets GRAPPA”. Magnetic Resonance in Medicine: 71:990-1001 (2014).

[12] M. Lustig, D. Donoho and J. M. Pauly. “Sparse MRI: The application of compressed sensing for rapid MR imaging”. Magnetic Resonance in Medicine: 58:1182-1195 (2007).

Figures

Figure 1: A selection of fully sampled reference reconstructions from training data and images used to generate synthetic training data:

a) Data from a coronal proton density weighted (PDw) 2D TSE sequence for knee imaging with and without fat-suppression. These two datasets show substantial differences in terms of image contrast and SNR.

b) Processed and synthetic data: Left: Knee data from the PDw sequence without fat-suppression with additional noise added to the complex multi-channel k-space data such that the SNR is comparable to the lower SNR fat-suppressed data. Right: Completely synthetic k-space data, generated from images from the Berkeley segmentation database.

Figure 2: Assessment of generalization with respect to contrast and SNR (showing SSIM to fully sampled reference):

When applying a training from high SNR PDw data to lower SNR PDw FS data, a substantial level of noise is present in the reconstructions.

Applying the low SNR PDw FS training to higher SNR PDw data leads to blurred images with residual aliasing artifacts (red arrowheads).

Results of trainings from PDw data with additional noise are comparable to results of trainings from PDw FS data.

Results from joint training from both sequences are identical to using the same sequence for training and testing.

Figure 3: Trainings from synthetic k-space data generated from the BSDS database:

Reconstructions show a larger degree of residual aliasing artifacts (red arrowheads) in comparison to trainings with in-vivo knee data. Experiments with deviating SNR levels between training and test data again lead to either noise amplification or blurring and residual artifacts. This demonstrates that the influence of SNR can be reproduced independently of the image content.

Again, training with a range of SNR values leads to results that are comparable to training with data that are consistent with the test data in terms of SNR.

Figure 4: Transfer learning experiments: The hypothesis is that low level image features like edges are independent of the actual image content and can be learned from arbitrary data sets.

For both PDw and PDw FS data, results from fine-tuned networks outperform baseline trainings using only synthetic data in terms of removal of aliasing artifacts, which is also reflected in higher SSIM values. This indicates the possibility of fine-tuning networks that were pre-trained from generic data with only a very small number of training cases for a particular target application where obtaining large numbers of training data is more challenging.

Figure 5: Mean and standard deviation of SSIM to the fully sampled reference over all cases in the test set.

Part 1: Highest SSIM values are achieved when data from the same sequence is used for training and testing. SSIM drops when the SNR level deviates between training and testing. Joint training from multiple contrasts shows comparable performance to using consistent data between training and testing.

Part 2: Trainings with synthetic data show a drop in SSIM compared to trainings with in-vivo data and the same SNR dependency.

Part 3: Fine-tuned SSIM values are close to trainings with consistent in-vivo data.