3373

Parallel Imaging and Convolutional Neural Network Combined Fast Image Reconstruction for Low Latency Accelerated 2D Real-Time Imaging

1Radiological Sciences, University of California, Los Angeles, Los Angeles, CA, United States, 2Radiation Oncology, University of California, Los Angeles, Los Angeles, CA, United States

Synopsis

Real-time imaging is a powerful technique for examining multiple physiological motions at the same time. Previous work has described methods that accelerate real-time acquisition down to 20 ms with the help of compressed sensing. However, reconstruction time remains relatively long, which prevents wide clinical use. Recent developments in deep learning have shown great potential for reconstructing high-quality MR images with low latency. In this work, we propose a framework that combines parallel imaging, a distinctive feature of MR imaging, with a convolutional neural network to reconstruct 2D real-time images with low latency and high quality.

Introduction

The desire to noninvasively visualize physiological processes at high temporal resolution has been a driving force in the development of MRI. Real-time imaging techniques that achieve a temporal resolution of less than 50 ms have been proposed (1,2) with the help of compressed sensing (CS) based methods. However, CS-based methods usually suffer from high reconstruction latency: even with parallelized and distributed computing, each frame takes nearly 1 s (1,3). This could greatly limit their clinical use, especially for applications such as MR-guided interventional procedures. Recently, there have been attempts to apply convolutional neural network (CNN) based methods (4) to the reconstruction of highly under-sampled MR images, achieving high image quality and, more importantly, low-latency reconstruction in nearly 20 ms per frame (5). However, most CNN and deep learning based methods (4-7) perform only single-coil reconstruction and do not use the acquired multi-coil data. In this work, we propose a combined parallel imaging (PI) and CNN reconstruction that takes the best of both worlds. The proposed method is demonstrated at both low and high field (0.35 and 1.5T) and in different applications (abdominal and cardiac imaging).

Method

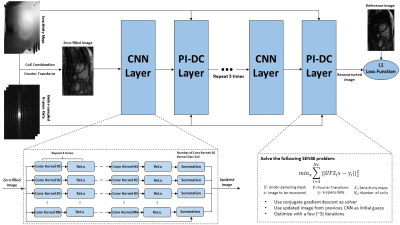

The proposed method is depicted in Figure 1. It cascades several sub-CNNs in series, with a PI data consistency (DC) layer interleaved between consecutive sub-CNNs. Each sub-CNN takes a single coil-combined image as input, passes it through four convolutional layers with rectified linear units (ReLU) and one summation layer, and outputs an updated image. The updated image is then fed into the PI-DC layer, where it is used as the initialization for a SENSE optimization problem, which is essentially a PI reconstruction, run for a few (~3) iterations. The result of the SENSE reconstruction is passed to the next sub-CNN, and this process is repeated five times. Inspired by recent work (8,9), we used the L1 norm instead of the conventional L2 norm as the loss function of the proposed network. Detailed parameters of the proposed method are listed in Figure 1.
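The alternation between sub-CNNs and the PI-DC layer can be illustrated with a small, dependency-free sketch. It is not the authors' implementation. It assumes a 1D toy signal, a naive unitary DFT in place of an FFT, plain gradient descent as the SENSE solver (the abstract does not name the solver), and coil sensitivities scaled so that the sum of their squared magnitudes is at most 1, which keeps a step size of 1 stable. The names `pi_dc_layer`, `cascade`, and `sub_cnns` are hypothetical, and the sub-CNNs are stand-in callables.

```python
import cmath


def dft(x, inverse=False):
    """Naive unitary 1D DFT, O(n^2); a real pipeline would use an FFT library."""
    n = len(x)
    sign = 1 if inverse else -1
    scale = n ** -0.5
    return [
        scale * sum(x[m] * cmath.exp(sign * 2j * cmath.pi * k * m / n) for m in range(n))
        for k in range(n)
    ]


def sense_forward(image, sens, mask):
    """Image -> under-sampled multi-coil k-space: y_c = M F (S_c x)."""
    kspace = []
    for s in sens:
        k = dft([sc * px for sc, px in zip(s, image)])
        kspace.append([kv if keep else 0j for kv, keep in zip(k, mask)])
    return kspace


def sense_adjoint(kspace, sens, mask):
    """Adjoint operator: x = sum_c conj(S_c) F^H M y_c (coil-combined image)."""
    n = len(mask)
    image = [0j] * n
    for s, y in zip(sens, kspace):
        coil_img = dft([yv if keep else 0j for yv, keep in zip(y, mask)], inverse=True)
        for i in range(n):
            image[i] += s[i].conjugate() * coil_img[i]
    return image


def pi_dc_layer(init_image, kspace, sens, mask, n_iter=3, step=1.0):
    """A few gradient steps on 0.5 * ||A x - y||^2, starting from the CNN output.

    Assumption: gradient descent stands in for whatever SENSE solver is used.
    """
    x = list(init_image)
    for _ in range(n_iter):
        pred = sense_forward(x, sens, mask)
        resid = [[p - m for p, m in zip(pc, yc)] for pc, yc in zip(pred, kspace)]
        grad = sense_adjoint(resid, sens, mask)
        x = [xi - step * gi for xi, gi in zip(x, grad)]
    return x


def cascade(zero_filled, kspace, sens, mask, sub_cnns):
    """Alternate sub-CNN refinement and PI data consistency, once per sub-CNN."""
    x = zero_filled
    for cnn in sub_cnns:
        x = cnn(x)  # placeholder for a trained sub-CNN
        x = pi_dc_layer(x, kspace, sens, mask)
    return x
```

With five entries in `sub_cnns` and `n_iter=3`, the loop mirrors the five repetitions and roughly three SENSE iterations described above. Passing the coil-combined zero-filled image from `sense_adjoint` as the starting point is one plausible choice of input.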

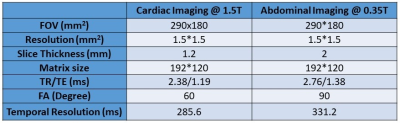

We acquired fully sampled real-time (4 fps) free-breathing abdominal images in sagittal orientation on a 0.35T scanner (ViewRay, Cleveland) and cardiac images in short-axis orientation on a 1.5T scanner (Siemens, Germany) using a bSSFP sequence. For abdominal imaging, 2 healthy volunteers and 3 patients were scanned. For cardiac imaging, 5 healthy volunteers were scanned. 200 images were acquired for each volunteer/patient. Sequence parameters are listed in Table 1. For each application, of the 1000 images, the 800 images from the first 4 volunteers/patients were used for training with retrospective under-sampling, and the remaining 200 images were used for testing. Training was done separately for each application on a PC workstation with an Intel i7 3.9GHz CPU, a GeForce GTX 780 GPU, and 64GB of memory, and took 1 day to finish. For comparison, the 200 testing images were also reconstructed with the single-coil deep learning algorithm (5) and the L1-ESPIRiT algorithm (10).
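The subject-wise split and the retrospective under-sampling can be sketched as follows. This is only an illustration under stated assumptions: the abstract does not describe the sampling pattern beyond "2D Cartesian", so the mask here keeps a fully sampled block of central phase-encode lines plus randomly chosen outer lines. The matrix size of 128 lines, the 8 central lines, and the function names are all hypothetical.

```python
import random


def split_by_subject(n_subjects=5, frames_per_subject=200, n_train_subjects=4):
    """Split (subject, frame) pairs so that no subject appears in both sets."""
    train, test = [], []
    for subject in range(n_subjects):
        target = train if subject < n_train_subjects else test
        target.extend((subject, frame) for frame in range(frames_per_subject))
    return train, test


def cartesian_mask(n_lines, acceleration, center_lines=8, seed=0):
    """Return a 0/1 list marking which phase-encode lines are kept.

    Assumption: fully sampled centre block plus uniformly random outer lines.
    """
    rng = random.Random(seed)
    n_keep = max(center_lines, round(n_lines / acceleration))
    start = (n_lines - center_lines) // 2
    center = set(range(start, start + center_lines))
    outer = [i for i in range(n_lines) if i not in center]
    chosen = center | set(rng.sample(outer, n_keep - center_lines))
    return [1 if i in chosen else 0 for i in range(n_lines)]


def undersample(kspace_lines, mask):
    """Zero out the phase-encode lines that the mask drops."""
    return [
        list(line) if keep else [0j] * len(line)
        for line, keep in zip(kspace_lines, mask)
    ]


train, test = split_by_subject()         # 4 subjects for training, 1 for testing
mask_5p5x = cartesian_mask(128, 5.5)     # hypothetical 128-line matrix at 5.5X
mask_4x = cartesian_mask(128, 4)         # same matrix at 4X
```

Splitting by subject rather than by frame keeps frames of the test subject out of the training set, which matches the description of training on the first 4 volunteers/patients and testing on the last.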

Results

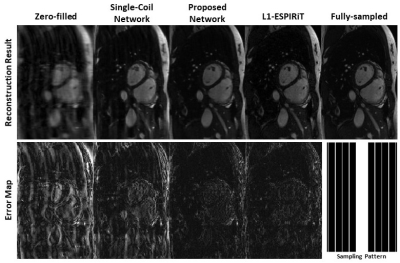

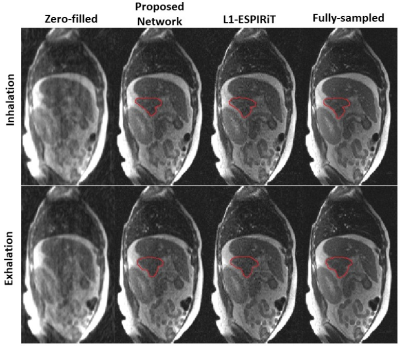

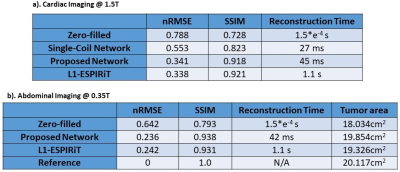

Figure 2 compares reconstructions from zero-filling, the single-coil network, the proposed PI+CNN method, and L1-ESPIRiT against the reference, at an acceleration factor of 5.5X with a 2D Cartesian sampling pattern. As the error maps show, the single-coil CNN reconstruction still contains residual artifacts and blurring, while the proposed PI+CNN method and L1-ESPIRiT perform similarly, both yielding clean and sharp images. Figure 3 compares the proposed method with zero-filled reconstruction and L1-ESPIRiT in an SNR-limited low-field setting, using a similar 2D Cartesian under-sampling pattern with a 4X acceleration factor. Both the proposed method and L1-ESPIRiT recover high-quality images with a well-delineated tumor region (contoured in red). Quantitatively, we measured the normalized root mean square error (nRMSE) and the structural similarity index (SSIM) between each reference image and the corresponding image reconstructed by each strategy, and averaged them across all 200 testing images. The average reconstruction time of each method was also recorded. For the patient shown in Figure 3, we additionally measured the average tumor area on the testing images for each strategy. As shown in Table 2, in both applications the proposed method performs similarly to L1-ESPIRiT but with a nearly 25X reduction in reconstruction time.

Discussion and Conclusion

We demonstrated a new approach that combines parallel imaging with a neural network to allow low-latency, high-quality reconstruction of real-time images. It leverages the redundancy in multi-coil k-space data within a neural network. This distinguishes the proposed method from conventional purely image-based de-aliasing/super-resolution approaches and makes it directly applicable to clinical multi-coil data. More importantly, the proposed method reconstructs images in less than 50 ms/frame, which makes it well suited to real-time applications.

Acknowledgements

No acknowledgement found.

References

1. Uecker M, Zhang S, Voit D, Karaus A, Merboldt KD, Frahm J. Real-time MRI at a resolution of 20 ms. NMR Biomed 2010;23:986-94. 10.1002/nbm.1585.

2. Xue H, Kellman P, LaRocca G, Arai A, Hansen M. High spatial and temporal resolution retrospective cine cardiovascular magnetic resonance from shortened free breathing real-time acquisitions. J Cardiovasc Magn Reson 2013;15:102.

3. Xue H, Kellman P, Larocca G, Arai AE, Hansen MS. Distributed MRI reconstruction using Gadgetron-based cloud computing. Magn Reson Med. 2015;73:1015–25.

4. Wang S, Su Z, Ying L, et al. Accelerating magnetic resonance imaging via deep learning. 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI) 2016:514-517.

5. Schlemper J, Caballero J, Hajnal JV, Price A, Rueckert D. A Deep Cascade of Convolutional Neural Networks for MR Image Reconstruction. Information Processing in Medical Imaging (IPMI) 2017.

6. Jun Y, Eo T, Kim T, Jang J, Hwang D. Deep Convolutional Neural Network for Acceleration of Magnetic Resonance Angiography (MRA). ISMRM 2017.

7. Yang Y, Sun J, Li H, Xu Z. Deep ADMM-Net for compressive sensing MRI. Advances in Neural Information Processing Systems, Vancouver, Canada, Dec. 2016.

8. Hammernik K, Knoll F, Sodickson D, Pock T. L2 or not L2: Impact of Loss Function Design for Deep Learning MRI Reconstruction. ISMRM 2017.

9. Zhao H, Gallo O, Frosio I, Kautz J. Loss Functions for Neural Networks for Image Processing. arXiv:1511.08861.

10. Uecker M, Lai P, Murphy MJ, Virtue P, Elad M, Pauly JM, Vasanawala SS, Lustig M. ESPIRiT-an eigenvalue approach to autocalibrating parallel MRI: where SENSE meets GRAPPA. Magn Reson Med 2014;71:990–1001.

Figures