3023

Multi-contrast MRI Enhance Ultra-low-dose PET Reconstruction

1Engineering Physics, Tsinghua University, Beijing, China, 2Electrical Engineering, Stanford University, Stanford, CA, United States, 3Radiology, Stanford University, Stanford, CA, United States, 4GE Healthcare, Menlo Park, CA, United States

Synopsis

Simultaneous PET/MRI is a powerful multimodality imaging technique for both anatomical and functional imaging. Here we propose a novel method for high-quality PET image reconstruction from ultra-low-dose PET scanning (more than 99% dose reduction compared to current practice) using multi-contrast MRI. A multi-scale fully convolutional network was developed to perform the reconstruction. The proposed method is compared with other methods on a clinical glioblastoma (GBM) dataset. Results show that our method achieves superior image quality compared with state-of-the-art methods for low-dose PET reconstruction. In addition, quantitative and qualitative evaluations indicate that multi-contrast MRI significantly improves reconstruction quality and better preserves structural details.

Purpose

Positron emission tomography (PET) is widely used in clinical applications, visualizing both anatomical and functional information for better pathology identification. However, the use of radioactive tracers in PET imaging raises concerns about radiation exposure. To minimize this risk, attempts have been made to reduce the injected tracer dose. However, lowering the dose leads to PET images with low signal-to-noise ratio (SNR), which hinders clinical diagnosis. Previous methods for low-dose PET denoising, including iterative regularization1 and dictionary learning2, are typically complicated and slow.

Compared with PET, MRI provides more structural information and local detail. Recent developments in PET/MRI systems make it possible to use simultaneously acquired MRI to enhance PET image quality in reconstruction2,3. In addition, aided by advances in GPUs, deep learning networks show promising results and speed in low-dose PET reconstruction3.

However, these algorithms remain limited and have only been applied at dose reduction factors (DRF) of no more than 4. Here we propose a multi-scale deep convolutional network that incorporates multi-contrast MRI information and enables ultra-low-dose PET reconstruction with more than 99% dose reduction.

Methods

PET/MRI data in our experiment were acquired on a PET/MRI system (SIGNA, GE Healthcare, Waukesha, WI, USA) with a standard dose (370 MBq) of 18F-FDG. To generate the ultra-low-dose (DRF=200) PET images, the PET list-mode data were randomly undersampled, keeping only 0.5% of the events, to generate a new sinogram4. The low-dose data were then reconstructed into intermediate low-quality images using the OSEM method (28 subsets, 2 iterations). Full-dose-like reconstructions were then generated by passing the low-quality images and the corresponding multi-contrast MR images through a deep network.
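The dose arithmetic and the random event thinning described above can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the function names, the independent per-event selection, and the use of integers as stand-ins for list-mode events are all assumptions made for the example.

```python
import random


def dose_reduction_percent(drf):
    """Percent dose reduction implied by a dose reduction factor (DRF)."""
    return 100.0 * (1.0 - 1.0 / drf)


def undersample_list_mode(events, drf, seed=0):
    """Keep each event independently with probability 1/drf.

    Assumption: independent per-event selection (Bernoulli thinning); the
    source only states that events were randomly undersampled.
    """
    rng = random.Random(seed)
    keep_probability = 1.0 / drf
    return [event for event in events if rng.random() < keep_probability]


# Hypothetical stand-in for list-mode data: one integer ID per detected event.
full_dose_events = list(range(200_000))
low_dose_events = undersample_list_mode(full_dose_events, drf=200)

kept_fraction = len(low_dose_events) / len(full_dose_events)
print(f"DRF=200 -> {dose_reduction_percent(200):.1f}% dose reduction")
print(f"kept fraction: {kept_fraction:.4f}")
```

Independent thinning of Poisson-distributed counts yields Poisson-distributed counts at the reduced rate, which is why random undersampling of this kind is a reasonable stand-in for a lower injected dose.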

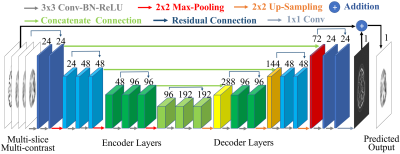

A deep network is trained to generate a high-quality PET image from a low-dose PET image and the corresponding multi-contrast MR images (T2 and FLAIR) as inputs. The proposed network structure is shown in Fig. 1. Each of the N stages is a residual block consisting of M convolution layers with K×K kernels. In the following experiments, we chose the network structure with N=7, M=2, K=3. Symmetric concatenation connections are used to preserve high-resolution information during the encoding-decoding process. Additionally, a residual learning strategy is adopted. For evaluation, we tested on a clinical glioblastoma (GBM) PET/MR dataset consisting of 30 PET/MRI scans with leave-one-out cross-validation. The reconstruction results of the proposed method were compared with NLM5, BM3D6 and the auto-context network3 (AC-Net), with the full-dose OSEM reconstruction as the ground truth.
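The leave-one-out evaluation over the 30 scans can be sketched as below. The scan identifiers and the helper name `leave_one_out_splits` are hypothetical; the sketch only shows how each scan is held out once while the remaining 29 are used for training, and it assumes one fold per scan.

```python
def leave_one_out_splits(scan_ids):
    """Yield (train_ids, test_id) pairs, holding out each scan exactly once."""
    for index, test_id in enumerate(scan_ids):
        train_ids = scan_ids[:index] + scan_ids[index + 1:]
        yield train_ids, test_id


# Hypothetical scan identifiers for the 30 PET/MRI scans.
scan_ids = [f"scan_{i:02d}" for i in range(1, 31)]
splits = list(leave_one_out_splits(scan_ids))

for train_ids, test_id in splits[:2]:
    print(f"test={test_id}, train on {len(train_ids)} scans")
```

Under this scheme a separate model is trained for every fold, so reported metrics are always computed on a scan the model has not seen.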

Results

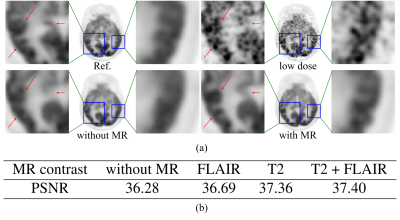

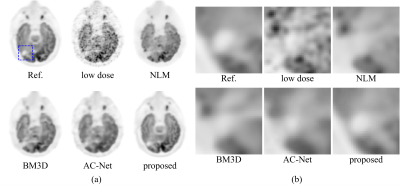

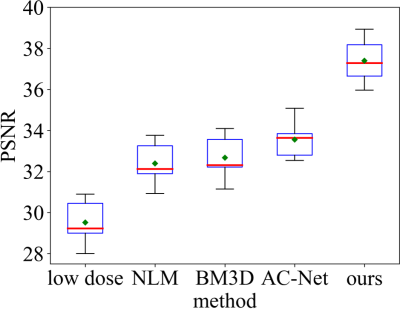

Sample slices of reconstructed images are shown in Fig. 2 (a) and the corresponding zoomed regions are displayed in Fig. 2 (b). Fig. 3 compares the average PSNR of images reconstructed by the different methods. To evaluate the contribution of MRI to PET reconstruction, models with and without MRI were trained; the results are shown in Fig. 4 (a). The average scores of models trained with different MR contrasts are listed in Fig. 4 (b).
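For reference, PSNR against the full-dose image can be computed as in the sketch below. The abstract does not state how the peak value or any normalization was chosen, so taking the maximum of the reference image as the peak is an assumption, as are the function names and the toy pixel values.

```python
import math


def psnr(reference, estimate, peak=None):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    Images are flat lists of floats here. Assumption: when `peak` is not
    given, the maximum of the reference image is used as the peak value.
    """
    if len(reference) != len(estimate):
        raise ValueError("images must have the same number of pixels")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    if peak is None:
        peak = max(reference)
    if peak <= 0:
        raise ValueError("peak value must be positive")
    return 10.0 * math.log10(peak ** 2 / mse)


def mean_psnr(pairs):
    """Average PSNR over (reference, estimate) image pairs."""
    scores = [psnr(reference, estimate) for reference, estimate in pairs]
    return sum(scores) / len(scores)


# Toy 2x2 "images", flattened; values are illustrative only.
full_dose = [0.0, 1.0, 1.0, 0.0]
reconstructed = [0.1, 0.9, 1.0, 0.0]
print(f"PSNR: {psnr(full_dose, reconstructed):.2f} dB")
```

Higher PSNR means a smaller mean squared error relative to the peak intensity; averaging per-image scores, as `mean_psnr` does, is one plausible way to obtain a per-method average.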

Discussion

Quantitative results in Fig. 3 show that our proposed method achieves superior reconstruction results on the test data compared with the other methods we tested. The visual results in Fig. 2 (a) also suggest that the proposed method has the highest image quality. In comparison, NLM produces patchy artifacts, while both BM3D and AC-Net cannot fully remove the noise in the low-dose PET image and tend to over-blur the image without recovering important details. As for the contribution of multi-contrast MRI, Fig. 4 (a) shows that the complementary information from MR images further enhances local details in PET images. In our experiments, we found that T2 and FLAIR contributed the most, and they were selected as the network inputs. In addition, our proposed method shows superior diagnostic quality and improved details in the region of the GBM, as shown in Fig. 2 (b).

Conclusion

By using deep learning and multi-contrast MRI information from simultaneous PET/MRI, we showed that it is feasible to achieve high-quality reconstruction of 200x low-dose PET, which can significantly lower the radiation exposure of PET scans in clinical practice. Evaluation on clinical GBM datasets shows that our proposed method not only achieves higher PSNR than state-of-the-art methods but also reconstructs high-quality images with more than 99% dose reduction in PET. In addition, experiments show that simultaneously acquired multi-contrast MRI significantly improves the quality of the reconstructed images, because MRI provides high-quality structural information for reconstruction. Finally, our proposed method shows that deep learning can transfer information from one imaging modality to another without a predefined prior.

Acknowledgements

No acknowledgement found.

References

1. Wang, C., Hu, Z., Shi, P., & Liu, H. (2014, August). Low dose PET reconstruction with total variation regularization. In Engineering in Medicine and Biology Society (EMBC), 2014 36th Annual International Conference of the IEEE (pp. 1917-1920). IEEE.

2. Wang, Y., Ma, G., An, L., Shi, F., Zhang, P., Lalush, D. S., ... & Shen, D. (2017). Semisupervised Tripled Dictionary Learning for Standard-Dose PET Image Prediction Using Low-Dose PET and Multimodal MRI. IEEE Transactions on Biomedical Engineering, 64(3), 569-579.

3. Xiang, L., Qiao, Y., Nie, D., An, L., Lin, W., Wang, Q., & Shen, D. (2017). Deep auto-context convolutional neural networks for standard-dose PET image estimation from low-dose PET/MRI. Neurocomputing, 267, 406-416.

4. Gatidis, S., Seith, F., Schäfer, J. F., la Fougère, C., Nikolaou, K., & Schwenzer, N. F. (2016). Towards tracer dose reduction in PET studies: Simulation of dose reduction by retrospective randomized undersampling of list-mode data. Hellenic Journal of Nuclear Medicine, 19(1), 15-18.

5. Buades, A., Coll, B., & Morel, J. M. (2005, June). A non-local algorithm for image denoising. In Computer Vision and Pattern Recognition, 2005. CVPR 2005. IEEE Computer Society Conference on (Vol. 2, pp. 60-65). IEEE.

6. Dabov, K., Foi, A., Katkovnik, V., & Egiazarian, K. (2006, January). Image denoising with block-matching and 3D filtering. In Proceedings of SPIE (Vol. 6064, No. 30, pp. 606414-606414).

Figures

Figure 3. The average PSNR of different methods.