2802

Machine Learning Using the BART Toolbox - Implementation of a Deep Convolutional Neural Network for Denoising

1 University Medical Center Göttingen, Göttingen, Germany; 2 Partner-site Göttingen, DZHK (German Centre for Cardiovascular Research), Göttingen, Germany

Synopsis

Deep convolutional neural networks (DCNNs) tend to outperform conventional image processing algorithms in recent benchmarks for classification, segmentation, denoising, and many other image processing tasks. Here, we show how DCNNs can be implemented using building blocks already provided by the BART image reconstruction toolbox. As a proof of principle, we discuss the implementation of an image denoising tool based on a pre-trained DCNN.

Introduction

Recently developed methods based on deep learning tend to outperform other state-of-the-art algorithms in classification, denoising, segmentation, and other image processing tasks. Neural networks therefore attract considerable interest as building blocks of advanced image processing pipelines. The BART toolbox [1,2] is a versatile framework for image processing and reconstruction. In this work, we explored how deep convolutional neural networks can be implemented using the building blocks already provided by BART. As an example, we discuss the implementation of a command-line tool for image denoising based on residual learning with a deep convolutional neural network [3].

Theory

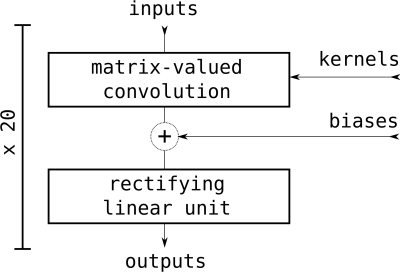

A deep convolutional neural network consists of many alternating layers of matrix-valued convolutions and non-linear activation functions such as rectified linear units (ReLUs). BART directly supports matrix-valued convolutions in arbitrary dimensions, so a complete convolutional layer can be implemented with a single call to the 'conv' function. A bias is added to the output of the convolutional layer before it is passed to the activation function; this addition can be implemented with the 'md_zadd' function. As the activation function we use the rectified linear unit, defined as f(x) = max(x, 0). In BART it can be implemented with the 'md_smax' function, passing zero as the last argument. The three functions can simply be called in sequence. Alternatively, they can be wrapped in BART's operator interface, which makes it easier to build more complex networks by chaining the corresponding operators together.

Methods
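The convolutional layer described in the Theory section can be illustrated with a small pure-Python sketch. It consists of a matrix-valued 3x3 convolution, a per-channel bias, and a ReLU, composed with a chaining helper. This is not BART code and does not use BART's C API. The nested-list layout, the zero-padded cross-correlation convention, and the names `conv_layer`, `add_bias`, `relu`, and `chain` are hypothetical. They only mirror the roles that 'conv', 'md_zadd', and 'md_smax' play in the text.

```python
# Hypothetical pure-Python sketch of one DCNN layer (not BART's API).
# Layout assumption: an image is a list of channels, each a list of rows.
from typing import Callable, List

Image = List[List[List[float]]]          # [channel][row][col]
Kernels = List[List[List[List[float]]]]  # [out_channel][in_channel][3][3]


def conv_layer(x: Image, kernels: Kernels) -> Image:
    """Matrix-valued 3x3 convolution: each output channel sums filtered input channels.

    Implemented as cross-correlation with zero padding so the output keeps the
    input size (the usual deep-learning convention; an assumption here).
    """
    rows, cols = len(x[0]), len(x[0][0])
    out = [[[0.0] * cols for _ in range(rows)] for _ in kernels]
    for o, k_out in enumerate(kernels):
        for k, channel in zip(k_out, x):
            for r in range(rows):
                for c in range(cols):
                    for dr in range(3):
                        for dc in range(3):
                            rr, cc = r + dr - 1, c + dc - 1
                            if 0 <= rr < rows and 0 <= cc < cols:
                                out[o][r][c] += k[dr][dc] * channel[rr][cc]
    return out


def add_bias(x: Image, bias: List[float]) -> Image:
    """Add one scalar bias per channel (the role of 'md_zadd' in the text)."""
    return [[[v + b for v in row] for row in ch] for ch, b in zip(x, bias)]


def relu(x: Image) -> Image:
    """Rectified linear unit f(x) = max(x, 0): an elementwise maximum with the scalar 0."""
    return [[[max(v, 0.0) for v in row] for row in ch] for ch in x]


def chain(*ops: Callable[[Image], Image]) -> Callable[[Image], Image]:
    """Compose operators so that they are applied left to right."""
    def apply(x: Image) -> Image:
        for op in ops:
            x = op(x)
        return x
    return apply


# Toy layer: 1 input channel -> 2 output channels.
identity = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
negated = [[-v for v in row] for row in identity]
kernels = [[identity], [negated]]
layer = chain(lambda x: conv_layer(x, kernels),
              lambda x: add_bias(x, [0.0, 0.5]),
              relu)
y = layer([[[1.0, -2.0], [3.0, 4.0]]])
```

Chaining small operators keeps each step testable on its own. A full network repeats the same conv, bias, ReLU pattern once per layer.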

As a proof of principle, we used a recent BART version [2] to implement a pre-trained convolutional neural network. The network used here was trained by residual learning [3]. It removes the image content from images corrupted by Gaussian noise, so its output is an estimate of the noise. For denoising, the network output therefore has to be subtracted from the input in a post-processing step. The network consists of 20 layers: 18 convolutional layers with 64 input and 64 output channels, one input layer with 1 input and 64 output channels, and one output layer with 64 input channels and 1 output channel. All matrix-valued convolutions use 3x3 kernels. Between consecutive convolutional layers, a bias is added to each channel, followed by a rectified linear unit (see Figure 1). Because the layers are almost identical, they are implemented simply as a loop of identical operations. The first layer has unused input channels and the last layer has unused output channels.

For testing, we implemented a command-line tool that takes the weights, the biases, and an input file and returns the denoised image as output. The convolution kernels of all layers were extracted from the MATLAB file 'model/GD_Gray_blind.mat' available on GitHub [4]. They were stored in a BART-compatible file 'nn_kernels' with dimensions 3x3x1x64x64x20. The biases were extracted in the same way and stored in a file 'nn_biases' with dimensions 1x1x1x1x64x20. Image denoising can then be performed with the new 'cnn' tool and these data files.

Results
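The kernel and bias files described in the Methods are ordinary multi-dimensional BART arrays. BART stores such an array as a text header (.hdr) plus raw complex-float data (.cfl) in column-major order, so the first dimension varies fastest. The sketch below computes the flat element offset of a kernel tap or a bias in arrays with dimensions 3x3x1x64x64x20 and 1x1x1x1x64x20. The helper names are hypothetical. The text does not say whether the fourth or the fifth dimension holds the input channels, so the index meaning used in the example is an assumption.

```python
# Hypothetical helpers: flat offsets in a column-major (first index fastest) array.
from math import prod
from typing import List, Sequence

KERNEL_DIMS = (3, 3, 1, 64, 64, 20)  # dimensions of 'nn_kernels' given in the text
BIAS_DIMS = (1, 1, 1, 1, 64, 20)     # dimensions of 'nn_biases' given in the text


def strides(dims: Sequence[int]) -> List[int]:
    """Element strides for column-major storage: the first dimension varies fastest."""
    result, step = [], 1
    for d in dims:
        result.append(step)
        step *= d
    return result


def flat_index(dims: Sequence[int], pos: Sequence[int]) -> int:
    """Offset (in elements) of the 0-based multi-index `pos`."""
    if len(pos) != len(dims) or not all(0 <= p < d for p, d in zip(pos, dims)):
        raise IndexError(f"position {tuple(pos)} outside dimensions {tuple(dims)}")
    return sum(p * s for p, s in zip(pos, strides(dims)))


# Assumed meaning of the indices: (ky, kx, z, channel_in, channel_out, layer).
tap = flat_index(KERNEL_DIMS, (1, 2, 0, 5, 7, 19))
bias = flat_index(BIAS_DIMS, (0, 0, 0, 0, 7, 19))
print(prod(KERNEL_DIMS), tap, bias)  # prints: 737280 704500 1223
```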

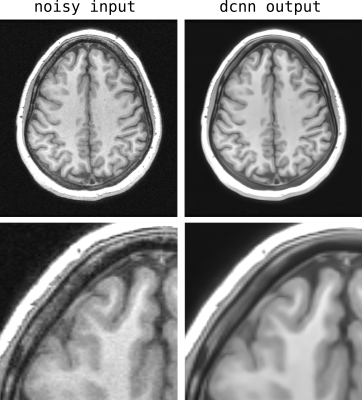

Image denoising was performed on an example brain image (3T, 8-channel, FLASH), stored as a magnitude image in the file 'noise_image', using the command:

> bart cnn nn_kernels nn_biases noise_image cnn_output

Figure 2 shows the noisy image 'noise_image' and the output of the neural network in the file 'cnn_output'. The denoising effect of the neural network is clearly visible.
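The computation performed by the 'cnn' call above can be sketched in pure Python. First, the single-channel input is padded with zero channels so that all layers share one shape. The layers then run in a loop, and channel 0 of the last layer is taken as the noise estimate and subtracted from the input. This is a hedged illustration, not the tool's source. The names `conv3x3`, `denoise`, and `center` are hypothetical, and the nested layout `kernels[layer][out][in][3][3]` need not match the dimension order of 'nn_kernels'. Applying a bias after every layer but a ReLU only between layers, so that the noise estimate can be negative, is also an assumption.

```python
# Hypothetical pure-Python sketch of the residual-learning denoiser loop (not BART code).
from typing import List

Plane = List[List[float]]  # [row][col]; a 3x3 kernel uses the same type


def conv3x3(x: List[Plane], w: List[List[Plane]], b: List[float], use_relu: bool) -> List[Plane]:
    """One layer: zero-padded 3x3 cross-correlation over all input channels, bias, optional ReLU."""
    rows, cols = len(x[0]), len(x[0][0])

    def pixel(plane: Plane, r: int, c: int) -> float:
        # Zero padding outside the image (avoids Python's negative-index wrap-around).
        return plane[r][c] if 0 <= r < rows and 0 <= c < cols else 0.0

    out = []
    for w_out, b_out in zip(w, b):
        plane = [[b_out + sum(k[dr][dc] * pixel(ch, r + dr - 1, c + dc - 1)
                              for k, ch in zip(w_out, x)
                              for dr in range(3) for dc in range(3))
                  for c in range(cols)] for r in range(rows)]
        out.append([[max(v, 0.0) for v in row] for row in plane] if use_relu else plane)
    return out


def denoise(image: Plane, kernels: List[List[List[Plane]]], biases: List[List[float]]) -> Plane:
    """Run all layers as a loop of identical operations and subtract the residual."""
    n_layers, n_ch = len(kernels), len(kernels[0])
    rows, cols = len(image), len(image[0])
    # First layer: only input channel 0 carries data; the other channels stay zero.
    x = [image] + [[[0.0] * cols for _ in range(rows)] for _ in range(n_ch - 1)]
    for layer in range(n_layers):
        x = conv3x3(x, kernels[layer], biases[layer], use_relu=layer < n_layers - 1)
    residual = x[0]  # last layer: only output channel 0 is used (the noise estimate)
    return [[p - q for p, q in zip(pr, qr)] for pr, qr in zip(image, residual)]


def center(v: float) -> Plane:
    """3x3 kernel with a single nonzero center tap."""
    return [[0.0, 0.0, 0.0], [0.0, v, 0.0], [0.0, 0.0, 0.0]]


# Toy network: 2 channels and 3 layers instead of 64 channels and 20 layers.
C = 2
gains = (1.0, 1.0, 0.5)  # channel 0 -> channel 0 gain per layer; every other tap is zero
kernels = [[[center(g) if o == 0 and i == 0 else center(0.0) for i in range(C)]
            for o in range(C)] for g in gains]
biases = [[0.0] * C for _ in gains]
noisy = [[2.0, 4.0], [6.0, 8.0]]
clean = denoise(noisy, kernels, biases)  # the noise estimate is 0.5 * noisy here
```

The toy weights are chosen so the result can be checked by hand. The noise estimate is half the input, so `clean` equals half of `noisy`. Real weights would come from 'nn_kernels' and 'nn_biases'.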

Conclusion

The BART toolbox is a convenient framework for advanced image processing tasks. Deep convolutional neural networks can easily be implemented in BART based on existing functionality. A denoising tool based on residual learning has been implemented as a proof-of-principle application.

References

1. Uecker M, Ong F, Tamir JI, et al. Berkeley Advanced Reconstruction Toolbox. Proc Intl Soc Mag Reson Med 2015;23:2486 (Annual Meeting ISMRM, Toronto 2015).

2. https://github.com/mrirecon/bart

3. Zhang K, Zuo W, Chen Y, Meng D, Zhang L. Beyond a Gaussian Denoiser: Residual Learning of Deep CNN for Image Denoising. IEEE Trans Image Process 2017;26:3142-3155.

4. https://github.com/cszn/DnCNN (commit 95ce6fe898224ca882d00c9419764a98ef8fcaf4)
