2731

2D Single Plane Big Data Convolutional Neural Network for Skull-Stripping

¹University of Campinas, Campinas, Brazil; ²University of Calgary, Calgary, AB, Canada

Synopsis

Convolutional neural networks for MR image segmentation require a large amount of

Introduction

Segmenting brain tissue from non-brain tissue is known as skull-stripping (SS) or brain extraction. In magnetic resonance (MR) imaging, brain extraction is frequently an essential processing step that facilitates subsequent analyses.¹ Convolutional neural networks (CNNs) have emerged as a promising approach in medical image processing.² Training a new CNN often requires large amounts of labeled data, and labeling is frequently done manually by experts, a time-consuming and labor-intensive task.² One solution for generating labeled data for large-scale neuroimaging studies is to use consensus algorithms, which generate annotated data based on the agreement among automated SS methods.³,⁴ Moreover, silver-standard masks (i.e., masks generated using a consensus algorithm) have been validated as training data for CNN-based SS,⁵ further suggesting a role for them in data augmentation. Our goal is to show that our single-plane 2D CNN, trained with consensus-based augmentation using silver-standard masks, achieves performance comparable to, but is faster than, state-of-the-art SS methods.

Materials and Methods

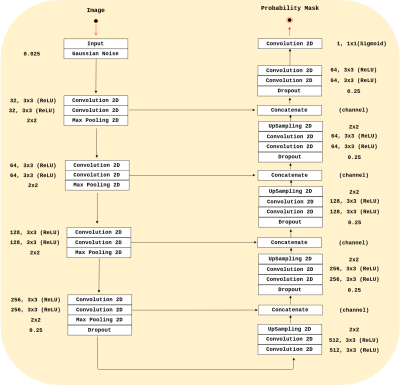

Our analyses were conducted on three publicly available datasets: Calgary-Campinas-359 (CC-359),⁶ the LONI Probabilistic Brain Atlas (LPBA40),⁷ and the Open Access Series of Imaging Studies (OASIS).⁸ Our method used a modified 2D U-Net⁹ architecture (Figure 1) inspired by the RECOD Titans architecture.¹⁰ We trained our CNN using the 347 silver-standard mask volumes generated by Simultaneous Truth and Performance Level Estimation (STAPLE)³ and provided with the CC-359 dataset. We based our analysis on the sagittal view, since it is often the view raters choose when manually segmenting a brain image volume.
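STAPLE³ treats each automated SS output as a rater with unknown sensitivity and specificity and uses expectation-maximization (EM) to estimate both these rater parameters and a consensus (silver-standard) mask. The following is a minimal, simplified binary sketch for illustration only, not the implementation used to build the CC-359 silver-standard masks; the flattened 0/1 lists, the fixed global foreground prior, the 0.99 initial sensitivity/specificity, and the function name `staple_binary` are our assumptions.

```python
from math import prod  # Python 3.8+


def staple_binary(decisions, max_iter=100, init=0.99, tol=1e-8):
    """Simplified binary STAPLE (EM) on flattened masks.

    decisions: list of rater masks, each a list of 0/1 values of equal length
    (e.g. flattened brain masks from different automated SS methods).
    Returns (consensus_mask, sensitivities, specificities).
    """
    n_raters, n_vox = len(decisions), len(decisions[0])
    eps = 1e-12
    # Assumption: a fixed global prior equal to the mean foreground vote.
    f = sum(map(sum, decisions)) / (n_raters * n_vox)
    p = [init] * n_raters  # per-rater sensitivity estimates
    q = [init] * n_raters  # per-rater specificity estimates
    w = [0.0] * n_vox      # posterior P(true label = brain) per voxel
    for _ in range(max_iter):
        # E-step: posterior probability that each voxel is truly brain.
        for i in range(n_vox):
            a = f * prod(p[j] if decisions[j][i] else 1 - p[j] for j in range(n_raters))
            b = (1 - f) * prod(1 - q[j] if decisions[j][i] else q[j] for j in range(n_raters))
            w[i] = a / (a + b) if a + b > 0 else 0.5
        # M-step: re-estimate each rater's sensitivity and specificity.
        sw = max(sum(w), eps)
        sw0 = max(sum(1 - wi for wi in w), eps)
        new_p = [sum(wi for wi, d in zip(w, decisions[j]) if d) / sw for j in range(n_raters)]
        new_q = [sum(1 - wi for wi, d in zip(w, decisions[j]) if not d) / sw0
                 for j in range(n_raters)]
        change = max(abs(x - y) for x, y in zip(new_p + new_q, p + q))
        p, q = new_p, new_q
        if change < tol:
            break
    consensus = [1 if wi >= 0.5 else 0 for wi in w]
    return consensus, p, q


raters = [
    [1, 1, 1, 1, 0, 0, 0, 0],  # toy automated method A
    [1, 1, 1, 0, 0, 0, 0, 0],  # toy method B misses one brain voxel
    [1, 1, 1, 1, 1, 0, 0, 0],  # toy method C includes one extra voxel
]
mask, sens, spec = staple_binary(raters)
print(mask)  # [1, 1, 1, 1, 0, 0, 0, 0]
```

Unlike simple majority voting, the EM weighting lets a rater that is consistently reliable count for more, which is why the estimated sensitivity of method B and specificity of method C come out lower than those of method A.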

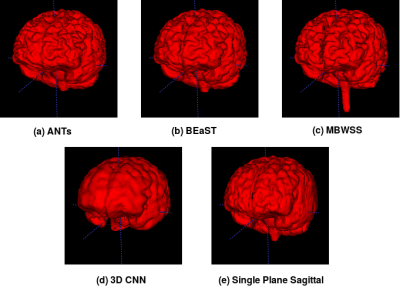

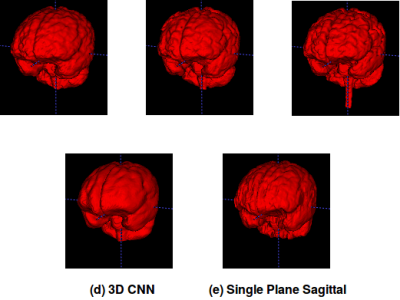

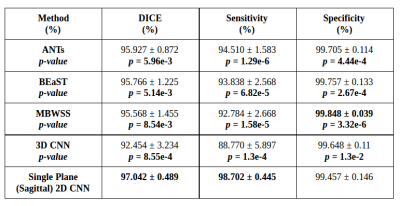

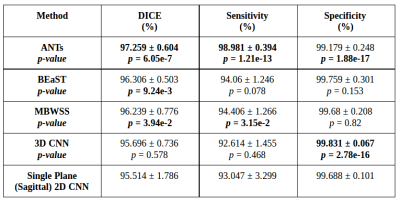

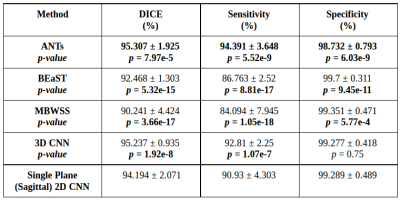

We validated our method against twelve manual segmentations (CC-12, the gold-standard masks from the CC-359 dataset used for testing) and against the manually segmented images from the LPBA40 and OASIS datasets. We used three performance metrics (Dice coefficient, sensitivity, and specificity) and compared our results with four state-of-the-art published methods. Three of these are not based on deep learning: 1) Advanced Normalization Tools (ANTs),¹¹ 2) Brain Extraction Based on Nonlocal Segmentation Technique (BEaST),¹² and 3) Marker-based Watershed Scalper (MBWSS).¹³ The fourth is 4) a recently published deep-learning-based method that we refer to as 3D CNN.¹⁴ Paired Student's t-tests were used to assess the statistical significance of differences between our method and each of the others.
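The three metrics are voxel-wise comparisons between a predicted mask and a manual (gold-standard) mask: Dice = 2TP/(2TP + FP + FN), sensitivity = TP/(TP + FN), and specificity = TN/(TN + FP), where TP, TN, FP, and FN count true/false positive and negative voxels. The following is a minimal pure-Python sketch, assuming flattened binary masks of equal length; the function names are hypothetical and this is not the authors' evaluation code. `paired_t` returns only the paired t statistic and its degrees of freedom over per-volume scores; the p-value then comes from the t distribution with n − 1 degrees of freedom (for example via SciPy's `scipy.stats.ttest_rel`).

```python
import math
import statistics


def confusion_counts(pred, truth):
    """Voxel-wise (TP, TN, FP, FN) for two flattened binary masks of equal length."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of voxels")
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    tn = sum(1 for p, t in zip(pred, truth) if not p and not t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    return tp, tn, fp, fn


def dice(pred, truth):
    tp, _, fp, fn = confusion_counts(pred, truth)
    denom = 2 * tp + fp + fn
    return 1.0 if denom == 0 else 2 * tp / denom  # both masks empty: perfect agreement


def sensitivity(pred, truth):
    tp, _, _, fn = confusion_counts(pred, truth)
    return tp / (tp + fn)


def specificity(pred, truth):
    _, tn, fp, _ = confusion_counts(pred, truth)
    return tn / (tn + fp)


def paired_t(scores_a, scores_b):
    """Paired Student's t statistic and degrees of freedom for per-volume scores."""
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1


pred = [1, 1, 0, 0, 1]   # toy predicted mask
truth = [1, 0, 0, 1, 1]  # toy manual mask
print(dice(pred, truth), sensitivity(pred, truth), specificity(pred, truth))
# Dice 2/3, sensitivity 2/3, specificity 1/2
```

Because background voxels far outnumber brain voxels in a head MR volume, specificity tends to be close to 1 for any reasonable SS method, so Dice and sensitivity usually separate methods more clearly.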

Results and Discussion

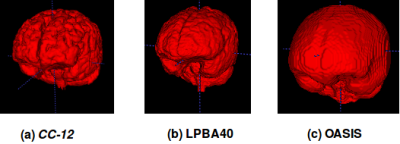

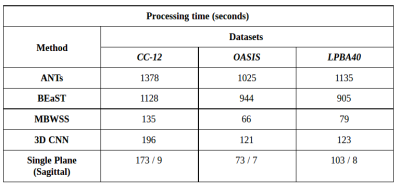

Representative 3D reconstructions of the manual segmentations from each dataset are shown in Figure 2, and 3D reconstructions comparing our method with the four published state-of-the-art methods are shown in Figures 3-5. Tables 1-3 summarize the comparison of our method against the other four methods on the three metrics, and Table 4 compares processing times.

Our single-plane 2D CNN method is comparable to the most robust SS algorithms presented in the literature (Tables 1-3) and faster than ANTs, BEaST, and 3D CNN (Table 4). Improvements in some metrics were statistically significant (p < 0.05), in particular for the CC-12 subset (Table 1). Performance also generalized well to the LPBA40 and OASIS datasets (Tables 2 and 3). Our method is limited to the sagittal view of the 3D image volume; a combined approach that also uses the two other orthogonal views (i.e., coronal and axial) could improve the results. Our method was also trained using silver-standard masks, whereas the 3D CNN model was trained using gold-standard manual segmentations. Further, manual segmentations can vary across expert raters (Figure 2), potentially influencing the performance metric evaluation for different datasets. For instance, skull-stripping with ANTs had a higher Dice coefficient for the OASIS dataset, whose manual segmentations are spatially smoother than those of the other datasets.
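Single-plane processing amounts to running a 2D network on every slice along one axis and restacking the per-slice outputs into a 3D map; the combined approach suggested above would repeat this along all three axes and fuse the results. The sketch below illustrates both under stated assumptions: the predictor callables are hypothetical stand-ins for trained 2D U-Nets, treating axis 0 as sagittal assumes a left-right first index (the actual orientation depends on the image header), and averaging the three probability maps is just one simple fusion rule, not one evaluated in this work.

```python
from typing import Callable, List, Sequence

Volume = List[List[List[float]]]          # indexed vol[i][j][k]
Slice2D = List[List[float]]
Predictor = Callable[[Slice2D], Slice2D]  # hypothetical trained 2D network


def get_slice(vol: Volume, axis: int, idx: int) -> Slice2D:
    """Return the 2D slice at position idx along axis 0, 1, or 2."""
    nx, ny, nz = len(vol), len(vol[0]), len(vol[0][0])
    if axis == 0:  # assumed sagittal if the first index runs left-right
        return [[vol[idx][j][k] for k in range(nz)] for j in range(ny)]
    if axis == 1:
        return [[vol[i][idx][k] for k in range(nz)] for i in range(nx)]
    return [[vol[i][j][idx] for j in range(ny)] for i in range(nx)]


def predict_along_axis(vol: Volume, axis: int, predict_slice: Predictor) -> Volume:
    """Apply a 2D predictor slice by slice along one axis and restack into 3D."""
    nx, ny, nz = len(vol), len(vol[0]), len(vol[0][0])
    out = [[[0.0] * nz for _ in range(ny)] for _ in range(nx)]
    for idx in range((nx, ny, nz)[axis]):
        probs = predict_slice(get_slice(vol, axis, idx))
        for a, row in enumerate(probs):
            for b, value in enumerate(row):
                if axis == 0:
                    out[idx][a][b] = value
                elif axis == 1:
                    out[a][idx][b] = value
                else:
                    out[a][b][idx] = value
    return out


def fuse_views(vol: Volume, predictors: Sequence[Predictor], threshold: float = 0.5):
    """Average one probability map per axis, then threshold to a binary mask."""
    maps = [predict_along_axis(vol, axis, p) for axis, p in zip((0, 1, 2), predictors)]
    nx, ny, nz = len(vol), len(vol[0]), len(vol[0][0])
    return [[[1 if sum(m[i][j][k] for m in maps) / len(maps) >= threshold else 0
              for k in range(nz)] for j in range(ny)] for i in range(nx)]


# Toy usage: an intensity threshold stands in for a trained network.
def toy_predictor(sl: Slice2D) -> Slice2D:
    return [[1.0 if v > 0.5 else 0.0 for v in row] for row in sl]


vol = [[[((i * 12 + j * 4 + k) % 5) / 4 for k in range(4)] for j in range(3)] for i in range(2)]
sagittal_probs = predict_along_axis(vol, 0, toy_predictor)  # single-plane, as in this work
fused_mask = fuse_views(vol, [toy_predictor] * 3)            # hypothetical three-view fusion
```

Passing one predictor per axis mirrors training a separate network for each orthogonal view; reusing the same toy callable three times here is only for illustration.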

Conclusion

In this work, we have proposed a single-plane 2D CNN method for SS. Our model was trained using silver-standard masks, yet its performance is comparable to that of other state-of-the-art SS methods. This work demonstrates that silver-standard brain masks may be leveraged for large-scale studies in medical image processing. A combined approach involving the other (orthogonal) views may yield a more robust method.

Acknowledgements

Oeslle Lucena thanks FAPESP (2016/18332-8). Roberto Lotufo thanks CNPq (311228/2014-3). Leticia Rittner thanks CNPq (308311/2016-7). Roberto Souza thanks the NSERC CREATE I3T foundation. Richard Frayne is supported by the Canadian Institutes of Health Research (CIHR, MOP-333931).

References

1. P. Kalavathi, V. S. Prasath, Methods on skull stripping of MRI head scan images: a review, Journal of Digital Imaging 29 (2016) 365–379.

2. H. Greenspan, B. van Ginneken, R. M. Summers, Guest editorial: Deep learning in medical imaging: overview and future promise of an exciting new technique, IEEE Trans. Med. Imag. 35 (2016) 1153–1159.

3. S. K. Warfield, K. H. Zou, W. M. Wells, Simultaneous truth and performance level estimation (STAPLE): an algorithm for the validation of image segmentation, IEEE Trans. Med. Imag. 23 (2004) 903–921.

4. A. J. Asman, B. A. Landman, Robust statistical label fusion through consensus level, labeler accuracy, and truth estimation (COLLATE), IEEE Trans. Med. Imag. 30 (2011) 1779–1794.

5. O. Lucena, R. Souza, L. Rittner, R. Frayne, R. Lotufo, Silver standard masks for data augmentation applied to deep-learning-based skull-stripping, arXiv e-prints (2017).

6. R. Souza, O. Lucena, J. Garrafa, D. Gobbi, M. Saluzzi, S. Appenzeller, L. Rittner, R. Frayne, R. Lotufo, An open, multi-vendor, multi-field-strength brain MR dataset and analysis of publicly available skull stripping methods agreement, NeuroImage (2017).

7. D. W. Shattuck, M. Mirza, V. Adisetiyo, C. Hojatkashani, G. Salamon, K. L. Narr, R. A. Poldrack, R. M. Bilder, A. W. Toga, Construction of a 3D probabilistic atlas of human cortical structures, NeuroImage 39 (2008) 1064–1080.

8. D. S. Marcus, T. H. Wang, J. Parker, J. G. Csernansky, J. C. Morris, R. L. Buckner, Open Access Series of Imaging Studies (OASIS): cross-sectional MRI data in young, middle aged, nondemented, and demented older adults, Journal of Cognitive Neuroscience 19 (2007) 1498–1507.

9. O. Ronneberger, P. Fischer, T. Brox, U-Net: Convolutional networks for biomedical image segmentation, in: International Conference on Medical Image Computing and Computer-Assisted Intervention, Springer, pp. 234–241.

10. A. Menegola, J. Tavares, M. Fornaciali, L. T. Li, S. Avila, E. Valle, RECOD Titans at ISIC Challenge 2017, arXiv preprint arXiv:1703.04819 (2017).

11. B. B. Avants, N. J. Tustison, G. Song, P. A. Cook, A. Klein, J. C. Gee, A reproducible evaluation of ANTs similarity metric performance in brain image registration, NeuroImage 54 (2011) 2033–2044.

12. S. F. Eskildsen, P. Coupé, V. Fonov, J. V. Manjón, K. K. Leung, N. Guizard, S. N. Wassef, L. R. Østergaard, D. L. Collins, A. D. N. Initiative, et al., BEaST: brain extraction based on nonlocal segmentation technique, NeuroImage 59 (2012) 2362–2373.

13. R. Beare, J. Chen, C. L. Adamson, T. Silk, D. K. Thompson, J. Y. Yang, V. A. Anderson, M. L. Seal, A. G. Wood, Brain extraction using the watershed transform from markers, Frontiers in Neuroinformatics 7 (2013).

14. J. Kleesiek, G. Urban, A. Hubert, D. Schwarz, K. Maier-Hein, M. Bendszus, A. Biller, Deep MRI brain extraction: a 3D convolutional neural network for skull stripping, NeuroImage 129 (2016) 460–469.

Figures