2713

MR Image Synthesis For Glioma Segmentation

1Athinoula A. Martinos Center for Biomedical Imaging, Massachusetts General Hospital, Boston, MA, United States

Synopsis

Deep learning has become the method of choice for tumor segmentation. Most deep learning algorithms incorporate a multi-modal approach, as different MR modalities are optimized to detect different aspects of tumor. However, modalities are often missing or unusable due to artifacts. In such cases, it is difficult to perform robust automatic tumor segmentation. We demonstrate that a convolutional neural network can be used to synthesize FLAIR MR images that have high similarity with real FLAIR images. Furthermore, we show that the use of these synthetic images can improve segmentation performance.

Introduction

Segmentation of glioma regions within MR imaging is important for the assessment of tumor volumes and quantification of imaging features.1 Because manual delineation of tumor regions is labor intensive, challenging, and subject to inter-rater variability, there has been high interest in automatic approaches. With advances in graphics processing units, deep learning has become the method of choice for robust tumor segmentation.2 Most deep learning algorithms incorporate a multi-modal approach, as different MR modalities are optimized to detect different aspects of tumor. For example, edema is best seen on a FLAIR MR image, while contrast-enhancing tumor is best seen on a T1 post-contrast MR image. Unfortunately, modalities are often missing due to differences in imaging protocol across institutions. Furthermore, modalities may be unusable due to imaging artifacts or excessive patient motion. Such cases call for a segmentation method that remains robust when modalities are missing. Previous approaches include mean-filling, embedding of input images into a latent space, and use of a multi-layer perceptron to synthesize the image.3 Here, we demonstrate that a convolutional neural network can be used to synthesize missing modalities, and that those synthetic images can be used to create more accurate automatic segmentation tools than could otherwise be achieved.

Methods

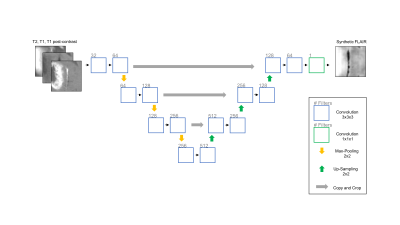
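As a rough illustration of the patch preparation used in this section (whole-brain normalization, random 32x32x32 patches, sagittal flipping), the following NumPy sketch may help. It is not the authors' code: the function names, the brain mask argument, the choice of the first spatial axis as left-right, and the 50% flip probability are all assumptions made for the example.

```python
import numpy as np


def normalize_to_brain(volume, brain_mask):
    """Scale a volume to zero mean and unit variance over brain voxels only."""
    brain_voxels = volume[brain_mask]
    return (volume - brain_voxels.mean()) / brain_voxels.std()


def sample_patches(inputs, target, n_patches=50, size=32, rng=None):
    """Draw random cubic patches from co-registered volumes.

    inputs: array of shape (channels, X, Y, Z), e.g. T2, T1, T1 post-contrast
    target: array of shape (X, Y, Z), e.g. the real FLAIR volume
    """
    rng = np.random.default_rng() if rng is None else rng
    x_patches, y_patches = [], []
    for _ in range(n_patches):
        # Choose the patch corner so the whole cube stays inside the volume.
        corner = [int(rng.integers(0, dim - size + 1)) for dim in target.shape]
        window = tuple(slice(c, c + size) for c in corner)
        x = inputs[(slice(None),) + window]
        y = target[window]
        # Assumption: the first spatial axis is left-right, so reversing it
        # mirrors the patch across the sagittal plane. Flipping half of the
        # patches at random is also an assumption.
        if rng.random() < 0.5:
            x = x[:, ::-1]
            y = y[::-1]
        x_patches.append(x)
        y_patches.append(y)
    # np.stack copies the (possibly reversed) views into contiguous arrays.
    return np.stack(x_patches), np.stack(y_patches)
```

Input and target patches share the same window and the same flip, so each synthesized voxel stays aligned with the voxel it is compared against in the loss.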

We utilized the BRATS 2017 dataset, which contains MR imaging (T2, T1 pre-contrast, T1 post-contrast, FLAIR) of low- and high-grade gliomas with expert manual tumor segmentations. The dataset was divided into training (n = 171), validation (n = 57), and testing sets (n = 57). We trained a 3D U-Net to synthesize the FLAIR image, using T2, T1, and T1 post-contrast as input, for 30 epochs.4 To train the 3D U-Net, 50 random 32x32x32 voxel patches were extracted from each patient in the training set and augmented by means of sagittal flipping. All data were normalized to zero mean and unit variance with respect to the whole brain. The tanh activation was used with mean squared error as the objective function. We then trained three 3D U-Nets to perform whole tumor segmentation: 1) using 3 modalities as input (T2, T1, T1 post-contrast); 2) using all 4 modalities as input; and 3) using 3 modalities and synthetic FLAIR as input. For these networks, we used the ReLU activation instead of tanh. The performance of the networks was evaluated using the Dice similarity coefficient.

Results

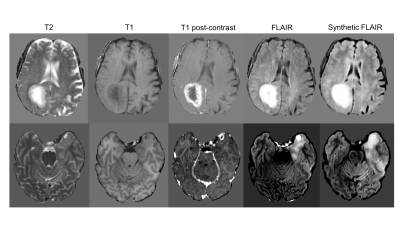

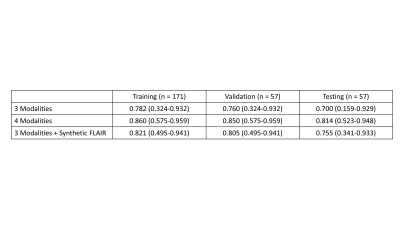
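The two metrics reported in this section, root-mean-squared error for the synthesized images and the Dice similarity coefficient for the segmentations, can be sketched as follows. This is a minimal NumPy illustration, not the authors' evaluation code: the function names, the optional mask argument, and the convention of returning 1.0 when both masks are empty are assumptions. The abstract also does not state whether the error was computed over the whole volume or only within the brain.

```python
import numpy as np


def dice_coefficient(pred_mask, true_mask):
    """Dice similarity coefficient, 2|A∩B| / (|A| + |B|), for binary masks."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    denom = pred.sum() + true.sum()
    if denom == 0:
        # Assumed convention: two empty masks count as perfect agreement.
        return 1.0
    return float(2.0 * np.logical_and(pred, true).sum() / denom)


def rmse(synthetic, real, mask=None):
    """Root-mean-squared error between two images, optionally within a mask."""
    diff = np.asarray(synthetic, dtype=float) - np.asarray(real, dtype=float)
    if mask is not None:
        diff = diff[np.asarray(mask, dtype=bool)]
    return float(np.sqrt(np.mean(diff ** 2)))
```

A Dice value of 1 means the predicted and manual tumor masks overlap exactly, and 0 means they do not overlap at all. Because the images were normalized to unit variance, the error values are in units of standard deviations of brain intensity if they were computed on the normalized images.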

The synthesized FLAIR images had high similarity with real FLAIR images, with a root-mean-squared error of 0.302, 0.297, and 0.310 within the training, validation, and testing sets, respectively. When trained with 3 modalities, automatic segmentation had poor performance, with Dice similarity coefficients of 0.782 (95% Confidence Interval, 0.324-0.932), 0.760 (0.324-0.932), and 0.700 (0.159-0.929) within the training, validation, and testing sets. When trained with 4 modalities, automatic segmentation had high performance, with Dice of 0.860 (0.575-0.959), 0.850 (0.575-0.959), and 0.814 (0.523-0.948). When trained with 3 modalities + the synthetic FLAIR, performance improved over the network trained with 3 modalities alone: the training, validation, and testing Dice were 0.821 (0.495-0.941), 0.805 (0.495-0.941), and 0.755 (0.341-0.933).

Discussion

We show that segmentation performance is compromised when the FLAIR modality is missing. To resolve this, we propose using a 3D U-Net for synthesis of MR images. We show that FLAIR can be synthesized from other MR modalities and that it provides information that is useful for multi-modal automatic segmentation. Specifically, the use of 3 modalities + synthetic FLAIR images for tumor segmentation has markedly improved performance over using 3 modalities alone. This is a surprising result considering that all information within the synthetic FLAIR image was learned from non-FLAIR modalities. Synthetic images may also be useful in clinical practice, as neuroradiologists may prefer to have all modalities on hand when dictating a patient case. Future work can extend this approach to other missing modalities and evaluate the use of synthetic images for segmentation of tumor subcomponents, such as necrosis and enhancing tumor. Furthermore, this work can be extended to other imaging modalities, such as CT and PET. Overall, this work demonstrates the potential of neural networks for image synthesis.

Conclusion

We demonstrate the capacity of a neural network to synthesize FLAIR images from non-FLAIR images. Furthermore, we demonstrate that synthetic FLAIR images are useful for multi-modal automatic segmentation.

Acknowledgements

We would like to acknowledge the GPU computing resources provided by the MGH and BWH Center for Clinical Data Science.

References

1. Rios Velazquez E, Meier R, Dunn Jr WD, et al. Fully automatic GBM segmentation in the TCGA-GBM dataset: Prognosis and correlation with VASARI features. Sci Rep. 2015;5(1):16822. doi:10.1038/srep16822.

2. Kamnitsas K, Ledig C, Newcombe VFJ, et al. Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Med Image Anal. 2017;36:61-78. doi:10.1016/j.media.2016.10.004.

3. Havaei M, Guizard N, Chapados N, Bengio Y. HeMIS: Hetero-Modal Image Segmentation. July 2016. http://arxiv.org/abs/1607.05194. Accessed November 6, 2017.

4. Çiçek Ö, Abdulkadir A, Lienkamp SS, Brox T, Ronneberger O. 3D U-Net: Learning dense volumetric segmentation from sparse annotation. In: Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). Vol 9901 LNCS. 2016:424-432. doi:10.1007/978-3-319-46723-8_49.

Figures