2420

Automatic Breast and Fibroglandular Tissue Segmentation Using Deep Learning by a Fully-Convolutional Residual Neural Network

Yang Zhang1, Vivian Youngjean Park2, Min Jung Kim2, Peter Chang3, Melissa Khy1, Daniel Chow1, Jeon-Hor Chen1, Alex Luk1, and Min-Ying Su1

1Department of Radiological Sciences, University of California, Irvine, CA, United States, 2Department of Radiology and Research Institute of Radiological Science, Severance Hospital, Yonsei University College of Medicine, Seoul, Republic of Korea, 3Department of Radiology, University of California, San Francisco, CA, United States

Synopsis

A deep learning method using the fully-convolutional residual neural network (FCR-NN) was applied to segment the whole breast and fibroglandular tissue in 289 patients. The Dice similarity coefficient (DSC) and accuracy were calculated as evaluation metrics. For breast segmentation, the mean DSC was 0.85 with an accuracy of 0.93; for fibroglandular tissue segmentation, the mean DSC was 0.67 with an accuracy of 0.75. The percent density values calculated from the ground truth and network segmentations were highly correlated (r=0.9). The initial results are promising, suggesting that deep learning has the potential to provide an efficient and reliable breast density segmentation tool.

Introduction:

Many studies have shown that breast density is an independent risk factor for developing breast cancer [1]. MRI acquires 3D images with high tissue contrast, allowing for volumetric measurements and characterization of morphological distribution patterns [2]. There is evidence suggesting that the morphological distribution of adipose and fibroglandular tissue may affect cancer risk [3,4]. For women at extremely high risk, or women diagnosed with hormone receptor-positive breast cancer, hormonal therapy such as tamoxifen or aromatase inhibitors is used to decrease cancer risk, and the reduction of breast density has been shown to be a promising response predictor [5]. Over the past two decades, many computer-aided breast density segmentation methods have been developed. Although the results are promising, some operator intervention is often needed, so these methods are not fully automatic. Deep learning is a novel method that can be applied to perform breast and density segmentation, as demonstrated in a recent study by Dalmış et al. [6]. The purpose of this study is to apply the deep learning methodology to a large breast MRI dataset to test its accuracy and to investigate its limitations.

Methods:

Breast MRI scans from 289 consecutive patients diagnosed with breast cancer (age 30-80 years, median 49) were analyzed. The MRIs were performed for either diagnosis or pre-operative staging. For this study, regions containing breast cancer were considered to be contiguous with, and part of, the adjacent fibroglandular tissue. MRI was performed on a 3T Siemens scanner. Pre-contrast T1w images were identified from the DCE acquisition and used for analysis. For segmentation of the breast, a well-established template-based method [7] was used; an example is shown in Figure 1. The next step was to differentiate fibroglandular tissue from fat. Bias-field correction was done using combined Nonparametric Nonuniformity Normalization (N3) and fuzzy C-means (FCM) algorithms [8], and K-means clustering was then used to separate fibroglandular from fatty tissue at the voxel level. Segmentation results were inspected by an experienced radiologist and manually corrected as necessary.

A fully-convolutional residual neural network (FCR-NN) was constructed to segment the breast and fibroglandular tissue [6,9]. This “U-net” architecture, shown in Figure 2, consists of a combination of convolution and max-pooling layers in the collapsing (descending) arm, followed by a series of up-sampling operations implemented by convolutional transpose operations in the expanding (ascending) arm. The arrows between the two arms show how the information available at the down-sampling steps is incorporated into the up-sampling operations. In this way, the fine-detail information captured in the descending part of the network is used in the ascending part. The network was trained using a cross-entropy loss function and the Adam optimizer with an initial learning rate of 0.001 [10]. Using this architecture, a first network was trained to segment the whole breast. Then, within the segmented breast area, a second network was used to separate fibroglandular tissue from fatty tissue.
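As a rough illustration of the K-means step used to build the ground-truth masks, the sketch below clusters the voxel intensities inside a breast mask into two groups. It is not the authors' pipeline, which also involves bias-field correction and radiologist review. The use of exactly two clusters, the function names `kmeans_two_class` and `fibroglandular_mask`, and the rule that the darker cluster is fibroglandular tissue (plausible for non-fat-suppressed T1w images, where fat is bright) are all assumptions made for this example.

```python
import numpy as np


def kmeans_two_class(intensities, n_iter=50):
    """Two-cluster K-means on 1-D intensities (assumed setup, not from the paper).

    Returns (labels, centers); cluster 0 is the darker cluster because the
    centers are initialised at the minimum and maximum intensity.
    """
    x = np.asarray(intensities, dtype=float).ravel()
    centers = np.array([x.min(), x.max()])
    labels = np.zeros(x.shape, dtype=int)
    for _ in range(n_iter):
        # Assign every voxel to its nearest cluster center.
        labels = np.abs(x[:, None] - centers[None, :]).argmin(axis=1)
        # Recompute each center as the mean of its members (keep it if empty).
        new_centers = np.array([
            x[labels == k].mean() if np.any(labels == k) else centers[k]
            for k in range(2)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers


def fibroglandular_mask(image, breast_mask):
    """Label the darker cluster inside the breast mask as fibroglandular tissue."""
    image = np.asarray(image, dtype=float)
    breast_mask = np.asarray(breast_mask, dtype=bool)
    labels, _ = kmeans_two_class(image[breast_mask])
    fgt = np.zeros(image.shape, dtype=bool)
    fgt[breast_mask] = labels == 0  # assumption: fat is bright, tissue is dark
    return fgt
```

Voxels outside the breast mask are never clustered, so background and chest intensities do not pull the cluster centers.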
The outputs of the two networks were probability maps. A threshold of 0.5 was used to generate the final segmentation results. The Dice similarity coefficient (DSC) and accuracy (percentage of correctly segmented pixels) were calculated as the evaluation metrics. In addition, the percent density values calculated from the ground truth and network segmentations were correlated.

Results:
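The thresholding and evaluation metrics described in Methods could be computed along the following lines. This is a minimal sketch, not the authors' code: the function names are hypothetical, treating a probability of exactly 0.5 as foreground is an assumption, and accuracy is taken over every pixel of the arrays passed in, since the abstract does not state the region over which it was computed.

```python
import numpy as np


def binarize(prob_map, threshold=0.5):
    """Turn a probability map into a binary mask (>= threshold is an assumption)."""
    return np.asarray(prob_map, dtype=float) >= threshold


def dice_coefficient(pred, truth):
    """Dice similarity coefficient: 2|A ∩ B| / (|A| + |B|)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    denom = pred.sum() + truth.sum()
    if denom == 0:
        return 1.0  # both masks empty: treated here as perfect agreement
    return float(2.0 * np.logical_and(pred, truth).sum() / denom)


def pixel_accuracy(pred, truth):
    """Fraction of pixels whose predicted label matches the ground truth."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    return float(np.mean(pred == truth))
```

Because accuracy counts correctly labelled background as well as foreground, it can stay high even when the DSC is low, which is the pattern seen in the fatty-breast example of Figure 4 (DSC 0.23, accuracy 0.77).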

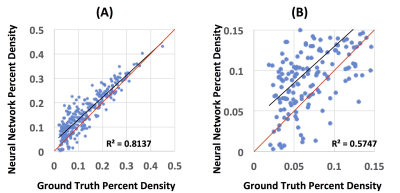

A 10-fold cross-validation scheme was used to evaluate network performance. For whole breast segmentation, the DSC range was 0.61-0.98 (mean 0.85) with an accuracy range of 0.80-0.99 (mean 0.93). For fibroglandular tissue segmentation, the DSC range was 0.13-0.87 (mean 0.67) with an accuracy range of 0.42-0.95 (mean 0.75). A satisfactory case and a poor case are shown in Figure 3 and Figure 4, respectively. The Pearson correlation coefficient of the percent density calculated from the ground truth and network segmentations was r=0.90, as shown in Figure 5.

Conclusions:

An efficient and reliable breast density segmentation method may help a woman assess her cancer risk more accurately and choose an optimal screening and management strategy. For patients receiving hormonal therapy, it may also be used to evaluate whether the drug is working. In this study we tested a deep-learning method using the Fully-convolutional Residual Neural Network (FCR-NN) previously reported by Dalmış et al. [6]. One great advantage of the U-net architecture is its capability to analyze entire images of arbitrary sizes without dividing them into patches, which makes it suitable for segmentation of large structures like the breast. Although the results are promising, segmentation in fatty breasts is challenging, which is true for all segmentation methods. Whether deep learning can provide a clinically acceptable breast density segmentation tool for patient management needs to be further investigated.

Acknowledgements

This study is supported in part by NIH R01 CA127927 and a Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (NRF-2017R1D1A1B03035995).

References

[1] Santen RJ, Boyd NF, Chlebowski RT, Cummings S, Cuzick J, Dowsett M, Easton D, Forbes JF, Key T, Hankinson SE, Howell A, Ingle J; Breast Cancer Prevention Collaborative Group. Critical assessment of new risk factors for breast cancer: considerations for development of an improved risk prediction model. Endocr Relat Cancer. 2007;14(2):169-187.

[2] Nie K, Chang D, Chen JH, Hsu CC, Nalcioglu O, Su MY. Quantitative analysis of breast parenchymal patterns using 3D fibroglandular tissues segmented based on MRI. Med Phys. 2010 Jan;37(1):217-226.

[3] Schäffler A, Schölmerich J, Buechler C. Mechanisms of disease: adipokines and breast cancer - endocrine and paracrine mechanisms that connect adiposity and breast cancer. Nat Clin Pract Endocrinol Metab. 2007 Apr;3(4):345-354.

[4] Boyd NF, Guo H, Martin LJ, et al. Mammographic density and the risk and detection of breast cancer. N Engl J Med. 2007 Jan 18;356(3):227-236.

[5] Cuzick J, Warwick J, Pinney E, et al. Tamoxifen-induced reduction in mammographic density and breast cancer risk reduction: a nested case-control study. J Natl Cancer Inst. 2011;103(9):744-752.

[6] Dalmış MU, Litjens G, Holland K, Setio A, Mann R, Karssemeijer N, Gubern-Mérida A. Using deep learning to segment breast and fibroglandular tissue in MRI volumes. Med Phys. 2017 Feb;44(2):533-546. doi: 10.1002/mp.12079.

[7] Lin M, Chen JH, Wang X, Chan S, Chen S, Su MY. Template-based automatic breast segmentation on MRI by excluding the chest region. Med Phys. 2013 Dec;40(12):122301. doi: 10.1118/1.4828837.

[8] Lin M, Chan S, Chen JH, Chang D, Nie K, Chen ST, Lin CJ, Shih TC, Nalcioglu O, Su MY. A new bias field correction method combining N3 and FCM for improved segmentation of breast density on MRI. Med Phys. 2011 Jan;38(1):5-14.

[9] Chang PD. Fully Convolutional Deep Residual Neural Networks for Brain Tumor Segmentation. In: Crimi A, Menze B, Maier O, Reyes M, Winzeck S, Handels H (eds). Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries. BrainLes 2016. Lecture Notes in Computer Science, vol 10154. Springer, Cham; 2016. DOI: https://doi.org/10.1007/978-3-319-55524-9_11

[10] Kingma D, Ba J. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980. 2014 Dec 22.

Figures

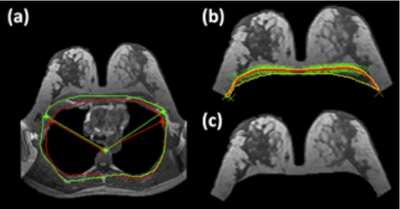

Figure 1: The template-based breast segmentation. (a) A chest template is generated from a subject, with three body landmarks: the thoracic spine and the lateral margins of the bilateral pectoralis muscles (contour shown in green). This chest template is co-registered to each subject’s chest region to obtain a subject-specific chest model, and the three mapped landmarks are used to perform the initial V-shape cut to define the lateral boundaries (contour shown in red, used to exclude the chest region on this slice). (b) Chest-wall muscle is identified using computer algorithms and excluded. (c) The final segmented breast. Detailed methods are described in [7].

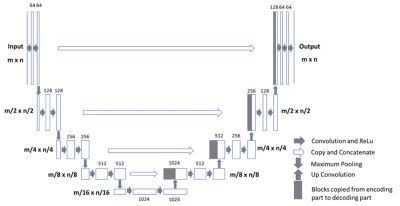

Figure 2: Architecture of the Fully-convolutional Residual Neural Network (FCR-NN) [6]. The input of the network is the normalized image and the output is the probability map of the segmentation result. This “U-net” consists of convolution and max-pooling layers in the descending phase (the initial part of the U), which can be seen as the down-sampling stage. In the ascending part of the U, up-sampling operations are performed; these are also implemented by convolutions, whose kernel weights are learned during training. The arrows between the two parts show how the information available at the down-sampling steps is incorporated into the up-sampling operations performed in the ascending part of the network.

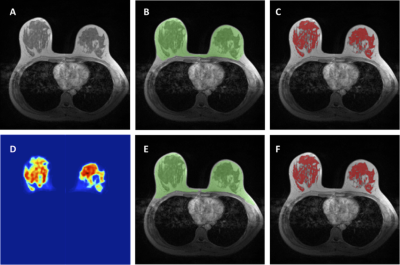

Figure 3: An example illustrating satisfactory segmentation results. For breast segmentation, DSC is 0.89 and accuracy is 0.93. For fibroglandular tissue segmentation, DSC is 0.82 and accuracy is 0.79. A: The original T1-weighted, non-fat-suppressed image. B: The breast segmentation result from template-based segmentation, shown in green. C: The fibroglandular tissue segmentation result within the breast obtained by K-means clustering after bias-field correction (shown in red). D: The fibroglandular tissue probability map generated by the FCR-NN. E: The breast segmentation result from the FCR-NN (green). F: The fibroglandular tissue segmentation result from the FCR-NN (red).

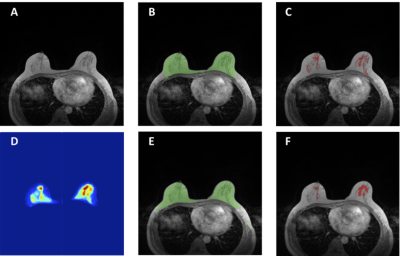

Figure 4: An example illustrating the limitation of fibroglandular tissue segmentation in fatty breasts using the neural network. For breast segmentation, DSC is 0.83 and accuracy is 0.94. For fibroglandular tissue segmentation, DSC is 0.23 and accuracy is 0.77. A: The original T1-weighted, non-fat-suppressed image. B: The breast segmentation result from template-based segmentation, shown in green. C: The fibroglandular tissue segmentation result within the breast obtained by K-means clustering after bias-field correction (shown in red). D: The fibroglandular tissue probability map generated by the FCR-NN. E: The breast segmentation result from the FCR-NN (green). F: The fibroglandular tissue segmentation result from the FCR-NN (red). In this case, the fibroglandular tissue is under-estimated, especially in the right breast.

Figure 5: The Pearson correlation between the percent density calculated from the ground truth segmentation and from the segmentation generated by the neural network. (A): The correlation for all 289 subjects, with correlation coefficient r = 0.9 (R² = 0.81). The scatter is clearly larger in the low-density cases. The red unity line is shown as the reference, indicating that the neural network yields a higher density than the ground truth. (B): The correlation in fatty breasts with percent density ≤ 0.15. The correlation coefficient is r = 0.76 (R² = 0.57), which is much worse than the correlation in all subjects.
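The percent density comparison summarized in Figure 5 can be sketched as follows. The abstract does not spell out the formula, so this example assumes percent density is the fraction of breast voxels labelled as fibroglandular tissue (consistent with the 0.15 cutoff being expressed as a fraction). The function names are hypothetical, and the Pearson coefficient is written out explicitly rather than taken from a statistics library.

```python
import numpy as np


def percent_density(fgt_mask, breast_mask):
    """Assumed definition: fibroglandular voxels divided by breast voxels."""
    breast = np.asarray(breast_mask, dtype=bool)
    # Only count fibroglandular voxels that lie inside the breast mask.
    fgt = np.asarray(fgt_mask, dtype=bool) & breast
    n_breast = breast.sum()
    if n_breast == 0:
        raise ValueError("breast mask is empty")
    return float(fgt.sum() / n_breast)


def pearson_r(x, y):
    """Pearson correlation coefficient between two 1-D sequences."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc = x - x.mean()
    yc = y - y.mean()
    return float((xc * yc).sum() / np.sqrt((xc ** 2).sum() * (yc ** 2).sum()))
```

In use, `percent_density` would be evaluated once per subject for the ground-truth masks and once for the network masks, and the two resulting lists passed to `pearson_r`. Restricting both lists to subjects whose ground-truth density is at most 0.15 would correspond to the fatty-breast subset in panel (B).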