1652

A supervised learning approach for diffusion MRI quality control with minimal training data

¹Centre for Medical Image Computing & Department of Computer Science, University College London, London, United Kingdom

Synopsis

Quality control (QC) in diffusion-weighted MRI (DW-MRI) involves identifying problematic volumes in datasets. The current gold standard is time-consuming manual inspection of the data, and even supervised learning techniques that aim to replace it require manually labelled datasets for training. In this work we show that the need for manual labelling can be greatly reduced by training a supervised classifier on realistic simulated data and using a small amount of labelled data for a final calibration step. Such an approach may have applications in other image analysis tasks where labelled datasets are expensive or difficult to acquire.

Introduction

Quality control (QC) is a key part of the processing pipeline in DW-MRI. The gold standard is visual inspection of the data, but this can be extremely time-consuming. It has recently been shown [1] that supervised learning approaches based on convolutional neural networks (CNNs) can achieve human-level accuracy on the key task of identifying motion-corrupted volumes, but this approach still requires a subset of the datasets to be manually QCed for use as training data. In this work, we show that the need for manual labelling can be greatly reduced by training a classifier to detect motion artefacts in simulated data and using a small amount of real data for calibration.

Methods

Data - real

Ten real subjects were taken from the developing Human Connectome Project (dHCP) [2], a dataset that contains large amounts of intra-volume movement. We removed the b = 2600 s/mm² volumes because they contained very little signal, making QC impractical; this left 172 volumes per subject. Manual QC was performed by visual inspection, with one rater assigning a label to each volume: 0 for acceptable, 1 for intra-volume movement. As in [1], seven subjects were used as training and validation data, and three were reserved for testing.
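Dropping a shell is a simple mask over the b-value vector. The sketch below assumes the data are loaded as a 4D NumPy array with volumes on the last axis and an FSL-style list of b-values; `drop_shell` is a hypothetical helper, and the ±50 s/mm² tolerance (to absorb slightly rounded scanner b-values) is an assumption, not part of the original protocol.

```python
import numpy as np


def drop_shell(data, bvals, b_drop=2600.0, tol=50.0):
    """Remove every volume whose b-value is within `tol` s/mm^2 of `b_drop`.

    data  : 4D array of shape (x, y, z, n_volumes)
    bvals : 1D array of length n_volumes, in s/mm^2
    """
    bvals = np.asarray(bvals, dtype=float)
    # True for volumes we keep; the tolerance is an assumed value
    keep = np.abs(bvals - b_drop) > tol
    return data[..., keep], bvals[keep]
```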

Data – simulated

We used a realistic DW-MR simulator [3] based on the POSSUM software [4,5]. Data were simulated for seven subjects using the dHCP protocol. For each subject, a motion trace was fed to the simulator, producing volumes corrupted by intra-volume movement. A volume was labelled as containing motion if the subject moved more than 2.5 mm along any axis or rotated more than 2.5° about any axis during the simulation.
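The labelling rule can be sketched as a check on each volume's motion trace. This is a minimal illustration under stated assumptions: the trace for one volume is an array with columns (tx, ty, tz) in mm and (rx, ry, rz) in degrees, and "moved" is measured relative to the pose at the first time point of that volume. Neither the column layout nor the reference pose is specified in the abstract, and `motion_label` is a hypothetical name.

```python
import numpy as np


def motion_label(trace, trans_mm=2.5, rot_deg=2.5):
    """Return 1 if a volume's motion exceeds either limit, else 0.

    trace : array of shape (n_timepoints, 6); columns assumed to be
            (tx, ty, tz) in mm followed by (rx, ry, rz) in degrees.
    """
    trace = np.asarray(trace, dtype=float)
    # Assumption: motion is measured relative to the first time point
    disp = np.abs(trace - trace[0])
    moved = np.any(disp[:, :3] > trans_mm)   # translation along any axis
    rotated = np.any(disp[:, 3:] > rot_deg)  # rotation about any axis
    return int(moved or rotated)
```

If the simulator's traces are instead expressed relative to a fixed reference pose, dropping the `- trace[0]` term would give the alternative reading of the rule.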

Classifier

Our classifier is a streamlined version of that described in [1]. We took the pre-trained InceptionV3 network [6] and fine-tuned it by removing its top layer and replacing it with a fully connected layer of 16 neurons, followed by a prediction layer. All parameters apart from those in the newly added layers were frozen during training. The classifier was trained on sagittal slices from each volume. Two classifiers were trained, one on real data and one on simulated data, in both cases using five subjects for training and two for validation. Each classifier was trained for 30 epochs using the Adam optimizer with a learning rate of 0.001.
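A minimal Keras sketch of this architecture, assuming TensorFlow 2.x. Several details are not given in the abstract and are assumptions here: global average pooling after the truncated backbone, a ReLU activation on the 16-unit layer, a single sigmoid output trained with binary cross-entropy, and 299 × 299 × 3 inputs (grey-scale sagittal slices replicated across three channels). `build_classifier` is a hypothetical name.

```python
import tensorflow as tf


def build_classifier(input_shape=(299, 299, 3), weights="imagenet"):
    """InceptionV3 backbone without its top, plus a 16-unit dense layer and a
    single-unit prediction layer; only the new layers are trainable."""
    # include_top=False drops InceptionV3's classification head;
    # pooling="avg" (an assumption) reduces the final feature map to a vector
    base = tf.keras.applications.InceptionV3(
        include_top=False, weights=weights, input_shape=input_shape, pooling="avg"
    )
    base.trainable = False  # freeze every pre-trained parameter

    inputs = tf.keras.Input(shape=input_shape)
    x = base(inputs, training=False)  # keep batch-norm statistics fixed
    x = tf.keras.layers.Dense(16, activation="relu")(x)  # assumed activation
    outputs = tf.keras.layers.Dense(1, activation="sigmoid")(x)  # per-slice motion score
    model = tf.keras.Model(inputs, outputs)

    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Training would then be a call such as `model.fit(train_slices, train_labels, validation_data=(val_slices, val_labels), epochs=30)`, where the slice arrays and label vectors are hypothetical placeholders for the five training and two validation subjects.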

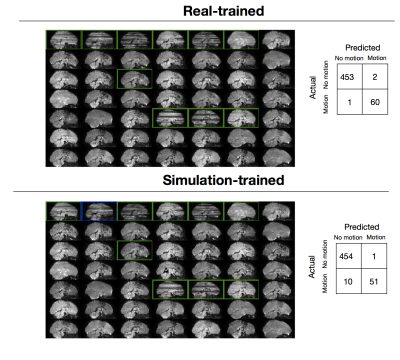

Testing was performed on the three reserved dHCP subjects. To assign a label to each volume, 30 sagittal slices were extracted from the volume and classified; if the mean of these 30 scores was greater than a threshold, the volume was labelled as containing motion. For the real-trained classifier we used the natural threshold of 0.5. Because of the shift from the simulated to the real domain, the threshold for the simulation-trained classifier had to be calibrated using a single subject from the real training set: the threshold that maximized the F1-score between the true and predicted labels for this subject was chosen for use at test time.
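The volume-level decision and the threshold calibration can be sketched with NumPy alone. This assumes the per-slice CNN scores are already available as an array of shape (n_volumes, 30); which 30 slices are taken is not specified in the abstract and is left to the caller. The function names are hypothetical, and the search grid of candidate thresholds (0.00 to 0.99 in steps of 0.01) is an assumption.

```python
import numpy as np


def volume_scores(slice_scores):
    """Average the per-slice motion scores of each volume.

    slice_scores : array of shape (n_volumes, n_slices), e.g. n_slices = 30
    """
    return np.asarray(slice_scores, dtype=float).mean(axis=1)


def label_volumes(slice_scores, threshold=0.5):
    """1 = intra-volume motion, 0 = acceptable."""
    return (volume_scores(slice_scores) > threshold).astype(int)


def f1(y_true, y_pred):
    """F1-score of binary labels, treating 1 (motion) as the positive class."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    tp = np.sum(y_true & y_pred)
    fp = np.sum(~y_true & y_pred)
    fn = np.sum(y_true & ~y_pred)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def calibrate_threshold(slice_scores, y_true, candidates=None):
    """Pick the threshold that maximises F1 on one labelled calibration subject."""
    if candidates is None:
        # Hypothetical search grid: 0.00, 0.01, ..., 0.99
        candidates = np.round(np.arange(0.0, 1.0, 0.01), 2)
    scores = volume_scores(slice_scores)
    f1s = [f1(y_true, scores > t) for t in candidates]
    # np.argmax returns the first (lowest) threshold among ties
    return float(candidates[int(np.argmax(f1s))])
```

At test time the calibrated value simply replaces 0.5 in `label_volumes(test_scores, threshold)`.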

Results

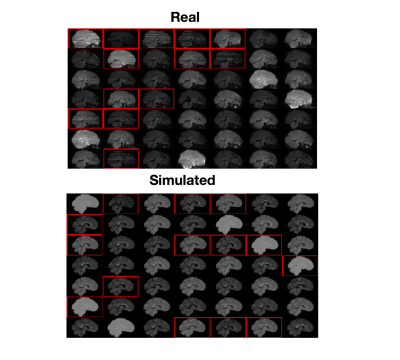

Figure 1 shows both real and simulated training data along with their labels. Results for the trained classifiers on the test set are shown in Figure 2. The real-trained classifier achieved a precision of 97% and a recall of 98% for classification of corrupted volumes, comparable to the state-of-the-art results reported in [1]. Intra-rater agreement on the test set was 99%, showing that this classifier approaches human-level performance. The simulation-trained classifier achieved a precision of 98% and a recall of 84%.
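For reference, the metrics quoted here, and the F1-score used for threshold calibration, are assumed to follow the standard definitions, with motion-corrupted volumes as the positive class (TP, FP and FN are true positives, false positives and false negatives):

```latex
\mathrm{precision} = \frac{TP}{TP + FP}, \qquad
\mathrm{recall} = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2\,\mathrm{precision}\cdot\mathrm{recall}}{\mathrm{precision} + \mathrm{recall}}
    = \frac{2\,TP}{2\,TP + FP + FN}
```

As a worked check using the rounded percentages above, the real-trained classifier's F1 is about 2(0.97)(0.98)/(0.97 + 0.98) ≈ 0.975, and the simulation-trained classifier's is about 2(0.98)(0.84)/(0.98 + 0.84) ≈ 0.905. The gap comes almost entirely from recall.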

We investigated how sensitive the simulation-trained classifier was to the choice of real subject used for threshold calibration by determining the optimal threshold for each of the seven real subjects in the training set. Optimal thresholds ranged from 0.95 to 0.98, leading to precision of 90–98% and recall of 72–85%.

Discussion

Our simulation-trained classifier showed precision similar to that of the real-trained classifier, though with reduced sensitivity (recall). It required just one real subject for threshold calibration, compared with the seven required to train the real-trained classifier, representing a large reduction in the amount of manual QC required. The false negatives are mostly examples that fall outside the range of simulated artefacts (very large signal dropouts, or signal dropout with few other obvious signs of motion), and future work will improve the simulated data to ensure these cases are not missed. Future work will also incorporate domain-adaptation techniques [7,8] to remove the need for real data for threshold calibration.

Conclusion

We demonstrate that supervised learning approaches for data QC can be trained on simulated, rather than manually labelled, data. This is likely to have many applications in other image analysis tasks where supervised learning performs well but training data are expensive or difficult to obtain.

References

[1] Christopher Kelly, Max Pietsch, Serena Counsell, and J-Donald Tournier. Transfer learning and convolutional neural net fusion for motion artefact detection. Proc. Intl. Soc. Mag. Reson. Med., pages 1–2, 2016.

[2] Emer J Hughes, Tobias Winchman, Francesco Padormo, Rui Teixeira, Julia Wurie, Maryanne Sharma, Matthew Fox, Jana Hutter, Lucilio Cordero-Grande, Anthony N Price, et al. A dedicated neonatal brain imaging system. Magnetic Resonance in Medicine, 2016.

[3] M.S. Graham, I. Drobnjak, and H. Zhang. Realistic simulation of artefacts in diffusion MRI for validating post-processing correction techniques. NeuroImage, 125:1079–1094, 2016.

[4] I Drobnjak, D Gavaghan, E Süli, J Pitt-Francis and M Jenkinson. Development of an FMRI Simulator for Modelling Realistic Rigid-Body Motion Artifacts. Magnetic Resonance in Medicine, 56(2), 364-380, 2006.

[5] I Drobnjak, G Pell and M Jenkinson. Simulating the effects of time-varying magnetic fields with a realistic simulated scanner. Magnetic Resonance Imaging, 28(7), 1014-21, 2010.

[6] Christian Szegedy, Vincent Vanhoucke, Sergey Ioffe, Jonathon Shlens, and Zbigniew Wojna. Rethinking the Inception Architecture for Computer Vision. arXiv preprint, 2015.

[7] K. Kamnitsas et al. Unsupervised domain adaptation in brain lesion segmentation with adversarial networks. IPMI, 2017.

[8] K. Bousmalis et al. Using Simulation and Domain Adaptation to Improve Efficiency of Deep Robotic Grasping. arXiv preprint, 2017.
