1481

Marker-less co-registration of MRI data to a subject’s head via a mixed reality device

¹Stanford, Stanford, CA, United States

Synopsis

Many medical applications, such as brain surgery or stimulation, require the clinician to identify an internal target location. Mixed reality see-through displays that show a holographic brain MRI superimposed on a subject’s head can help clinicians identify such targets, but they require tracking methods that keep the hologram aligned with the subject’s head as the head moves. We present a method for marker-less tracking of a subject’s head using a depth-sensing camera. The method tracks facial features and sends position and rotation information to a see-through display, which updates the position of the MRI holograms in space.

Introduction

Many medical applications, such as brain surgery or stimulation, require the clinician to identify an internal target location. This can be achieved by locating the target in the individual’s brain MRI and relating its position to externally visible landmarks. Mixed reality see-through displays such as the Microsoft Hololens make it possible to project volumetric MRI data directly as holograms overlaid on the subject in the real world, facilitating targeting by letting the clinician “look into” the patient [1]. Aligning holograms with real-world objects has traditionally required estimating the object’s pose from well-defined markers attached to it. Here we present a method that instead measures head pose from the world coordinates of facial features. Because the Hololens room-tracking depth stream does not have sufficient resolution for accurate face tracking, we attached an external RGBD camera to the Hololens and computed the coordinate transformation between the RGBD camera and the Hololens.

Methods

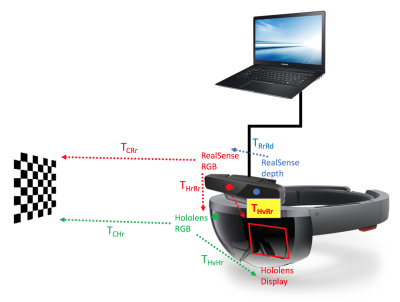

An Intel RealSense SR300 RGBD (red-green-blue-depth) camera was attached to the Microsoft Hololens and connected via USB to an external laptop (Fig. 1). The room-tracking infrared lasers of the Hololens were covered to minimize interference with the RealSense depth camera (Fig. 2). We developed custom software that uses the Intel RealSense SDK to track the world coordinates of a subject’s facial features and uses these coordinates to update the pose of a holographic head rendering in the Hololens display, according to the following steps:

- Calibration 1: Perform a camera calibration to find the transformation from RealSense camera space to Hololens display space (Fig. 1). Following [2], this transformation was computed as $T_{H_vR_r} = T_{H_vH_r}\,T_{H_rR_r}$, where $T_{H_vH_r}$ (provided by the Hololens API) is the transformation from the Hololens RGB camera to the Hololens virtual camera (the user’s point of view in the virtual world). $T_{H_rR_r}$ was measured by calibrating the RealSense RGB camera and the Hololens RGB camera with a checkerboard placed at 12 locations in the room, each fully visible to both cameras, and was then obtained as $T_{H_rR_r} = (T_{CH_r})^{-1}\,T_{CR_r}$, where $T_{CH_r}$ and $T_{CR_r}$ are the Hololens and RealSense camera calibration matrices.

- Calibration 2: Manually align the location of the rotation axes in the holographic head to match the rotation axes in the real head.

- Tracking: Measure the pose of the real head with the RealSense camera, in RealSense RGB camera space. This was done by tracking facial landmarks and estimating the pose of the facial-landmark point cloud. The transformation $T_{R_rR_d}$ from the RealSense depth camera to the RealSense RGB camera, needed to compute the landmark world coordinates, was provided by the RealSense API.

- Rendering: Transform the tracked pose to Hololens display space using the transformation measured in calibration step 1. The transformed values are then used to rotate and translate the holographic head about the rotation center adjusted in calibration step 2.
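The calibration, tracking, and rendering steps above can be sketched with 4×4 homogeneous transforms. The Python/NumPy code below is a minimal illustration under stated assumptions, not the authors’ software. It assumes that a transform written T_A_B maps points from frame B into frame A. For depth-pixel back-projection it uses a distortion-free pinhole model rather than the RealSense SDK’s own routine. Because the abstract does not say how the pose of the landmark point cloud is measured, it substitutes the Kabsch (SVD) least-squares rigid fit as an assumed stand-in. All function names, intrinsics, calibration poses, and synthetic landmarks are hypothetical.

```python
import numpy as np

# Assumed convention throughout: T_A_B is a 4x4 rigid transform that maps
# points expressed in frame B into frame A (so T_A_C = T_A_B @ T_B_C).


def homogeneous(R, t):
    """Pack a 3x3 rotation R and a 3-vector t into a 4x4 rigid transform."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def rot_z(deg):
    """Rotation matrix for `deg` degrees about the z axis."""
    a = np.radians(deg)
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def calibrate_realsense_to_display(T_C_Hr, T_C_Rr, T_Hv_Hr):
    """Calibration 1: T_Hv_Rr = T_Hv_Hr @ inv(T_C_Hr) @ T_C_Rr."""
    T_Hr_Rr = np.linalg.inv(T_C_Hr) @ T_C_Rr
    return T_Hv_Hr @ T_Hr_Rr


def deproject_to_rgb(u, v, depth, fx, fy, cx, cy, T_Rr_Rd):
    """Tracking, part 1 (assumed pinhole model, no lens distortion): back-project
    depth pixel (u, v) into the depth camera frame, then map it into RGB space."""
    p_depth = np.array([(u - cx) * depth / fx, (v - cy) * depth / fy, depth, 1.0])
    return (T_Rr_Rd @ p_depth)[:3]


def kabsch(ref, obs):
    """Tracking, part 2 (assumed method): least-squares rigid fit obs ~ R @ ref + t.

    ref, obs: (N, 3) arrays of corresponding facial-landmark positions.
    """
    ref_c, obs_c = ref.mean(axis=0), obs.mean(axis=0)
    H = (ref - ref_c).T @ (obs - obs_c)       # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))    # flip sign to exclude reflections
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, obs_c - R @ ref_c


def motion_in_display(T_Hv_Rr, R, t):
    """Rendering, part 1: express a rigid motion measured in RealSense RGB
    space in Hololens display space by conjugation with T_Hv_Rr."""
    M = T_Hv_Rr @ homogeneous(R, t) @ np.linalg.inv(T_Hv_Rr)
    return M[:3, :3], M[:3, 3]


def pivot_transform(R, t, pivot):
    """Rendering, part 2: rotate by R about `pivot` (the rotation center set in
    calibration step 2), then translate by t:  x -> R (x - pivot) + pivot + t."""
    return homogeneous(R, pivot + t - R @ pivot)


if __name__ == "__main__":
    # Hypothetical calibration poses: RealSense RGB camera 3 cm from the
    # Hololens RGB camera along x; T_Hv_Hr set to identity for simplicity.
    T_Hv_Rr = calibrate_realsense_to_display(
        homogeneous(np.eye(3), [0.0, 0.0, 1.0]),
        homogeneous(np.eye(3), [0.03, 0.0, 1.0]),
        np.eye(4),
    )
    print("RealSense RGB -> display translation (m):", T_Hv_Rr[:3, 3])

    # Synthetic landmarks (metres), rotated 20 deg about z and shifted.
    rng = np.random.default_rng(0)
    ref = rng.normal(scale=0.05, size=(68, 3))
    obs = ref @ rot_z(20.0).T + np.array([0.01, -0.02, 0.40])
    R_est, t_est = kabsch(ref, obs)
    R_d, t_d = motion_in_display(T_Hv_Rr, R_est, t_est)
    print("recovered yaw (deg):", np.degrees(np.arctan2(R_d[1, 0], R_d[0, 0])))
```

Conjugation is used in `motion_in_display` so that the same physical motion is described in display coordinates. With the rotation-free, 3 cm calibration in the demo, the tracked rotation is unchanged and only its translation term shifts. The pivot form also suggests why calibration step 2 matters. Rotating by R about a center that is off by a vector d misplaces every hologram point by (I − R)d. That error has length 2|d| sin(θ/2) for a rotation by θ about an axis perpendicular to d. For a hypothetical 9 cm offset and a 20° head turn, the error is roughly 3.1 cm.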

Results

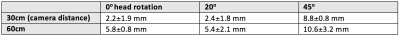

The transformation measured in calibration step 1 consisted of a 3 cm translation from the RealSense RGB camera to Hololens display space. In calibration step 2, the center of the rotation axes of the holographic head was moved 9 cm from the center of mass of the head model toward the back of the head, to where the head meets the spine. Figures 3a and 3b show, respectively, a surface rendering of the head overlaid on the subject as viewed through the Hololens, and a volumetric rendering that allows visualization of the brain. Latency of the head-hologram pose update was less than 50 ms and was noticeable only during quick head movements. The measurements in Table 1 quantify how the distance accuracy of the head tracking depends on (1) camera distance and (2) head rotation with respect to the camera.

Discussion & Conclusion

We have presented a method that updates an MRI rendering of a subject’s head in real time by performing marker-less tracking with an RGBD camera. Instead of estimating pose from the facial-landmark point cloud, one could use a probabilistic model that generates pose hypotheses and evaluates them against the observed RGBD data, provided the shape of the head is known [3]. Further measurements of head-to-hologram accuracy, which depends on the perception of the individual user, still need to be performed. To improve this display accuracy, an individual tracker-based calibration procedure such as the one presented in [4] is needed to minimize the perception error for each user. A stick PC connected to the RealSense camera could make the device completely wireless, increasing the user’s freedom of movement. Our method for projecting and tracking brain MRI holograms on a subject based on facial feature tracking will in future give clinicians a flexible and simple way to localize internal targets by eye.

Acknowledgements

No acknowledgement found.

References

[1] Leuze C, et al. Holographic visualization of MRI data aligned to the human subject. ISMRM 2017.

[2] Garon M, et al. Real-time High Resolution 3D Data on the HoloLens. ISMAR 2016.

[3] Ren C, et al. STAR3D: Simultaneous Tracking And Reconstruction of 3D Objects Using RGB-D Data. IEEE International Conference on Computer Vision (ICCV) 2013.

[4] Qian L, et al. Comprehensive Tracker Based Display Calibration for Holographic Optical See-Through Head-Mounted Display. arXiv 2017.