1175

Correction of motion artifacts using a multi-resolution fully convolutional neural network

1Philips GmbH Innovative Technologies, Hamburg, Germany, 2Philips Radiology Solutions, Seattle, WA, United States, 3Department of Radiology, University of Washington, Seattle, WA, United States

Synopsis

Motion artifacts are a frequent source of image degradation in clinical practice. Here we demonstrate the feasibility of correcting motion artifacts in magnitude-only MR images using a multi-resolution fully convolutional neural network. Training and testing datasets were generated using artificially created artifacts introduced onto in vivo clinical brain scans. Both the corrupted input and filtered output images were rated by an experienced neuroradiologist.

Introduction

Methods

Training of the fully convolutional neural network (FCN) was accomplished using a dataset with artificially created artifacts introduced into in vivo clinical brain scans. Generation of the training and testing datasets was based on 16 T2-weighted (multi-2D spin echo, magnitude data only) whole-brain scans obtained in 16 patients. Following informed patient consent, all data were anonymized, and all images were deemed artifact-free by an experienced reviewing neuroradiologist.

Artifacts simulating bulk translational motion were introduced into each slice via an additional phase applied to the Fourier-transformed data. Three different translational trajectories (sudden, oscillating, and continuous motion) were simulated with amplitudes in the range of 2-12 pixels (0.9-5.4 mm). Artifacts due to bulk rotational motion were simulated for each slice by replacing parts of the Fourier-transformed input with the Fourier transform of a rotated version of the input, where the axis of rotation was the image center. Two different rotational trajectories (sudden and oscillating motion) were simulated with amplitudes in the range of 1.0° to 2.5°. To increase the variability of the data, augmentation by random deformation, translation, and rotation was applied to the artifact-free input images.

As suggested for undersampling artifacts [2], artifact-only images were obtained by subtracting the input images from the artifact-corrupted images and were used as the target for the FCN. The training dataset was generated from 14 of the 16 whole-brain scans, resulting in 91,492 image pairs (artifact-corrupted and artifact-only images). The testing dataset was derived from the 2 remaining whole-brain scans and consisted of 115 image pairs (no data augmentation).
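The artifact simulation described above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names (`trajectory`, `simulate_translation`, `simulate_rotation`), the `onset` and `cycles` parameters, the assumption that k-space rows are acquired sequentially from one edge to the other, and the circular wrap-around at the image border are all hypothetical choices. The rotated image is supplied by the caller, for example from an interpolating rotation about the image center.

```python
import numpy as np


def trajectory(kind, n_rows, amplitude, onset=0.5, cycles=3.0):
    """Displacement at the time each k-space row is acquired (assumed shapes)."""
    t = np.arange(n_rows) / n_rows  # normalized acquisition time in [0, 1)
    if kind == "sudden":
        return amplitude * (t >= onset)  # step at the assumed onset time
    if kind == "oscillating":
        return amplitude * np.sin(2 * np.pi * cycles * t)
    if kind == "continuous":
        return amplitude * t  # linear drift
    raise ValueError(f"unknown trajectory: {kind}")


def simulate_translation(image, dy, dx):
    """Apply a per-row linear phase in k-space (Fourier shift theorem).

    dy, dx: scalars or arrays of length ny giving the displacement in pixels
    while each k-space row was acquired.
    """
    ny, nx = image.shape
    kspace = np.fft.fftshift(np.fft.fft2(image))
    fy = np.fft.fftshift(np.fft.fftfreq(ny))[:, None]  # cycles per pixel
    fx = np.fft.fftshift(np.fft.fftfreq(nx))[None, :]
    dy = np.broadcast_to(np.asarray(dy, dtype=float), (ny,))[:, None]
    dx = np.broadcast_to(np.asarray(dx, dtype=float), (ny,))[:, None]
    phase = np.exp(-2j * np.pi * (fy * dy + fx * dx))
    # Magnitude only, matching the magnitude-only data used in the abstract.
    return np.abs(np.fft.ifft2(np.fft.ifftshift(kspace * phase)))


def simulate_rotation(image, rotated, moved_rows):
    """Replace the k-space rows flagged in `moved_rows` with rows taken from
    the Fourier transform of `rotated` (the same slice, rotated by the caller)."""
    k_still = np.fft.fftshift(np.fft.fft2(image))
    k_moved = np.fft.fftshift(np.fft.fft2(rotated))
    mask = np.asarray(moved_rows, dtype=bool)[:, None]
    mixed = np.where(mask, k_moved, k_still)
    return np.abs(np.fft.ifft2(np.fft.ifftshift(mixed)))


# Usage on a random stand-in image (not study data).
rng = np.random.default_rng(0)
clean = rng.random((64, 64))
dy = trajectory("sudden", clean.shape[0], amplitude=4.0)
corrupted = simulate_translation(clean, dy, 0.0)
artifact_only = corrupted - clean  # regression target for the network
```

A constant integer displacement reduces to a circular shift of the image, which gives a simple sanity check for the sign convention of the phase term.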

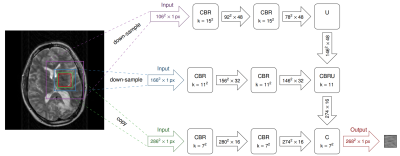

The FCN was implemented in the Microsoft Cognitive Toolkit (CNTK) framework, with the structure depicted in Fig. 1. It relied on a multi-resolution approach in which two down-sampled variants of the input image were used as additional inputs to the network. Each resolution level consisted of two convolutional layers, each followed by batch normalization and a rectified linear unit. The different levels were then combined using average-unpooling and shortcut connections. The network was trained to minimize the mean squared error between predicted and simulated motion artifacts. Training was carried out over 32 epochs using the Adam optimization method [3].
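The multi-resolution inputs and the training loss can be illustrated with a short NumPy sketch. It is not the CNTK network itself: the down-sampling factor of 2, the use of 2x2 average pooling to build the coarser inputs, and the nearest-neighbour `upsample2` (a simple stand-in for the average-unpooling step) are assumptions, and all function names are hypothetical.

```python
import numpy as np


def average_pool2(x):
    """2x2 average pooling; height and width must be even."""
    h, w = x.shape
    return x.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


def upsample2(x):
    """Copy each value into a 2x2 block (assumed stand-in for unpooling)."""
    return np.repeat(np.repeat(x, 2, axis=0), 2, axis=1)


def multi_resolution_inputs(image, levels=3):
    """Full-resolution image plus (levels - 1) down-sampled variants."""
    pyramid = [image]
    for _ in range(levels - 1):
        pyramid.append(average_pool2(pyramid[-1]))
    return pyramid


def mse(predicted, target):
    """Mean squared error between predicted and simulated artifact images."""
    return float(np.mean((predicted - target) ** 2))


# Usage on a stand-in image: three inputs at 64x64, 32x32, and 16x16.
image = np.arange(64 * 64, dtype=float).reshape(64, 64)
inputs = multi_resolution_inputs(image)
shapes = [level.shape for level in inputs]
```

In a network of this kind, features computed at the coarser levels would be brought back to the finer grid before being merged through the shortcut connections; `upsample2` only shows the change of grid size.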

The trained network was subsequently applied to the testing dataset (2 remaining whole-brain scans): for each image, the network’s estimate of the artifacts was subtracted from the artifact-corrupted input. All artifact-corrupted input and artifact-filtered output images (230 in total) were rated by the neuroradiologist using a previously defined clinical five-point modified Likert scale (0: no artifact, 4: severe motion artifact) [4].
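The filtering step amounts to a per-image subtraction, sketched below. The names `remove_artifact` and `filter_stack` are hypothetical, and `predict_artifact` is a placeholder for the trained network: any callable that maps a corrupted image to an artifact estimate of the same shape.

```python
import numpy as np


def remove_artifact(corrupted, predict_artifact):
    """Subtract the estimated artifact-only image from the corrupted input."""
    artifact_estimate = predict_artifact(corrupted)
    return corrupted - artifact_estimate


def filter_stack(stack, predict_artifact):
    """Apply the filter independently to every image in a stack of slices."""
    return np.stack([remove_artifact(s, predict_artifact) for s in stack])


# Toy check with a perfect ("oracle") predictor; not study data.
clean = np.ones((4, 4))
artifact = 0.1 * np.arange(16, dtype=float).reshape(4, 4)
corrupted = clean + artifact
restored = remove_artifact(corrupted, lambda image: artifact)
```

With a perfect artifact estimate the clean image is recovered exactly; with a real network, any error in the estimate remains in the output as residual artifact.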

Results

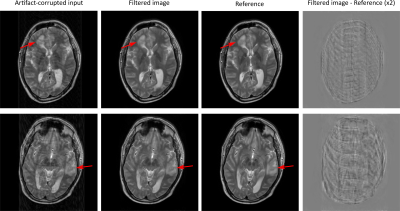

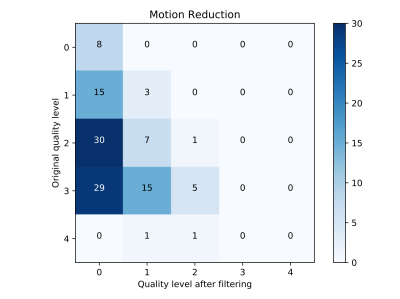

Exemplary artifact-corrupted input, artifact-filtered output, and original reference images of the testing dataset are depicted in Figure 2. In both cases, the filtered output images are virtually free of visible motion artifacts. Importantly, regional pathology (arrows) was unaltered by the FCN despite not being contained in the training dataset. The last column in Fig. 2 depicts the difference between the filtered and the reference images (note: increased contrast for improved visibility). While no clearly discernible anatomical structures are visible, a small amount of apparent artifact energy remained in the images after filtering. Quantitative neuroradiological ratings of the images are depicted as a confusion matrix in Figure 3. The network-based filtering improved the mean motion-artifact score, reducing it from 2.2 to 0.3, without any observed degradation in image quality.

Discussion

This contribution demonstrates the potential of deep learning in correcting for motion artifacts. The presented results support the recent hypothesis [2] that learning to mimic artifacts is effective at differentiating and removing those artifacts, while preserving underlying normal tissue structures. This approach appears to have the added benefit that pathological or anatomical structures that were not contained in the training dataset are not affected by the network-based filtering. The applicability of this technique to in vivo motion artifacts encountered during clinical imaging may be limited due to restrictions within the employed training dataset. Future work will include artifacts due to bulk through-plane motion and additional, complex physiological motion (swallowing, coughing).

Conclusion

Our work demonstrates the feasibility of correcting motion artifacts in magnitude-only MR images using a multi-resolution fully convolutional neural network.

Acknowledgements

No acknowledgement found.

References

1. Zaitsev M, et al. Motion artifacts in MRI: A complex problem with many partial solutions: Motion Artifacts and Correction. J Magn Reson Imaging 2015;42(4):887-901.

2. Lee D, et al. Compressed sensing and Parallel MRI using deep residual learning. Proc. Intl. Soc. Mag. Reson. Med. 25, #0641, 2017.

3. Kingma D, et al. Adam: A Method for Stochastic Optimization. Proc ICLR 2014.

4. Andre JB, et al. Toward Quantifying the Prevalence, Severity, and Cost Associated With Patient Motion During Clinical MR Examinations. J Am Coll Radiol 2015;12(7):689-695.

Figures