0795

MRI2MRI: A deep convolutional network that accurately transforms between brain MRI contrasts

1Ophthalmology, The University of Washington, Seattle, WA, United States, 2eScience Institute, The University of Washington, Seattle, WA, United States

Synopsis

Different brain MRI contrasts represent different tissue properties and are sensitive to different artifacts. The relationship between different contrasts is therefore complex and nonlinear. We developed a deep convolutional network that learns the mapping between different MRI contrasts. Using a publicly available dataset, we demonstrate that this algorithm accurately transforms between T1- and T2-weighted images, proton density images, time-of-flight angiograms, and diffusion MRI images. We demonstrate that these transformed images can be used to improve spatial registration between MR images of different contrasts.

Introduction

MRI creates images that are sensitive to different aspects of the tissue, and susceptible to different imaging artifacts. The relationships between different imaging contrasts are nonlinear and spatially- and tissue-dependent (1). This poses several difficulties in the interpretation of multi-modal MRI. For example, analysis that requires accurate registration of images into the same coordinate frame currently requires the use of algorithms that can match images with different contrasts (2). While this often works well, these algorithms can be over-sensitive to large, prominent features, such as edges of the tissue, and are more error-prone when images have low SNR, or represent very different features. Moreover, a better understanding of complementary information provided in different contrasts will allow better characterization of tissue properties.

We used a deep learning (DL) algorithm to learn the complex mappings between different MRI contrasts. DL has been successful in many different domains (3), and there are several previous applications of DL in biomedical imaging (4), including classification of disease states (5) and segmentation of tissue types (6), lesions (7), and tumors (8). DL algorithms that take an image as input and generate another image as output have been used for style transfer: retaining the content of an image while altering its style to match the style of another image (9). For example, an image of a summer scene can be used to synthesize the same scene in winter (10). Here, we rely on some of the principles underlying style transfer to transform brain MR images with one contrast into images of the same brain in other contrasts.

Methods

We used the IXI dataset (http://brain-development.org/ixi-dataset/), which provides T1w, T2w, PD, MRA, and DWI (16 directions at a b-value of 1000, plus one b=0) images for 567 healthy participants. A convolutional neural network was trained to learn the mapping between different MRI images in a training set (n=338). We used a U-net architecture (7) trained with a “perceptual loss” (9): the loss is evaluated on the activations of the first layer of a pretrained VGG16 network (11), which induces image similarity and prevents over-smoothing. Training used the Adam optimizer (learning rate: 0.0002) and was implemented using PyTorch (https://pytorch.org).
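
One way to write the first-layer perceptual loss described above is sketched below. It assumes the squared Euclidean feature distance of Johnson et al. (9); the exact distance and normalization used here are not stated, so the notation is illustrative only: $f_\theta$ denotes the U-net, $\phi_1$ the first-layer VGG16 activations (with $C \times H \times W$ elements), $x$ the input contrast, and $y$ the target contrast.

$$
\mathcal{L}(\theta) = \frac{1}{C H W} \left\lVert \phi_1\big(f_\theta(x)\big) - \phi_1(y) \right\rVert_2^2
$$

Because the comparison is made in feature space rather than directly on voxel intensities, the loss penalizes differences in local image structure, which is consistent with the stated aim of preventing over-smoothing.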

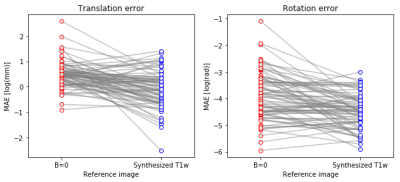

A group of participants (n=79), set aside as a test set (not shown to the algorithm during training), was used to evaluate registration. We used a state-of-the-art registration algorithm (12), implemented in DIPY (13), to register DWI b=0 images to the corresponding T1w images, using a mutual information metric to find the best affine transformation. To simulate participant motion, a known rotation and translation were applied to the b=0 image, and the T1w image was then registered to the b=0 image. For comparison, we also synthesized a T1w analog from the b=0 image using the DL network and used the synthesized image with the same registration algorithm. Registration errors were calculated relative to the known ground truth as mean absolute error (MAE), separately for the translation and rotation components of the registration.
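
The error measure can be illustrated with a minimal sketch. It assumes the applied and recovered transforms are each summarized by three translation and three rotation parameters; the function name, the units (mm and degrees), and all numeric values are hypothetical and are not taken from this study.

```python
from statistics import mean


def mean_absolute_error(estimated, truth):
    """Mean absolute difference between paired parameter values."""
    if len(estimated) != len(truth):
        raise ValueError("estimated and truth must have the same length")
    return mean(abs(e - t) for e, t in zip(estimated, truth))


# Hypothetical ground-truth motion applied to the b=0 image (assumed units).
true_translation_mm = (2.0, -1.5, 0.5)
true_rotation_deg = (3.0, 0.0, -2.0)

# Hypothetical parameters recovered by the registration.
est_translation_mm = (1.8, -1.2, 0.9)
est_rotation_deg = (2.5, 0.5, -2.0)

# Errors are computed separately for the two components.
translation_mae = mean_absolute_error(est_translation_mm, true_translation_mm)
rotation_mae = mean_absolute_error(est_rotation_deg, true_rotation_deg)

print(f"translation MAE: {translation_mae:.3f}")
print(f"rotation MAE: {rotation_mae:.3f}")
```

Keeping translation and rotation errors separate avoids mixing quantities with different units in a single number.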

Results

The DL algorithm learns the mapping between different contrasts (Figure 1). It can be trained for either one-to-one mappings (e.g., T1w => PD, Figure 1A, or T1w => T2w, Figure 1B), or many-to-one mappings (e.g., T1w + T2w + PD => MRA, Figure 1B). High accuracy is achieved by learning mappings both at the level of individual voxels and at the level of overall global structure. For example, the algorithm learns to disregard the ventricles in the mapping to MRA, even though the ventricles have pixel values in T1w, T2w and PD images similar to those of blood vessels.

The algorithm has practical application in the analysis of multi-modal MRI. The algorithm was trained to synthesize T1w images from the DWI b=0 images. On a separate set of subjects, not used during training, we demonstrate that registration of T1w to DWI b=0 is more accurate using a synthesized T1w intermediary. This effect is shown here as mean absolute error (MAE; Figure 2) and is consistent both for the translation component (Mann-Whitney U test, p < 10e-7) and for rotation (Mann-Whitney U test, p < 10e-4).
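
The kind of comparison reported above can be sketched in plain Python. This is an illustration only: the error values are hypothetical, the function names are not from the original analysis, and the p-value uses the normal approximation without tie or continuity correction, which is only a rough guide for small samples.

```python
import math


def mann_whitney_u(sample_a, sample_b):
    """U statistic for sample_a: pairs with a > b count 1, ties count 0.5."""
    u = 0.0
    for a in sample_a:
        for b in sample_b:
            if a > b:
                u += 1.0
            elif a == b:
                u += 0.5
    return u


def two_sided_p_normal_approx(u, n_a, n_b):
    """Two-sided p-value from the normal approximation to U (no corrections)."""
    mean_u = n_a * n_b / 2.0
    sd_u = math.sqrt(n_a * n_b * (n_a + n_b + 1) / 12.0)
    z = (u - mean_u) / sd_u
    return math.erfc(abs(z) / math.sqrt(2.0))


# Hypothetical per-subject translation errors (not data from this study).
errors_with_synthesized_t1w = [0.4, 0.6, 0.5, 0.3, 0.7, 0.2]
errors_direct = [1.1, 0.9, 1.4, 0.8, 1.2, 1.0]

u_stat = mann_whitney_u(errors_with_synthesized_t1w, errors_direct)
p_value = two_sided_p_normal_approx(
    u_stat, len(errors_with_synthesized_t1w), len(errors_direct)
)
print(f"U = {u_stat}, p = {p_value:.4f}")
```

A rank-based test of this kind makes no assumption that the registration errors are normally distributed, which suits error measures that are bounded below by zero.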

Discussion and Conclusion

We present here a deep learning algorithm that can accurately transform between different MRI contrasts. The algorithm can use as input either one image or more, allowing integration of information from several different contrasts, in generating an image contrast. We present an example where this algorithm can improve the analysis of multi-modal MRI data, by improving the ability to register images with different contrasts to each other, by means of an algorithm-synthesized intermediary image. These results demonstrate that the algorithm is capturing complex nonlinear and spatially-varying dependencies between MRI contrasts, suggesting that it could also be used to better understand these dependencies.

Acknowledgements

We would like to acknowledge the NVIDIA Corporation for their generous donation of graphics cards for the development of DL algorithms. This work was supported by an unrestricted research grant from Research to Prevent Blindness (AYL, SX, YW) and through a grant from the Gordon & Betty Moore Foundation and the Alfred P. Sloan Foundation to the University of Washington eScience Institute Data Science Environment (AR).

References

1. Vymazal J, Hajek M, Patronas N, Giedd JN, Bulte JW, Baumgarner C, Tran V, Brooks RA. The quantitative relation between T1-weighted and T2-weighted MRI of normal gray matter and iron concentration. J. Magn. Reson. Imaging 1995;5:554–560.

2. Klein A, Andersson J, Ardekani BA, et al. Evaluation of 14 nonlinear deformation algorithms applied to human brain MRI registration. Neuroimage 2009;46:786–802.

3. LeCun Y, Bengio Y, Hinton G. Deep learning. Nature 2015;521:436–444.

4. Greenspan H, van Ginneken B, Summers RM. Guest Editorial Deep Learning in Medical Imaging: Overview and Future Promise of an Exciting New Technique. IEEE Trans. Med. Imaging 2016;35:1153–1159.

5. Lee CS, Baughman DM, Lee AY. Deep learning is effective for the classification of OCT images of normal versus Age-related Macular Degeneration. Ophthalmology Retina 2017;1:322–327.

6. Perone CS, Calabrese E, Cohen-Adad J. Spinal cord gray matter segmentation using deep dilated convolutions. arXiv [cs.CV] [Internet] 2017.

7. Lee CS, Tyring AJ, Deruyter NP, Wu Y, Rokem A, Lee AY. Deep-learning based, automated segmentation of macular edema in optical coherence tomography. Biomed. Opt. Express 2017;8:3440–3448.

8. Pereira S, Pinto A, Alves V, Silva CA. Brain Tumor Segmentation using Convolutional Neural Networks in MRI Images. IEEE Trans. Med. Imaging [Internet] 2016. doi: 10.1109/TMI.2016.2538465.

9. Johnson J, Alahi A, Fei-Fei L. Perceptual Losses for Real-Time Style Transfer and Super-Resolution. In: Computer Vision – ECCV 2016. Lecture Notes in Computer Science. Springer, Cham; 2016. pp. 694–711.

10. Zhu J-Y, Park T, Isola P, Efros AA. Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks. arXiv [cs.CV] [Internet] 2017.

11. Simonyan K, Zisserman A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv [cs.CV] [Internet] 2014.

12. Avants BB, Epstein CL, Grossman M, Gee JC. Symmetric diffeomorphic image registration with cross-correlation: evaluating automated labeling of elderly and neurodegenerative brain. Med. Image Anal. 2008;12:26–41.

13. Garyfallidis E, Brett M, Amirbekian B, Rokem A, van der Walt S, Descoteaux M, Nimmo-Smith I, Dipy Contributors. Dipy, a library for the analysis of diffusion MRI data. Front. Neuroinform. 2014;8:8.

Figures