0607

Perceptual Accuracy of a Mixed-Reality System for MR-Guided Breast Surgical Planning in the Operating Room

¹Radiology, Stanford University, Stanford, CA, United States; ²Bioengineering, Stanford University, Stanford, CA, United States; ³Mechanical Engineering, Stanford University, Stanford, CA, United States; ⁴Surgery, Stanford University, Stanford, CA, United States; ⁵Electrical Engineering, Stanford University, Stanford, CA, United States

Synopsis

As many as one quarter of women in the United States who undergo lumpectomy for early-stage breast cancer have repeat surgery because of concern that residual tumor was left behind. We have developed a supine breast MRI protocol and a system that projects a 3D “hologram” of the MR data onto the patient using the Microsoft HoloLens. The goal is to reduce the number of repeat surgeries by improving surgeons’ ability to determine tumor extent. We are conducting a pilot study in patients with palpable tumors that tests a surgeon’s ability to accurately identify tumor location with mixed-reality visualization during surgical planning.

Introduction

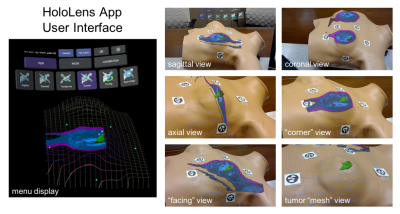

The most common initial treatment for a woman diagnosed with breast cancer is lumpectomy, which ideally removes only the tumor, with a negative margin¹. However, in as many as a quarter of cases, pathology shows a positive margin and the patient is recommended to undergo additional surgery². Our goal is to help surgeons remove the entire tumor in the first operation using augmented-reality visualization. Last year we reported a HoloLens application that aligns “holograms” derived from a preoperative supine MRI directly onto the patient (Figure 1), and we measured its 2D perceptual accuracy³. Here we evaluate how accurately our system predicts tumor location in patients with palpable tumors.

Methods

We are currently conducting a pilot study in ten breast cancer patients with palpable tumors to analyze the perceptual accuracy of our mixed-reality system; here we report data from five subjects.

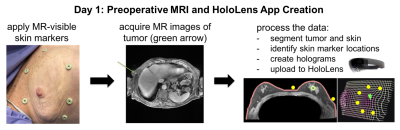

Preoperative MRI (Figure 2)

The day before surgery, six MR-visible fiducial markers (IZI Medical) are applied at different positions surrounding the breast, and a preoperative supine, breath-held, axial contrast-enhanced 3D SPGR scan is acquired on a GE Discovery MR750 3T scanner using an 8-channel cardiac coil. During post-processing, the locations of the fiducial markers are recorded, and the tumor and skin are segmented using ITK-SNAP⁴. The MR images, meshes of the segmented skin and tumor, and marker locations are imported into a Unity 3D project, which is then compiled and uploaded to the HoloLens as an “app”.
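Recording the fiducial locations amounts to converting voxel positions in the MR volume into scanner coordinates in millimeters. The sketch below is a minimal illustration, assuming each marker has been labeled as a small set of voxels and that the image geometry follows the ITK convention (physical point = origin + direction matrix × (spacing ⊙ index)). The helper names and the example geometry are hypothetical, and the further mapping into Unity’s left-handed, y-up, meter-based frame depends on the scene setup and is not shown.

```python
# Minimal sketch (assumption): map a fiducial marker's voxel location in the MR
# volume to scanner-space millimeters using the ITK image-geometry convention
#   physical = origin + direction @ (spacing * index)
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def index_to_physical(index: Sequence[float], origin: Sequence[float],
                      spacing: Sequence[float],
                      direction: Sequence[Sequence[float]]) -> Vec3:
    """Convert a (possibly fractional) voxel index to a physical point in mm."""
    scaled = [index[j] * spacing[j] for j in range(3)]
    x, y, z = (origin[i] + sum(direction[i][j] * scaled[j] for j in range(3))
               for i in range(3))
    return (x, y, z)


def marker_centroid(voxels: Sequence[Sequence[int]], origin: Sequence[float],
                    spacing: Sequence[float],
                    direction: Sequence[Sequence[float]]) -> Vec3:
    """Hypothetical helper: centroid of the voxels labeled as one marker.
    Because the index-to-physical mapping is affine, mapping the mean index
    gives the same point as averaging the mapped voxel positions."""
    n = len(voxels)
    mean_index = [sum(v[k] for v in voxels) / n for k in range(3)]
    return index_to_physical(mean_index, origin, spacing, direction)


# Example with made-up geometry: 0.7 x 0.7 x 1.0 mm voxels, identity direction.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
marker_voxels = [(100, 120, 40), (101, 120, 40), (100, 121, 40), (101, 121, 40)]
print(marker_centroid(marker_voxels, (-150.0, -150.0, -80.0), (0.7, 0.7, 1.0), identity))
```

With this invented geometry the marker centroid lands at about (-79.65, -65.65, -40.0) mm; a non-identity direction matrix (for example, one that flips the first two axes) is applied the same way.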

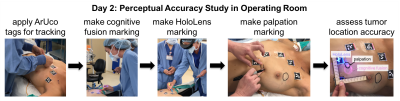

Surgical Planning (Figure 3)

On the day of surgery, the patient is positioned on the operating table, and surgeons draw markings of the tumor location as identified via three different techniques:

- The “cognitive fusion” marking is made by the first surgeon, who consults standard medical images of the patient on a conventional computer monitor to estimate tumor location without touching the patient.

- The “HoloLens” marking is made by the same surgeon after she dons the HoloLens and aligns the holograms to the patient. For the registration, ArUco tags are placed at the same locations as the MR-visible fiducial markers and are recognized via computer vision⁵.

- The “palpation” marking (ground truth) is made by a second surgeon who is blinded to the imaging information and estimates the tumor location through palpation.
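Because each ArUco tag sits where an MR-visible fiducial was, aligning the holograms can be framed as finding the rigid transform that best maps the six MR fiducial positions onto the tag positions detected in the HoloLens frame. The solver is not specified here, so the sketch below uses one standard choice, Horn’s closed-form quaternion method, with a small Jacobi eigen-solver to stay dependency-free. It assumes the tag positions have already been estimated in 3D, reports the root-mean-square fiducial registration error (FRE), and uses invented coordinates; it is not presented as the app’s actual implementation.

```python
# Sketch (assumption, not the authors' implementation): least-squares rigid
# registration of corresponding fiducials via Horn's quaternion method.
import math


def jacobi_eigen(a, max_sweeps=100):
    """Eigen-decompose a small symmetric matrix with cyclic Jacobi rotations.
    Returns (eigenvalues, V); column k of V is the eigenvector of eigenvalue k."""
    n = len(a)
    a = [row[:] for row in a]
    v = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off <= 1e-30 * sum(a[i][j] ** 2 for i in range(n) for j in range(n)):
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # A <- A P (columns p, q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # A <- P^T A (rows p, q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
                for k in range(n):  # V <- V P accumulates eigenvectors
                    vkp, vkq = v[k][p], v[k][q]
                    v[k][p], v[k][q] = c * vkp - s * vkq, s * vkp + c * vkq
    return [a[i][i] for i in range(n)], v


def rigid_register(src, dst):
    """Return (R, t) minimizing sum ||R @ src_i + t - dst_i||^2 (Horn, 1987)."""
    n = len(src)
    cs = [sum(p[i] for p in src) / n for i in range(3)]
    cd = [sum(q[i] for q in dst) / n for i in range(3)]
    S = [[0.0] * 3 for _ in range(3)]  # cross-covariance of centered points
    for p, q in zip(src, dst):
        for i in range(3):
            for j in range(3):
                S[i][j] += (p[i] - cs[i]) * (q[j] - cd[j])
    (sxx, sxy, sxz), (syx, syy, syz), (szx, szy, szz) = S
    N = [[sxx + syy + szz, syz - szy, szx - sxz, sxy - syx],
         [syz - szy, sxx - syy - szz, sxy + syx, szx + sxz],
         [szx - sxz, sxy + syx, -sxx + syy - szz, syz + szy],
         [sxy - syx, szx + sxz, syz + szy, -sxx - syy + szz]]
    vals, vecs = jacobi_eigen(N)
    k = max(range(4), key=lambda i: vals[i])  # largest eigenvalue -> best rotation
    w, x, y, z = (vecs[r][k] for r in range(4))  # unit quaternion
    R = [[w*w + x*x - y*y - z*z, 2 * (x*y - w*z), 2 * (x*z + w*y)],
         [2 * (x*y + w*z), w*w - x*x + y*y - z*z, 2 * (y*z - w*x)],
         [2 * (x*z - w*y), 2 * (y*z + w*x), w*w - x*x - y*y + z*z]]
    t = [cd[i] - sum(R[i][j] * cs[j] for j in range(3)) for i in range(3)]
    return R, t


def transform(R, t, p):
    return [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]


def fre(R, t, src, dst):
    """Root-mean-square fiducial registration error (same units as the inputs)."""
    return math.sqrt(sum(math.dist(transform(R, t, p), q) ** 2
                         for p, q in zip(src, dst)) / len(src))


# Invented example: six MR fiducials (mm) and the same points after a known motion.
mr = [(0, 0, 0), (100, 0, 0), (0, 100, 0), (100, 100, 20), (50, -30, 40), (-20, 60, 30)]
ang = math.radians(30)
R_true = [[math.cos(ang), -math.sin(ang), 0.0], [math.sin(ang), math.cos(ang), 0.0], [0.0, 0.0, 1.0]]
t_true = [10.0, -5.0, 200.0]
tags = [transform(R_true, t_true, p) for p in mr]
R, t = rigid_register(mr, tags)
print(fre(R, t, mr, tags))
```

With noise-free synthetic tags the recovered transform matches the one used to generate them; with real detections, the FRE gives a first check on how well the tags were localized, although a low FRE does not guarantee accuracy at the tumor itself.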

The first two markings are drawn in different colors of UV-visible ink that is invisible under normal lighting, so that they do not bias the later markings. Data are acquired by photographing the breast with an iPhone camera while a UV light source is on, so that all markings are visible.

Results and Discussion

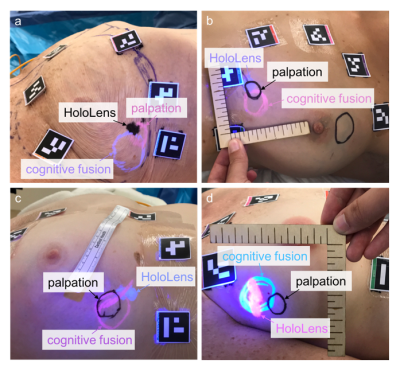

We have acquired data in five patients so far; Figure 4 shows preliminary results from four example patients, with the tumor-location markings made using the three techniques. The HoloLens markings appear closer in size to the palpation markings than the cognitive-fusion markings do.
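Comparisons of marking size and position could be made quantitative once each outline is traced in the UV photograph. The sketch below is a hypothetical analysis, not one reported here: it assumes each marking has been digitized as a polygon and converted to millimeters using a scale reference in the photo, then applies the shoelace formula to compare each marking’s area and centroid with the palpation marking. A single photograph projects the curved breast surface onto a plane, so such numbers would only be approximate.

```python
# Hypothetical analysis sketch: compare a traced marking (HoloLens or
# cognitive fusion) with the palpation marking, both as polygons in mm.
import math


def area_and_centroid(poly):
    """Shoelace formula for a simple polygon given as [(x, y), ...] vertices."""
    a2 = cx = cy = 0.0  # a2 accumulates twice the signed area
    for (x0, y0), (x1, y1) in zip(poly, poly[1:] + poly[:1]):
        cross = x0 * y1 - x1 * y0
        a2 += cross
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    return abs(a2) / 2.0, (cx / (3.0 * a2), cy / (3.0 * a2))


def compare_markings(marking, palpation):
    """Centroid offset (mm) and area ratio of a marking relative to palpation."""
    area_m, c_m = area_and_centroid(marking)
    area_p, c_p = area_and_centroid(palpation)
    return {"centroid_offset_mm": math.dist(c_m, c_p), "area_ratio": area_m / area_p}


# Invented outlines: a 20 mm square and the same square shifted 5 mm.
palpation = [(0.0, 0.0), (20.0, 0.0), (20.0, 20.0), (0.0, 20.0)]
hololens = [(5.0, 0.0), (25.0, 0.0), (25.0, 20.0), (5.0, 20.0)]
print(compare_markings(hololens, palpation))
```

For these invented outlines the function reports a 5 mm centroid offset and an area ratio of 1.0; the formula works for either vertex order because the signed area cancels in the centroid.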

In two cases, the markings correspond fairly well, but not completely (Figure 4a,b). Although the errors we measured when superimposing holograms on a 2D plane were small³, the errors are larger when operating in 3D space. Registration that uses rigid square markers and a monocular RGB camera to identify a plane has its largest error in the depth dimension, even with proper calibration. User biometric calibration errors, such as an imprecise interpupillary distance or lateral and vertical misalignment of the displays relative to the user’s eyes, can also introduce errors. Finally, the HoloLens has a fixed display focus at approximately 2 meters, which can make depth perception difficult at arm’s length.
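A simplified pinhole-camera model illustrates why a single RGB camera localizes a square marker worst along the viewing axis, and how large the fixed-focus mismatch is at arm’s length. It assumes the marker’s distance is inferred from its apparent side length (real ArUco pose estimation solves a perspective-n-point problem on all four corners, so this is only a first-order picture), and the focal length, marker size, distance, and pixel noise below are illustrative values, not HoloLens specifications.

```python
# First-order error model (assumption): pinhole camera, square marker of known
# side length, distance estimated from the marker's apparent size in pixels.


def lateral_error_mm(z_mm, f_px, du_px):
    """X = Z * u / f, so a du-pixel error moves X by Z * du / f (linear in Z)."""
    return z_mm * du_px / f_px


def depth_error_mm(z_mm, f_px, side_mm, dw_px):
    """Z = f * s / w, so |dZ/dw| = Z**2 / (f * s): quadratic in Z."""
    return z_mm ** 2 * dw_px / (f_px * side_mm)


def focus_mismatch_diopters(target_m, focal_plane_m=2.0):
    """Difference in accommodation demand (1/distance) between the target
    and a fixed display focal plane."""
    return abs(1.0 / target_m - 1.0 / focal_plane_m)


# Illustrative values: f = 1000 px, 50 mm marker, 600 mm away, 0.5 px noise.
print(lateral_error_mm(600, 1000, 0.5))      # in-plane error, mm
print(depth_error_mm(600, 1000, 50, 0.5))    # depth error, mm
print(focus_mismatch_diopters(0.5))          # hologram at 0.5 m vs 2 m focus
```

With these numbers the depth error (3.6 mm) is Z/s = 12 times the in-plane error (0.3 mm), and a hologram viewed at 0.5 m sits 1.5 diopters away from the 2 m focal plane.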

The locations of the HoloLens and palpation markings do not always correspond. Surgeons prefer to operate with the patient’s arm extended at 90°, a position that is not feasible inside the MRI scanner bore. This change in arm position between imaging and surgery alters the breast deformation and thus shifts the HoloLens marking relative to the palpation marking (Figure 4c); incorporating a deformable model of the breast may reduce this discrepancy. HoloLens room-tracking errors can also shift the rendered holograms and cause them to move (Figure 4d).

Conclusion

We have demonstrated mixed-reality visualization and alignment in which projected MR images help a surgeon localize a tumor during surgical planning for breast lumpectomy. Preliminary results suggest that MR-guided mixed reality could improve the surgeon’s ability to accurately identify tumor location. We are currently working to improve registration of the holograms to the body through alternative calibration and alignment methods and by incorporating a deformable model of the breast.

Acknowledgements

CBCRP IDEA Award 22IB-0006, GE Healthcare, NIH P41 EB015891, NIH R01 EB009055, Stanford Bio-X

References

1. Fisher B, Anderson S, Bryant J, Margolese RG, Deutsch M, Fisher ER, Jeong J-H, Wolmark N. Twenty-year follow-up of a randomized trial comparing total mastectomy, lumpectomy, and lumpectomy plus irradiation for the treatment of invasive breast cancer. New England Journal of Medicine. 2002;347(16):1233-1241.

2. Wilke LG, Czechura T, Wang C, Lapin B, Liederbach E, Winchester DP, Yao K. Repeat surgery after breast conservation for treatment of Stage 0 to II breast carcinoma. JAMA Surgery. 2014;149(12):1296-1305.

3. Srinivasan S, Wheeler A, Hargreaves B, Daniel B. MR-guided mixed-reality for surgical planning: Set-up and perceptual accuracy. ISMRM 2017, #5545.

4. Yushkevich PA, Piven J, Hazlett HC, Smith RG, Ho S, Gee JC, Gerig G. User-guided 3D active contour segmentation of anatomical structures: Significantly improved efficiency and reliability. NeuroImage. 2006;31(3):1116-1128.

5. Garrido-Jurado S, Muñoz-Salinas R, Madrid-Cuevas FJ, Marín-Jiménez MJ. Automatic generation and detection of highly reliable fiducial markers under occlusion. Pattern Recognition. 2014;47(6):2280-2292.
