0573

Deep Generative Adversarial Neural Networks for Compressed Sensing (GANCS) Automates MRI

1 Electrical Engineering, Stanford University, Stanford, CA, United States; 2 Electrical Engineering, Stanford University, Stanford, CA, United States; 3 Stanford University, Stanford, CA, United States; 4 Radiology, Stanford University, Stanford, CA, United States; 5 Radiation Oncology, Stanford University, Stanford, CA, United States; 6 Stanford University, Stanford, CA, United States

Synopsis

MRI suffers from aliasing artifacts when undersampled for real-time imaging. Conventional compressed sensing (CS), however, is not cognizant of diagnostic image quality, and substantially trades off accuracy for speed in real-time imaging. To cope with these challenges, we put forth a novel CS framework that leverages generative adversarial networks (GANs) to model a manifold of MR images from historical patients. Evaluations by expert radiologists on a large abdominal MRI dataset of pediatric patients corroborate that GANCS retrieves improved images with finer details relative to CS-MRI and deep-learning schemes with pixel-wise costs, while running 100 times faster than CS-MRI.

Introduction

Real-time MRI is of paramount importance for diagnostic and therapeutic guidance in next-generation visualization platforms. The slow acquisition process and motion, especially for pediatric patients, render image reconstruction an ill-posed linear inverse problem [1,2]. Consider k-space data $$$\mathbf{y}=\boldsymbol{\Phi} \mathbf{x} + \mathbf{v}$$$, where $$$\boldsymbol{\Phi} \in \mathbb{C}^{M \times N}$$$ with $$$M \ll N$$$ captures the Fourier transform and coil maps, $$$\mathbf{v}$$$ is noise, and $$$\mathbf{x}$$$ is the image of interest. To retrieve $$$\mathbf{x}$$$ from $$$\mathbf{y}$$$, conventional CS uses sparsity regularization in a suitable transform domain, e.g., wavelets (WV) [1]. However, it requires running several iterations of non-smooth optimization algorithms, e.g., FISTA, with manual hyperparameter tuning to optimize performance, which hinders real-time MRI. Moreover, CS-MRI is oblivious to the perceptual quality of images. Given the abundance of historical scans $$$\mathcal{X}:=\{\mathbf{x}_k\}_{k=1}^K$$$ and the corresponding observations $$$\mathcal{Y}:=\{\mathbf{y}_k\}_{k=1}^K$$$, we propose a deep generative adversarial network (GAN) to model a manifold of high-quality MR images consistent with the data.
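For reference, the conventional CS baseline described above can be sketched in NumPy as FISTA applied to $$$\min_{\mathbf{x}} \tfrac{1}{2}\|\boldsymbol{\Phi}\mathbf{x}-\mathbf{y}\|_2^2 + \alpha\|\mathbf{W}\mathbf{x}\|_1$$$. This is a minimal sketch under stated assumptions, not the BART implementation: it assumes a single coil (no coil maps), noiseless data ($$$\mathbf{v}=\mathbf{0}$$$), a random Cartesian mask, and a one-level orthonormal Haar transform standing in for $$$\mathbf{W}$$$; all function names and the weight `alpha` are hypothetical.

```python
import numpy as np

def fft2c(x):
    # Centered, unitary 2D FFT (norm="ortho" keeps the operator norm at 1)
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(x), norm="ortho"))

def ifft2c(k):
    # Inverse of fft2c
    return np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(k), norm="ortho"))

def forward(x, mask):
    # Phi x (single-coil assumption): FFT, then keep only the sampled k-space points
    return fft2c(x)[mask]

def adjoint(y, mask):
    # Phi^H y: zero-fill the unsampled k-space points, then inverse FFT
    k = np.zeros(mask.shape, dtype=complex)
    k[mask] = y
    return ifft2c(k)

def haar2(x):
    # One-level orthonormal 2D Haar transform: rows first, then columns
    s = np.sqrt(2.0)
    x = np.concatenate([(x[0::2] + x[1::2]) / s, (x[0::2] - x[1::2]) / s], axis=0)
    return np.concatenate([(x[:, 0::2] + x[:, 1::2]) / s, (x[:, 0::2] - x[:, 1::2]) / s], axis=1)

def ihaar2(c):
    # Inverse of haar2: undo the column step, then the row step
    s = np.sqrt(2.0)
    n0, n1 = c.shape
    x = np.empty_like(c)
    a, d = c[:, : n1 // 2], c[:, n1 // 2 :]
    x[:, 0::2], x[:, 1::2] = (a + d) / s, (a - d) / s
    c, x = x, np.empty_like(x)
    a, d = c[: n0 // 2], c[n0 // 2 :]
    x[0::2], x[1::2] = (a + d) / s, (a - d) / s
    return x

def soft(z, t):
    # Complex soft-thresholding: shrink magnitudes by t, keep phases
    mag = np.abs(z)
    return np.where(mag > t, (1 - t / np.maximum(mag, 1e-12)) * z, 0)

def objective(x, y, mask, alpha):
    r = forward(x, mask) - y
    return 0.5 * np.vdot(r, r).real + alpha * np.abs(haar2(x)).sum()

def cs_fista(y, mask, alpha, n_iter=200):
    # FISTA for 0.5*||Phi x - y||^2 + alpha*||W x||_1; since ||Phi^H Phi|| <= 1
    # for a unitary FFT, a unit step size is valid.
    x = adjoint(y, mask)  # start from the zero-filled estimate
    z, t = x.copy(), 1.0
    for _ in range(n_iter):
        g = z - adjoint(forward(z, mask) - y, mask)  # gradient step on the data term
        x_new = ihaar2(soft(haar2(g), alpha))  # prox step (valid because W is orthonormal)
        t_new = (1 + np.sqrt(1 + 4 * t * t)) / 2
        z = x_new + ((t - 1) / t_new) * (x_new - x)  # momentum extrapolation
        x, t = x_new, t_new
    return x

rng = np.random.default_rng(0)
x_true = np.zeros((32, 32))
x_true[8:20, 10:26] = 1.0
x_true[14:18, 4:8] = 0.5
mask = rng.random((32, 32)) < 0.4  # hypothetical random Cartesian mask
mask[14:18, 14:18] = True  # always keep the k-space center
y = forward(x_true, mask)
x_zf = adjoint(y, mask)
x_cs = cs_fista(y, mask, alpha=0.01)
```

Every iteration applies $$$\boldsymbol{\Phi}$$$ and $$$\boldsymbol{\Phi}^H$$$ once, and `alpha` and `n_iter` must be chosen by hand, which is the tuning and latency burden the paragraph above refers to.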

Several recent works automate medical image reconstruction by leveraging historical data; see, e.g., [3,4,5,6,7,8]. By training a neural network to map aliased images to gold-standard ones, they achieve speedups, but suffer from blurring artifacts and possible hallucinations. This is mainly due to i) adopting pixel-wise costs that are oblivious to high-frequency texture details, which are crucial for making diagnostic decisions; and ii) a lack of data consistency.
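A toy NumPy illustration (not taken from this work) of why a pixel-wise squared-error cost favors blurry outputs: when two sharp edge positions are equally plausible, the single prediction that minimizes the expected $$$\ell_2$$$ error is their pixel-wise mean, i.e., a smeared edge. The 1D profiles are made up for illustration.

```python
import numpy as np

# Two equally plausible sharp 1D edge profiles that differ only in edge location
edge_a = np.array([0, 0, 0, 1, 1, 1], dtype=float)
edge_b = np.array([0, 0, 1, 1, 1, 1], dtype=float)
candidates = np.stack([edge_a, edge_b])

# Under a pixel-wise squared-error cost, the best single prediction is the
# pixel-wise mean of the candidates, which blurs the edge.
l2_optimal = candidates.mean(axis=0)

def expected_l2(pred):
    # Expected squared error of a prediction over the equally likely candidates
    return np.mean(np.sum((candidates - pred) ** 2, axis=1))
```

Here `l2_optimal` is `[0, 0, 0.5, 1, 1, 1]`, with expected loss 0.25, whereas committing to either sharp edge costs 0.5, so a network trained only on this cost is rewarded for hedging between textures rather than reproducing them.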

Method

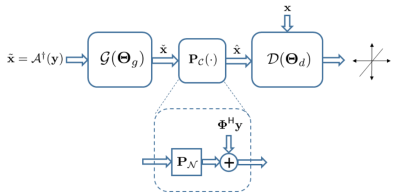

Reconstruction approach. To effectively learn the manifold $$$\mathcal{M}$$$ of MR images from training samples, one needs to ensure that c1) the manifold contains plausible images that are c2) consistent with the data. To address c1), we adopt GANs, which are powerful in modeling the manifold of perceptually appealing natural images [9,10,11,12,13,14,15,16]. A tandem of generator (G) and discriminator (D) networks is considered (see Fig. 1), with a zero-filled estimate $$$\tilde{\mathbf{x}}$$$ as the input to G. The G network projects $$$\tilde{\mathbf{x}}$$$ onto $$$\mathcal{M}$$$ to remove the aliasing artifacts, and G's output, say $$$\check{\mathbf{x}}$$$, then passes through the D network, which scores $$$1$$$ if $$$\check{\mathbf{x}} \in \mathcal{X}$$$ and $$$0$$$ otherwise. Regarding c2), G's output $$$\check{\mathbf{x}}$$$ may be inconsistent with the data $$$\mathbf{y}$$$. Thus, $$$\check{\mathbf{x}}$$$ is projected onto the feasible set $$$\{\mathbf{x}: \mathbf{y}=\boldsymbol{\Phi}\mathbf{x}\}$$$ through the affine projection $$$\hat{\mathbf{x}}=\boldsymbol{\Phi}^{\dagger} \mathbf{y} + (\mathbf{I}-\boldsymbol{\Phi}^{\dagger}\boldsymbol{\Phi}) \check{\mathbf{x}}$$$, where $$$\boldsymbol{\Phi}^{\dagger}$$$ denotes the pseudo-inverse of $$$\boldsymbol{\Phi}$$$. However, GANs alone may hallucinate image features. We thus adopt a mixture of the least-squares GAN (LSGAN) cost [18] and a pixel-wise $$$\ell_1$$$ cost, weighted by $$$\lambda$$$ and $$$\eta$$$, respectively, to train the generator [19].
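The data-consistency projection and the mixed generator cost can be sketched in NumPy as follows. This is a minimal sketch assuming a single-coil $$$\boldsymbol{\Phi}$$$ made of a unitary FFT followed by k-space row selection, for which $$$\boldsymbol{\Phi}^{\dagger}=\boldsymbol{\Phi}^{H}$$$; the function names, the use of means rather than sums in the losses, and the placeholder discriminator scores are hypothetical. The default weights $$$\lambda=0.25$$$ and $$$\eta=0.75$$$ are the values reported in the Results.

```python
import numpy as np

def fft2c(x):
    # Centered, unitary 2D FFT
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(x), norm="ortho"))

def ifft2c(k):
    # Inverse of fft2c
    return np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(k), norm="ortho"))

def phi_pinv(y, mask):
    # Phi^+ y = Phi^H y for this operator: zero-fill unsampled k-space, inverse FFT
    k = np.zeros(mask.shape, dtype=complex)
    k[mask] = y
    return ifft2c(k)

def data_consistency(x_check, y, mask):
    # x_hat = Phi^+ y + (I - Phi^+ Phi) x_check. In k-space this keeps G's values
    # where nothing was measured and overwrites the sampled locations with y.
    k = fft2c(x_check)
    k[mask] = y
    return ifft2c(k)

def lsgan_d_loss(d_real, d_fake):
    # LSGAN discriminator cost: scores of real images -> 1, generated images -> 0
    return 0.5 * np.mean((d_real - 1.0) ** 2) + 0.5 * np.mean(d_fake ** 2)

def lsgan_g_loss(d_fake):
    # LSGAN generator cost: push D's scores on generated images toward 1
    return 0.5 * np.mean((d_fake - 1.0) ** 2)

def generator_loss(x_hat, x_gold, d_fake, lam=0.25, eta=0.75):
    # Mixture: lam * LSGAN term + eta * pixel-wise l1 cost
    return lam * lsgan_g_loss(d_fake) + eta * np.mean(np.abs(x_hat - x_gold))

rng = np.random.default_rng(1)
mask = rng.random((16, 8)) < 0.2  # hypothetical sampling mask
x_gold = rng.standard_normal((16, 8))
y = fft2c(x_gold)[mask]
x_check = x_gold + 0.1 * rng.standard_normal((16, 8))  # stand-in for G's output
x_hat = data_consistency(x_check, y, mask)
```

The k-space overwrite is exact only because $$$\boldsymbol{\Phi}\boldsymbol{\Phi}^{H}=\mathbf{I}$$$ here; with coil maps this generally no longer holds, and $$$\boldsymbol{\Phi}^{\dagger}$$$ would have to be applied with an iterative solver instead.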

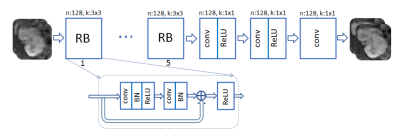

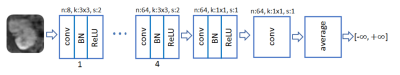

A ResNet with 5 residual blocks (RBs) and skip connections is adopted for G (Fig. 2). The input and output are complex-valued images of size 256x128, with the real and imaginary components treated as separate channels. Each RB consists of two convolutional layers with small 3x3 kernels and 128 feature maps, followed by batch normalization (BN) and rectified linear unit (ReLU) activation. The D network takes as input the magnitude of the complex-valued output of G after the data-consistency projection (Fig. 3). It consists of 8 convolutional layers, where the last layer averages the features of the seventh layer to form a decision variable for classification.
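A minimal NumPy sketch of a generator with this layout follows (forward pass only, no training). The abstract does not pin down several details, so the following are assumptions: each 3x3 convolution inside an RB is followed by BN and ReLU before the identity skip is added, head and tail convolutions map between 2 and the chosen number of feature maps, a global skip adds the input to the output, BN has no learnable scale or shift, and weights are randomly initialized. All function names are hypothetical.

```python
import numpy as np

def conv3x3(x, w, b):
    # 3x3 convolution (cross-correlation, as in CNN libraries), stride 1, zero padding 1.
    # x: (batch, c_in, H, W); w: (c_out, c_in, 3, 3); b: (c_out,)
    h, wd = x.shape[2], x.shape[3]
    xp = np.pad(x, ((0, 0), (0, 0), (1, 1), (1, 1)))
    out = np.zeros((x.shape[0], w.shape[0], h, wd))
    for i in range(3):
        for j in range(3):
            out += np.einsum("bchw,oc->bohw", xp[:, :, i:i + h, j:j + wd], w[:, :, i, j])
    return out + b[None, :, None, None]

def batch_norm(x, eps=1e-5):
    # Training-mode BN: normalize each channel over (batch, H, W)
    mu = x.mean(axis=(0, 2, 3), keepdims=True)
    return (x - mu) / np.sqrt(x.var(axis=(0, 2, 3), keepdims=True) + eps)

def relu(x):
    return np.maximum(x, 0.0)

def init_params(rng, channels=128, n_blocks=5):
    def conv(c_out, c_in):  # He-style random initialization (an assumption)
        return rng.normal(0.0, np.sqrt(2.0 / (9 * c_in)), (c_out, c_in, 3, 3)), np.zeros(c_out)
    return {
        "head": conv(channels, 2),
        "blocks": [(conv(channels, channels), conv(channels, channels)) for _ in range(n_blocks)],
        "tail": conv(2, channels),
    }

def generator(z, p):
    # z: complex images (batch, H, W); real and imaginary parts become 2 channels
    x = np.stack([z.real, z.imag], axis=1)
    h = conv3x3(x, *p["head"])
    for c1, c2 in p["blocks"]:
        r = relu(batch_norm(conv3x3(h, *c1)))
        r = relu(batch_norm(conv3x3(r, *c2)))
        h = h + r  # residual (skip) connection of the RB
    out = x + conv3x3(h, *p["tail"])  # global skip: G predicts a correction to its input
    return out[:, 0] + 1j * out[:, 1]

rng = np.random.default_rng(0)
params = init_params(rng, channels=8)  # 8 feature maps keep this demo fast
z = rng.standard_normal((2, 32, 16)) + 1j * rng.standard_normal((2, 32, 16))
x_out = generator(z, params)
d_input = np.abs(x_out)  # D would see magnitudes (after data consistency in the full pipeline)
```

Setting `channels=128` and passing 256x128 complex images matches the sizes stated above; the small configuration is only to keep the example quick to run.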

Data. Abdominal image volumes were acquired for 350 pediatric patients after contrast enhancement. Each 3D volume includes 150-220 axial slices of size 256x128 with voxel resolution 1.07x1.12x2.4 mm. Axial slices of 340 patients (50,000 slices) are used for training, and those of the remaining 10 patients (1,920 slices) for testing. All in vivo scans were acquired on a 3T MRI scanner.
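Since slices from the same patient are strongly correlated, the training/testing split described above is made per patient rather than per slice. A minimal sketch of such a split on a synthetic cohort (the slice counts are random draws within the stated 150-220 range, not the actual data; the function name is hypothetical):

```python
import numpy as np

def split_by_patient(patient_ids, n_test, seed=0):
    # patient_ids: one entry per slice. Whole patients go to the test set, so no
    # patient contributes slices to both training and testing.
    rng = np.random.default_rng(seed)
    test_patients = rng.choice(np.unique(patient_ids), size=n_test, replace=False)
    test = np.isin(patient_ids, test_patients)
    return ~test, test

# Hypothetical cohort: 350 patients with 150-220 axial slices each
rng = np.random.default_rng(0)
slices_per_patient = rng.integers(150, 221, size=350)
patient_ids = np.repeat(np.arange(350), slices_per_patient)
train, test = split_by_patient(patient_ids, n_test=10)
```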

Results

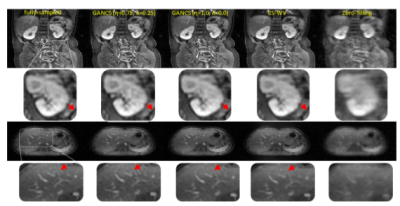

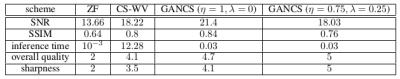
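The abstract does not define the SNR metric reported in Fig. 5. A common choice, assumed here, is the ratio of reference-image energy to error energy in dB, computed per slice on magnitude images and averaged over the test slices. A minimal NumPy sketch (function names are hypothetical):

```python
import numpy as np

def snr_db(x_ref, x_rec):
    # Assumed definition: 20*log10(||x_ref|| / ||x_ref - x_rec||) on magnitude images
    ref, rec = np.abs(x_ref), np.abs(x_rec)
    return 20.0 * np.log10(np.linalg.norm(ref) / np.linalg.norm(ref - rec))

def average_snr_db(refs, recs):
    # Mean of per-slice SNR values over a test set
    return float(np.mean([snr_db(r, x) for r, x in zip(refs, recs)]))

ref = np.ones((4, 4))
snr = snr_db(ref, 1.1 * ref)  # about 20 dB: the error norm is one tenth of the reference norm
```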

The network is trained to map 5-fold undersampled images (variable density with radial view ordering) to fully sampled ones. For a random test patient, representative axial and coronal slices retrieved by the various methods are shown in Fig. 4. Note that CS-WV is tuned for best performance using the FISTA algorithm in BART [20]. GANCS ($$$\eta=0.75,~\lambda=0.25$$$) clearly reveals the details of liver vessels with more realistic texture and contrast, while these look blurry with the other schemes. Further quantitative results, namely the average SNR (dB), SSIM, inference time (s), and radiologist opinion scores (ROS) on image quality and sharpness, ranging from 0 (poor) to 5 (excellent), are listed in Fig. 5. Note in particular the 100-fold speedup and the ROS improvement relative to CS-WV.

Conclusions

This study advocates GANCS for rapid, high-quality reconstruction of undersampled MR images. With a deep ResNet generator and a deep CNN discriminator trained on abdominal images of pediatric patients, GANCS retrieves higher-quality images than deep-learning schemes with pixel-wise costs, in 20-30 ms. Our ongoing research involves validating the performance with more radiologists and a better diagnostic quality assessment strategy.

Acknowledgements

This work was supported by the NIH T32121940 award and NIH R01EB009690.

References

[1] Michael Lustig, David Donoho, and John M Pauly. Sparse MRI: The application of compressed sensing for rapid MR imaging. Magnetic Resonance in Medicine, 58(6):1182–1195, 2007.

[2] Morteza Mardani, Georgios B Giannakis, and Kamil Ugurbil. Tracking tensor subspaces with informative random sampling for real-time MR imaging. arXiv preprint, arXiv:1609.04104, 2016.

[3] Hu Chen, Yi Zhang, Mannudeep K Kalra, Feng Lin, Peixi Liao, Jiliu Zhou, and Ge Wang. Low-dose CT with a residual encoder-decoder convolutional neural network (RED-CNN). arXiv preprint, arXiv:1702.00288, 2017.

[4] Bo Zhu, Jeremiah Liu, Bruce Rosen, and Matthew Rosen. Neural network MR image reconstruction with AUTOMAP: Automated transform by manifold approximation. In Proceedings of the 25th Annual Meeting of ISMRM, Honolulu, HI, USA, 2017.

[5] Shanshan Wang, Ningbo Huang, Tao Zhao, Yong Yang, Leslie Ying, and Dong Liang. 1D partial Fourier parallel MR imaging with deep convolutional neural network. In Proceedings of the 25th Annual Meeting of ISMRM, Honolulu, HI, USA, 2017.

[6] Jo Schlemper, Jose Caballero, Joseph V. Hajnal, Anthony Price, and Daniel Rueckert. A deep cascade of convolutional neural networks for MR image reconstruction. In Proceedings of the 25th Annual Meeting of ISMRM, Honolulu, HI, USA, 2017.

[7] Angshul Majumdar. Real-time dynamic MRI reconstruction using stacked denoising autoencoder. arXiv preprint, arXiv:1503.06383 [cs.CV], Mar. 2015.

[8] Ashish Bora, Ajil Jalal, Eric Price, and Alexandros G Dimakis. Compressed sensing using generative models. arXiv preprint, arXiv:1703.03208, 2017.

[9] Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. Generative adversarial nets. In Advances in Neural Information Processing Systems, pages 2672–2680, 2014.

[10] Alec Radford, Luke Metz, and Soumith Chintala. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint, arXiv:1511.06434, 2015.

[11] Sanjeev Arora, Rong Ge, Yingyu Liang, Tengyu Ma, and Yi Zhang. Generalization and equilibrium in generative adversarial networks (GANs). arXiv preprint, arXiv:1703.00573v2, Mar. 2017.

[12] Jun-Yan Zhu, Philipp Krähenbühl, Eli Shechtman, and Alexei A Efros. Generative visual manipulation on the natural image manifold. In European Conference on Computer Vision, pages 597–613. Springer, 2016.

[13] Justin Johnson, Alexandre Alahi, and Fei-Fei Li. Perceptual losses for real-time style transfer and super-resolution. In European Conference on Computer Vision, pages 694–711. Springer, Mar. 2016.

[14] Christian Ledig, Lucas Theis, Ferenc Huszár, Jose Caballero, Andrew Cunningham, Alejandro Acosta, Andrew Aitken, Alykhan Tejani, Johannes Totz, Zehan Wang, et al. Photo-realistic single image super-resolution using a generative adversarial network. arXiv preprint arXiv:1609.04802, 2016.

[15] Casper Kaae Sonderby, Jose Caballero, Lucas Theis, Wenzhe Shi, and Ferenc Huszar. Amortised MAP inference for image super-resolution. arXiv preprint, arXiv:1610.04490, Oct. 2016.

[16] Raymond Yeh, Chen Chen, Teck Yian Lim, Mark Hasegawa-Johnson, and Minh N Do. Semantic image inpainting with perceptual and contextual losses. arXiv preprint, arXiv:1607.07539, 2016.

[17] Dimitri P Bertsekas. Nonlinear programming. Athena Scientific, Belmont, 1999.

[18] Xudong Mao, Qing Li, Haoran Xie, Raymond YK Lau, Zhen Wang, and Stephen Paul Smolley. Least squares generative adversarial networks. arXiv preprint, arXiv:1611.04076, 2016.

[19] Hang Zhao, Orazio Gallo, Iuri Frosio, and Jan Kautz. Loss functions for image restoration with neural networks. IEEE Transactions on Computational Imaging, 3(1):47–57, 2017.

[20] Jonathan I. Tamir, Frank Ong, Joseph Y. Cheng, Martin Uecker, and Michael Lustig. Generalized magnetic resonance image reconstruction using the Berkeley Advanced Reconstruction Toolbox. In ISMRM Workshop on Data Sampling and Image Reconstruction, Sedona, 2016.

Figures