0357

Creating Standardized MR Images with Deep Learning to Improve Cross-Vendor Comparability

1Electrical Engineering, Stanford University, Stanford, CA, United States, 2Radiology, Stanford University, Stanford, CA, United States

Synopsis

A very common task for radiologists is to compare sequential imaging studies that were acquired on different MR hardware systems, which can be difficult and inaccurate because designs differ across scanners and vendors. Cross-vendor standardization and transformation is valuable for more quantitative analysis in clinical exams and trials. With in-vivo multi-vendor datasets, we show that it is possible to achieve accurate cross-vendor transformation using state-of-the-art deep learning style-transfer algorithms. The method preserves anatomical information while transferring the vendor-specific contrast "style". The use of unsupervised training enables the method to be further trained and applied on existing large-scale MRI datasets. This technique can lead to a universal MRI style, which benefits patients by improving inter-subject reproducibility, enabling quantifiable comparison and pushing MRI to be more quantitative and standardized.

Introduction

A very common task for radiologists is to compare sequential imaging studies that were acquired on different MR hardware systems. Because each manufacturer’s images show slightly different contrast or distortions due to different design considerations, this seemingly simple task can be challenging. Clinical imaging trials can be even more challenging when scanners from multiple vendors are involved.

Therefore, it would be highly valuable if images could be transformed from the appearance of one vendor to that of another vendor, or to a standardized MR style. Having a universal MRI style could benefit both patients and radiologists by improving inter-subject reproducibility and enabling more quantitative analysis.

Deep learning methods have proven adept at synthesizing new image contrasts [1,2,3], using both paired and unpaired datasets for training, and may be well-suited to perform “style” changes corresponding to different MR vendor implementations.

Method

1. Deep-Learning approach

Recently, there has been research using deep learning algorithms for style transformation [1], which can generate an image or photo that keeps the content and structure information from one image but takes the style and color information from another. A faster inference network [2] and the Cycle Generative Adversarial Network (Cycle-GAN) [3] further enable improved performance and more flexible training on both paired and un-paired datasets.
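To make the un-paired training idea more concrete, the following is a minimal NumPy sketch of the cycle-consistency term used by Cycle-GAN [3]: an image mapped from vendor A to vendor B and back should return to itself, and likewise for B. The generators here (`toy_a_to_b`, `toy_b_to_a`) are hypothetical stand-ins, a simple gain and offset change and its inverse, and are not the networks used in this work; the adversarial terms of the full Cycle-GAN objective are omitted.

```python
import numpy as np


def l1(a, b):
    """Mean absolute difference between two images."""
    return float(np.mean(np.abs(a - b)))


def cycle_consistency_loss(x_a, x_b, g_ab, g_ba):
    """Cycle loss: A -> B -> A and B -> A -> B should both return the input."""
    forward = l1(g_ba(g_ab(x_a)), x_a)
    backward = l1(g_ab(g_ba(x_b)), x_b)
    return forward + backward


# Hypothetical toy generators: a gain/offset change that mimics a
# vendor-specific contrast, and its exact inverse.
def toy_a_to_b(img):
    return 1.2 * img + 0.05


def toy_b_to_a(img):
    return (img - 0.05) / 1.2


rng = np.random.default_rng(0)
image_a = rng.random((64, 64))  # un-paired sample from "vendor A"
image_b = rng.random((64, 64))  # un-paired sample from "vendor B"

# Near zero, because the toy generators are exact inverses of each other.
loss = cycle_consistency_loss(image_a, image_b, toy_a_to_b, toy_b_to_a)

# Clearly positive when the backward generator does not undo the forward one.
bad_loss = cycle_consistency_loss(image_a, image_b, toy_a_to_b, lambda img: img)
```

Because the loss compares each image only with its own round trip, it needs no co-registered pair, which is what allows training on un-paired samples.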

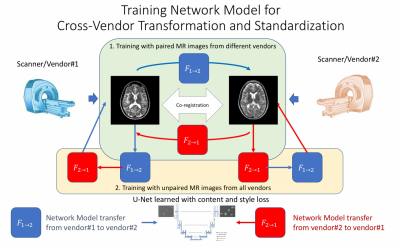

The entire workflow is shown in Figure 1. U-net neural network structures, which have been widely used for medical tasks such as segmentation and image enhancement, were used in this work for the image-to-image regression tasks. We trained network models both on paired datasets and on un-paired datasets, which gave us more flexibility in data collection.

2. Datasets

We collected and co-registered multi-vendor MRI datasets from 7 subjects on different 3T scanners (GE MR750, Philips Ingenia, Siemens Skyra). Three subjects were scanned with similar settings on both the GE MR750 and the Philips Ingenia, and the other four subjects were scanned on both the GE MR750 and the Siemens Skyra. Additionally, there are 25 un-co-registered samples from different subjects that can be used for unpaired training with Cycle-GAN to ensure robust training and avoid over-fitting.

We explored the performance of standardizing common contrast-weighted sequences: 1) Axial-2D-T1w, 2) Axial-2D-T2w, 3) Axial-2D-GRE and 4) Sagittal-3D-T1-FLAIR. Each dataset contains around 28 to 32 slices for 2D images and around 200 to 300 planes for high-resolution 3D images.

3. Evaluation

We evaluated contrast standardization results for T1w, T2w, GRE and FLAIR. For evaluation metrics, we used Peak-Signal-to-Noise-Ratio (PSNR), normalized Root-Mean-Squared-Error (RMSE) and Structural Similarity Index (SSIM) to compare the real acquired images on different scanners with the results of cross-vendor transforms.
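As an illustration of how these three metrics can be computed for one co-registered slice pair, here is a minimal NumPy sketch. The normalization choices are assumptions, since the abstract does not specify them: RMSE is divided by the reference intensity range, PSNR uses the reference maximum as the peak, and SSIM is computed once over the whole image rather than with the usual sliding window. The images are synthetic placeholders, not data from this work.

```python
import numpy as np


def nrmse(reference, test):
    """RMSE divided by the intensity range of the reference image."""
    rmse = np.sqrt(np.mean((reference - test) ** 2))
    return float(rmse / (reference.max() - reference.min()))


def psnr(reference, test):
    """PSNR in dB, using the reference maximum as the peak value."""
    mse = np.mean((reference - test) ** 2)
    return float(10.0 * np.log10(reference.max() ** 2 / mse))


def global_ssim(reference, test, k1=0.01, k2=0.03):
    """SSIM computed once over the whole image (no sliding window)."""
    data_range = reference.max() - reference.min()
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    mu_r, mu_t = reference.mean(), test.mean()
    var_r, var_t = reference.var(), test.var()
    cov = np.mean((reference - mu_r) * (test - mu_t))
    num = (2 * mu_r * mu_t + c1) * (2 * cov + c2)
    den = (mu_r ** 2 + mu_t ** 2 + c1) * (var_r + var_t + c2)
    return float(num / den)


# Synthetic placeholders: a "target vendor" slice, the same anatomy with a
# different gain/offset plus noise ("source vendor"), and a close estimate
# standing in for a transformed image.
rng = np.random.default_rng(0)
target = rng.random((64, 64))
source = 0.8 * target + 0.1 + 0.05 * rng.standard_normal((64, 64))
transformed = target + 0.01 * rng.standard_normal((64, 64))

before = (psnr(target, source), nrmse(target, source), global_ssim(target, source))
after = (psnr(target, transformed), nrmse(target, transformed), global_ssim(target, transformed))
```

Comparing `before` with `after` mirrors the evaluation described above: the acquired image from the target scanner is the reference, and the metrics are computed once against the untransformed image and once against the transformed one.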

Results

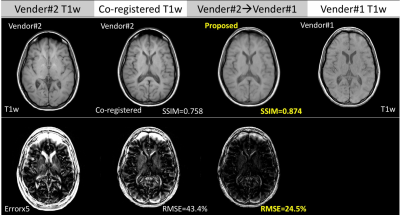

Figure 2 shows that the proposed method generates accurate cross-vendor transformations (T1w, vendor#2 to vendor#1 shown as an example). The inter-vendor differences between the two images are reduced after transformation, while the diagnostic quality and the original anatomical information of the acquired T1w image are preserved.

As shown in Figure 3, the similarity metrics (average statistics for T1w, T2w and GRE) improve significantly (p<0.0001) with the proposed cross-vendor standardization: a PSNR gain of over 5.0 dB, a reduction in RMSE of around 30% and an SSIM improvement of over 0.15.
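The abstract does not state which statistical test produced the reported p-value. One common choice for before/after metrics measured on the same slices is a paired t-test, sketched below under that assumption. The PSNR values are made up purely for illustration and are not results from this work.

```python
import math

import numpy as np


def paired_t_statistic(before, after):
    """t statistic and degrees of freedom for paired differences (after - before)."""
    diff = np.asarray(after, dtype=float) - np.asarray(before, dtype=float)
    n = diff.size
    std_err = diff.std(ddof=1) / math.sqrt(n)  # standard error of the mean difference
    return float(diff.mean() / std_err), n - 1


# Made-up per-slice PSNR values in dB (illustrative only).
psnr_before = [20.0, 22.0, 21.0, 23.0]
psnr_after = [25.0, 27.5, 26.0, 29.0]

mean_gain = float(np.mean(np.subtract(psnr_after, psnr_before)))
t_stat, dof = paired_t_statistic(psnr_before, psnr_after)
```

The t statistic would then be compared with a Student's t distribution with `dof` degrees of freedom to obtain a p-value.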

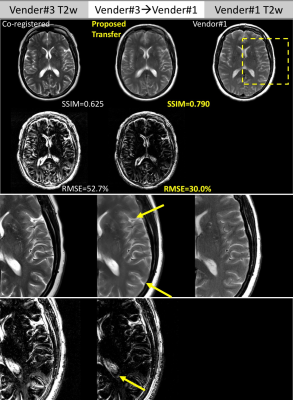

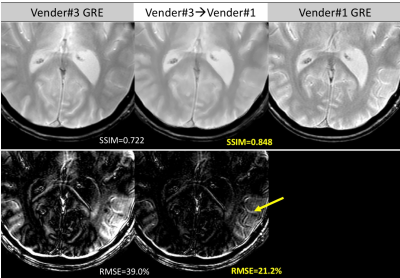

Figure 4 and Figure 5 further compare the detailed residual errors of cross-vendor transformation (T2w, GRE, vendor#3 to vendor#1) with zoomed-in visualizations of the results and errors.

Discussion

Deep-learning has previously been applied to synthesize image contrasts, such as the creation of CT from MRI for PET/MRI attenuation correction[4] and synthesizing 7T-like images from 3T MR images[5]. Given this, it should be feasible to take MR images from one scanner and synthesize the appearance of a different scanner. In the current work, we demonstrate this capability. Such an application is essential and valuable for clinical radiologists, who often have to compare patients’ scans with prior studies done on other scanner types. With the proposed technique, we can standardize the specific vendor’s MRI images into a common MRI-contrast-space, which enables easier longitudinal and cross-sectional analysis.

The proposed method uses supervised training with paired and co-registered cross-vendor images, which are not commonly available. Thus, we also applied an unsupervised training strategy, which can use larger multi-vendor contrast-weighted MR image datasets.

In addition, this technique can further push MRI to be a more standardized and quantitative imaging modality with better quantification and repeatability. It is also complementary to direct parameter mapping techniques (MRF etc.) and achieves standardized imaging directly from routine MRI sequences.

Conclusion

Accurate cross-vendor transforms for different types of MRI contrast weighting were achieved using deep learning methods. The proposed technique can improve the common clinical workflow of comparing sequential scans taken on different scanners. This work will push MRI closer to becoming a standardized and quantitative imaging modality.

Acknowledgements

No acknowledgement found.

References

[1] Gatys, Leon A., Alexander S. Ecker, and Matthias Bethge. "Image style transfer using convolutional neural networks." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016.

[2] Johnson, Justin, Alexandre Alahi, and Li Fei-Fei. "Perceptual losses for real-time style transfer and super-resolution." European Conference on Computer Vision. Springer International Publishing, 2016.

[3] Zhu, Jun-Yan, et al. "Unpaired image-to-image translation using cycle-consistent adversarial networks." arXiv preprint arXiv:1703.10593 (2017).

[4] Liu, Fang, et al. "Deep Learning MR Imaging–based Attenuation Correction for PET/MR Imaging." Radiology (2017): 170700.

[5] Bahrami, Khosro, et al. "Reconstruction of 7T-like images from 3T MRI." IEEE transactions on medical imaging 35.9 (2016): 2085-2097.

Figures