5657

Improved Multi-echo Water-fat Separation Using Deep Learning

1 Electrical Engineering, Stanford University, Stanford, CA, United States; 2 Radiology, Stanford University, Stanford, CA, United States

Synopsis

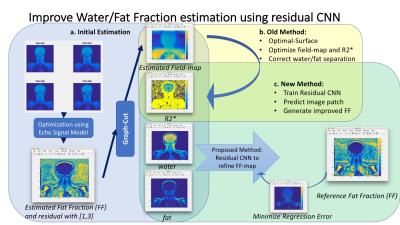

Multi-echo water/fat separation may fail in some cases because of noise, inaccurate estimation of the water/fat signals, and an inhomogeneous $$$B_0$$$ field. Here we developed a novel data-driven method that improves water/fat separation using deep learning. A residual convolutional neural network was trained on multi-contrast image patches (initial estimates of the water/fat signals, the $$$R_2^*$$$ map and the field map) to produce better estimates of fat-fraction (FF) patches, from which the entire FF image is synthesized. The approach was validated on ISMRM challenge datasets covering a variety of anatomies and showed improvement over existing methods. It handles flexible echo times in the acquisition and is computationally efficient.

Target Audience

Researchers and clinicians working on applications of water/fat separation in MRI.

Introduction

Water/fat separation is widely used in many regions of the body. Current MRI techniques, specifically multi-echo Dixon-type acquisitions, work well most of the time. However, they may still fail in ways that compromise clinical interpretation. Failures such as water/fat swaps are mainly caused by inaccurate estimation of the inhomogeneous $$$B_0$$$ field, which in turn corrupts the estimates of $$$R_2^*$$$ and of the water/fat signals.

This work develops a novel water/fat separation method using deep learning. The proposed approach was developed and validated on a varied dataset of anatomical and clinical cases released for the 2012 ISMRM challenge. Results demonstrate significant improvement over existing methods. The method handles flexible echo times in the acquisition and is an efficient and effective addition to current methods.

This work uses data from the 2012 ISMRM challenge, which provides ground-truth water/fat separation for training, testing and evaluating our method.

Theory

Water/fat separation in multi-echo acquisitions is based on the Dixon signal model, in which the signal is sampled at several echo time (TE) shifts. At location $$$\mathbf{r}$$$ and echo time $$$TE_n$$$, the measured signal is modeled as

$$S(\mathbf{r},TE_n) = \left(\rho_{water}(\mathbf{r})+\rho_{fat}(\mathbf{r})\left [ \sum_{i=1}^{M}\beta_i e ^{j2\pi\delta_iTE_n}\right ] \right) e^{-R_2^*(\mathbf{r})TE_n+j2\pi \Delta B_0(\mathbf{r})TE_n}$$

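Here $$$\rho_{water}(\mathbf{r})$$$ and $$$\rho_{fat}(\mathbf{r})$$$ are the water and fat signals; the fat spectrum is modeled with $$$M$$$ peaks of relative amplitude $$$\beta_i$$$ and frequency offset $$$\delta_i$$$ (expressed in Hz in this equation); $$$R_2^*(\mathbf{r})$$$ is the transverse relaxation rate; and $$$\Delta B_0(\mathbf{r})$$$ is the field inhomogeneity (field map) in Hz. Several methods [1-3] improve the estimate by jointly estimating a regularized map of the $$$B_0$$$ inhomogeneity, the $$$R_2^*$$$ map and the water/fat signals. Smoothness penalties are typically used to further regularize this nonlinear optimization. To better model the variation and possible non-smoothness of the field map, we extend the signal model and propose a data-driven approach to further improve the estimation.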
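
To make the signal model concrete, the following minimal NumPy sketch simulates one voxel with the six-peak fat spectrum listed in the Method section and, for a known field map and $$$R_2^*$$$, recovers $$$\rho_{water}$$$ and $$$\rho_{fat}$$$ by linear least squares. Once $$$\Delta B_0$$$ and $$$R_2^*$$$ are fixed, the model is linear in the two signals, so this is the inner subproblem that field-map estimation schemes must solve. The 3T field strength, the echo times, the fat-fraction definition and all function names are illustrative assumptions, not the authors' implementation.

```python
import numpy as np

# Six-peak fat spectrum from the Method section (ppm relative to water).
FAT_PPM = np.array([-3.80, -3.40, -2.60, -1.94, -0.39, 0.60])
FAT_AMP = np.array([0.0870, 0.6930, 0.1280, 0.0040, 0.0390, 0.0480])
GAMMA_BAR_HZ_PER_T = 42.577478e6  # proton gyromagnetic ratio / (2*pi)


def fat_offsets_hz(b0_tesla):
    """Convert the fat peak shifts from ppm to Hz at field strength b0_tesla."""
    return FAT_PPM * 1e-6 * GAMMA_BAR_HZ_PER_T * b0_tesla


def design_matrix(te, b0_tesla, r2star, delta_b0_hz):
    """Columns map (rho_water, rho_fat) to the multi-echo signal at echo times te (s)."""
    # Fat spectrum sum_i beta_i exp(j 2 pi delta_i TE_n), one value per echo.
    fat_phasor = np.exp(2j * np.pi * np.outer(te, fat_offsets_hz(b0_tesla))) @ FAT_AMP
    # Shared decay and off-resonance term exp(-R2* TE_n + j 2 pi dB0 TE_n).
    decay = np.exp(-r2star * te + 2j * np.pi * delta_b0_hz * te)
    return np.column_stack([decay, fat_phasor * decay])


def simulate(rho_w, rho_f, te, b0_tesla, r2star, delta_b0_hz):
    """Noise-free signal S(TE_n) of one voxel under the multi-peak Dixon model."""
    return design_matrix(te, b0_tesla, r2star, delta_b0_hz) @ np.array([rho_w, rho_f])


def fit_water_fat(signal, te, b0_tesla, r2star, delta_b0_hz):
    """Least-squares (rho_water, rho_fat) and residual norm for known dB0 and R2*."""
    a = design_matrix(te, b0_tesla, r2star, delta_b0_hz)
    rho, *_ = np.linalg.lstsq(a, signal, rcond=None)
    residual = np.linalg.norm(signal - a @ rho)
    return rho, residual


# Usage (assumed protocol): six echoes at 3T, a 70 % water / 30 % fat voxel.
te = 1.2e-3 + 1.0e-3 * np.arange(6)
s = simulate(0.7, 0.3, te, 3.0, r2star=50.0, delta_b0_hz=40.0)
rho, res = fit_water_fat(s, te, 3.0, r2star=50.0, delta_b0_hz=40.0)
ff = abs(rho[1]) / (abs(rho[0]) + abs(rho[1]))  # one common fat-fraction definition
```

With noise-free data and the correct field map the fit is exact and the residual is essentially zero; with a wrong $$$\Delta B_0$$$ the residual grows, which is why a fitting residual is informative as an extra input contrast.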

Method

Because the water/fat signals, the $$$R_2^*$$$ map and the field map are locally smooth and share redundant structural similarity, they can be modeled with a sparse representation learned by a deep network. We designed and trained a convolutional neural network [4] with ResNet-style bypasses [5] and residual convolution-deconvolution layers [6]. The network takes 16x16 multi-contrast patches as input (as shown in the figure: the initial estimates of the water/fat signals, $$$R_2^*$$$, $$$B_0$$$ and the residual signal) and generates a predicted water/fat fraction patch, trained to approximate the ground-truth estimate.
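
The abstract does not give the layer configuration, so the following is only a minimal NumPy sketch of a patch-to-patch residual CNN of the kind described. It assumes five input channels (water, fat, $$$R_2^*$$$, $$$B_0$$$, residual), 16 feature maps, one residual block, a 1x1 output layer and a sigmoid that keeps the predicted fat fraction in [0, 1]. The deconvolution layers of [6] are not reproduced, the weights are random rather than trained, and all names and sizes are hypothetical.

```python
import numpy as np


def conv2d_same(x, w, b):
    """'Same' 2D convolution (cross-correlation, as in CNNs) with zero padding.

    x: (C_in, H, W) input, w: (C_out, C_in, k, k) kernel with odd k, b: (C_out,) bias.
    """
    c_out, _, k, _ = w.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    h, wd = x.shape[1:]
    out = np.zeros((c_out, h, wd))
    for dy in range(k):
        for dx in range(k):
            window = xp[:, dy:dy + h, dx:dx + wd]  # (C_in, H, W) shifted view
            out += np.einsum("oc,chw->ohw", w[:, :, dy, dx], window)
    return out + b[:, None, None]


def relu(x):
    return np.maximum(x, 0.0)


def init_params(c_in=5, features=16, seed=0):
    """Random (untrained) weights for a hypothetical small residual CNN."""
    rng = np.random.default_rng(seed)

    def conv(c_o, c_i, k):
        scale = np.sqrt(2.0 / (c_i * k * k))  # He initialisation
        return rng.normal(0.0, scale, (c_o, c_i, k, k)), np.zeros(c_o)

    return {"head": conv(features, c_in, 3),
            "res1": conv(features, features, 3),
            "res2": conv(features, features, 3),
            "tail": conv(1, features, 1)}


def predict_ff_patch(x, p):
    """Map a (C_in, 16, 16) multi-contrast stack to a (16, 16) fat-fraction patch."""
    h = relu(conv2d_same(x, *p["head"]))
    # ResNet-style bypass: the block learns a correction that is added to h.
    r = relu(conv2d_same(h, *p["res1"]))
    h = relu(h + conv2d_same(r, *p["res2"]))
    logits = conv2d_same(h, *p["tail"])[0]
    return 1.0 / (1.0 + np.exp(-logits))  # sigmoid keeps FF in [0, 1]
```

With these illustrative sizes the network has 5,393 parameters (736 + 2,320 + 2,320 + 17), the same order of magnitude as the ~10000 parameters quoted in the Discussion.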

The implementation uses the commonly used six-peak fat spectrum, with chemical shifts δ = [-3.80, -3.40, -2.60, -1.94, -0.39, 0.60] ppm relative to water and relative amplitudes β = [0.0870, 0.6930, 0.1280, 0.0040, 0.0390, 0.0480]. The method first computes the regularized least-squares estimate [1] and the corresponding fitting residual [3]. From each 2D image, a stack of multi-contrast patches is then extracted at every position. The trained model maps each stack to an improved water/fat fraction patch, and the final water/fat fraction image is synthesized from all the patches. The main framework is shown in Figure 1.
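
The patch extraction and synthesis steps above can be sketched as follows. The abstract does not say how overlapping predictions are combined, so this sketch assumes simple averaging of all patches covering a pixel; the stride, the `model` callable and the function names are hypothetical.

```python
import numpy as np


def extract_patches(img, size=16, stride=1):
    """Return all size x size patches of a (C, H, W) stack and their top-left corners."""
    _, h, w = img.shape
    corners = [(y, x) for y in range(0, h - size + 1, stride)
               for x in range(0, w - size + 1, stride)]
    patches = np.stack([img[:, y:y + size, x:x + size] for y, x in corners])
    return patches, corners


def synthesize(patches, corners, shape, size=16):
    """Average overlapping (size x size) patches into an image of the given (H, W) shape."""
    acc = np.zeros(shape)
    count = np.zeros(shape)
    for p, (y, x) in zip(patches, corners):
        acc[y:y + size, x:x + size] += p
        count[y:y + size, x:x + size] += 1
    return acc / np.maximum(count, 1)  # pixels never covered stay 0


def fat_fraction_image(stack, model, size=16, stride=1):
    """Hypothetical end-to-end step: patches -> model -> averaged FF image."""
    patches, corners = extract_patches(stack, size, stride)
    ff_patches = [model(p) for p in patches]  # model: (C, size, size) -> (size, size)
    return synthesize(ff_patches, corners, stack.shape[1:], size)
```

Passing a model that simply returns one input channel reproduces that channel exactly, which is a quick sanity check that extraction and synthesis are consistent; a larger stride trades some averaging for fewer forward passes.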

Dataset and Results

In 2012, the ISMRM organized a challenge [7] on water/fat separation, providing real multi-echo MRI cases from different anatomical regions at 1.5T and 3T. The ground-truth water/fat fraction was obtained from an expanded dataset with additional echo times (typically 12-16 echoes) using a computationally expensive iterative graph-cut algorithm. We used separate, non-overlapping subsets of the data for training, validation and testing of the model.

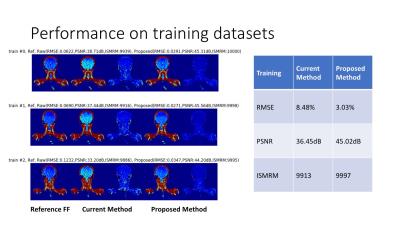

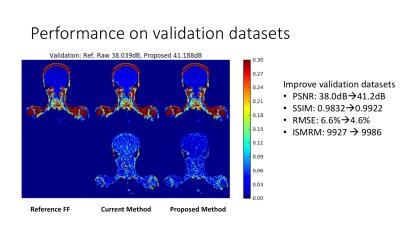

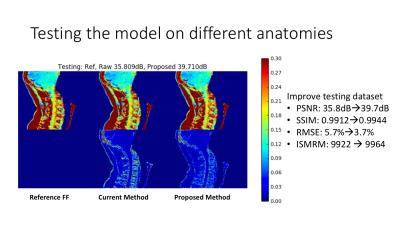

Figures 2-4 show results on several challenging cases and the overall metrics. Compared with some of the winning methods of the 2012 challenge, the proposed method achieves superior water/fat fraction estimates on several metrics, including PSNR, SSIM, RMSE and the ISMRM challenge score (which counts a fat-fraction error within ±0.1 as correct).
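
For reference, the metrics named above can be computed roughly as in the NumPy sketch below. It assumes fat fractions in [0, 1] (so the PSNR peak value is 1) and implements the challenge score only as the fraction of (optionally masked) pixels with an error within ±0.1; the official challenge scoring may scale or mask this differently. SSIM is omitted here; scikit-image provides it as `skimage.metrics.structural_similarity`. Function names are hypothetical.

```python
import numpy as np


def rmse(pred, ref):
    """Root-mean-square fat-fraction error."""
    return float(np.sqrt(np.mean((pred - ref) ** 2)))


def psnr(pred, ref, peak=1.0):
    """PSNR in dB, assuming FF in [0, 1] so the peak is 1 (infinite if pred == ref)."""
    mse = np.mean((pred - ref) ** 2)
    return float(10.0 * np.log10(peak ** 2 / mse))


def tolerance_score(pred, ref, mask=None, tol=0.1):
    """Fraction of (masked) pixels whose fat-fraction error is within +-tol."""
    ok = np.abs(pred - ref) <= tol
    if mask is not None:
        ok = ok[mask]
    return float(np.mean(ok))
```

For example, a prediction that is off by 0.05 everywhere has an RMSE of 0.05, a PSNR of about 26 dB, and a tolerance score of 1.0.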

Discussion

Overfitting is mitigated because the number of training samples (~10000 patches per image, and more with flip/rotation augmentation) exceeds the model complexity (~10000 parameters). Related work [8] also showed that similar SRCNN models generalize well for local image enhancement (training on 91 images performed similarly to training on millions of images), because the training data contain enough variability among local patches. In particular, our model generalizes well to the ISMRM challenge test data, which include different anatomical regions, and performs well on data not seen during training.
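
The flip/rotation augmentation mentioned above is not specified in detail. A common choice, assumed here, is the eight rotations and flips of a square patch (the dihedral group), applied identically to the input stack and the target fat-fraction patch; if all eight are used, the ~10000 patches per image become ~80000 training pairs. The function names are hypothetical.

```python
import numpy as np


def dihedral_variants(a):
    """The 8 rotation/flip variants of an array, acting on its last two (spatial) axes."""
    out = []
    for k in range(4):
        rot = np.rot90(a, k, axes=(-2, -1))  # rotate by k * 90 degrees
        out.extend([rot, np.flip(rot, axis=-1)])  # with and without a horizontal flip
    return out


def augment_pair(x, y):
    """Apply the same 8 spatial transforms to an input x (C, H, W) and target y (H, W)."""
    return list(zip(dihedral_variants(x), dihedral_variants(y)))
```

Transforming input and target together matters: a rotated input paired with an unrotated target would teach the network a wrong spatial mapping.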

The proposed method can also enable faster acquisitions with significantly reduced scan time, since it achieves robust and accurate estimation from far fewer echoes than the 12-16 used to compute the ground truth. It is also computationally efficient, requiring only a forward pass of the neural network followed by patch synthesis.

Acknowledgements

We acknowledge GE Healthcare for supporting this work.

References

- [1] Huh, W., J. A. Fessler, and A. A. Samsonov. "Water-fat decomposition with regularized field map." Proc. Intl. Soc. Mag. Reson. Med. 16 (2008).

- [2] Hernando, Diego, et al. "Robust water/fat separation in the presence of large field inhomogeneities using a graph cut algorithm." Magnetic Resonance in Medicine 63.1 (2010): 79-90.

- [3] Cui, Chen, et al. "Fat water decomposition using globally optimal surface estimation (GOOSE) algorithm." Magnetic Resonance in Medicine 73.3 (2015): 1289-1299.

- [4] Krizhevsky, Alex, Ilya Sutskever, and Geoffrey E. Hinton. "ImageNet classification with deep convolutional neural networks." Advances in Neural Information Processing Systems. 2012.

- [5] He, Kaiming, et al. "Deep residual learning for image recognition." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016.

- [6] Masci, Jonathan, et al. "Stacked convolutional auto-encoders for hierarchical feature extraction." International Conference on Artificial Neural Networks. Springer Berlin Heidelberg, 2011.

- [7] ISMRM 2012 challenge on water/fat separation. http://challenge.ismrm.org/node/18

- [8] Dong, Chao, et al. "Learning a deep convolutional network for image super-resolution." European Conference on Computer Vision. Springer International Publishing, 2014.
