4344

Automated quality control of MR images using deep convolutional neural networks

1 Department of Radiology, Stanford University, Stanford, CA, United States

Synopsis

The complexity of MR scanners results in significant variability in the quality of images produced, in some cases requiring clinical expertise to recognize suboptimal images. Deep convolutional neural networks are an emerging technique with potential clinical applications. We investigated whether deep convolutional neural networks could be trained for three MR image quality control classification tasks: 1) recognize adequacy of MR elastography wave propagation, 2) determine whether rectal gas susceptibility artifact obscuring the prostate is present, and 3) determine scan technique in unlabeled images. Using the Inception v4 deep convolutional neural network, we found high classification accuracy for two of these three problems, suggesting the potential to automate certain aspects of MR quality control.

Purpose

Acquiring MR images is complex and requires significant operator training, which limits adoption of the technology. While much complexity has been removed from the daily operation of CT and radiography, the MRI operator still has to make significant decisions about acquisition parameters. Systematic evaluation of MR image quality has shown suboptimal images in up to 78% of 1. These quality issues may not be readily apparent, requiring clinical expertise to recognize and often leading to additional scans and patient callbacks. Recently, significant attention has been drawn to potential diagnostic applications of deep convolutional neural networks, with some initial success for simple classification 2. We aim to evaluate whether supervised deep learning techniques can automate the quality control process and provide feedback to scanner operators before patient departure. We investigated this with three experiments: 1) determining the diagnostic adequacy of elastography wave images, 2) detecting rectal gas susceptibility artifact in prostate diffusion-weighted images, and 3) determining the MR sequence for incorrectly labeled or unlabeled images, as is sometimes encountered during import of outside exams.

Methods

The Google TensorFlow framework was used to implement an Inception v4 convolutional neural network architecture pretrained on ImageNet 3. Images were obtained and new classification categories were defined for three applications:

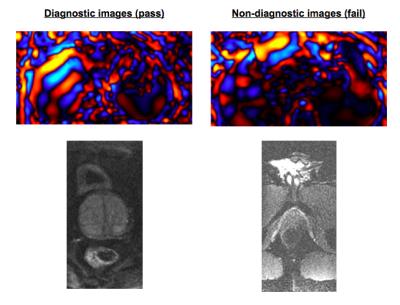

Adequacy of elastography waveforms: Color wave images were collected from 94 General Electric MR Touch acquisitions and categorized according to adequacy for diagnostic purposes by a board-eligible radiologist experienced in elastography.

Presence of rectal gas susceptibility artifact in prostate diffusion-weighted images: Small field-of-view diffusion-weighted images from 382 prostate MR exams were collected and categorized by a board-eligible radiologist for presence or absence of susceptibility artifact from rectal gas limiting visualization of the prostate.

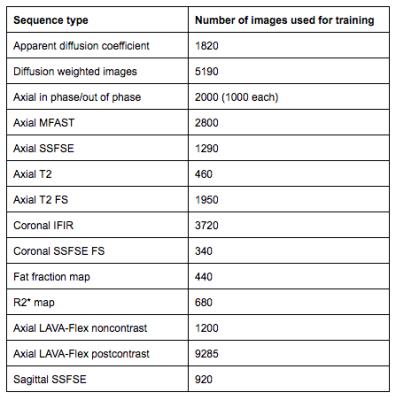

Image type recognition for labeling: MR images were obtained for different pulse sequence categories frequently acquired at our institution. The image totals and categories are detailed in figure 1.

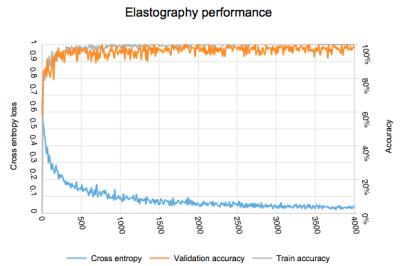

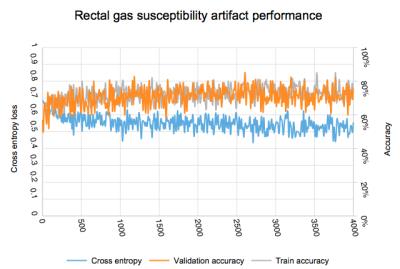

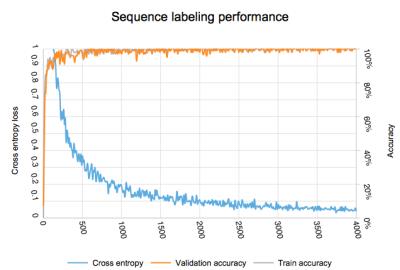

Each image set was divided randomly into three subsets for training, validation and testing. Transfer learning was used to retrain the deep convolutional neural network for the new categories over 4000 steps. Fine tuning of the neural network configuration was performed through learning rate optimization. Accuracy and loss were recorded at each training step for each application, and the ROC area under the curve was calculated.
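The random three-way division can be sketched as follows. This is a minimal illustration, not the code used in this work: the function name `split_dataset`, the 80/10/10 fractions and the fixed seed are all assumptions, since the abstract does not state the proportions or whether the split was made per image or per exam.

```python
import random


def split_dataset(items, fractions=(0.8, 0.1, 0.1), seed=0):
    """Shuffle items and cut them into training, validation and test subsets.

    The fractions are hypothetical; the abstract does not report them.
    """
    shuffled = list(items)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_train = int(fractions[0] * len(shuffled))
    n_val = int(fractions[1] * len(shuffled))
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # everything left over
    return train, val, test


# Example with 100 placeholder image identifiers.
train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))
```

If several images come from the same exam, passing exam identifiers rather than image identifiers to such a function would keep images from one exam out of both the training and test subsets.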

Results

After completion of the computationally intensive task of transfer learning for each category, the actual image classification could be performed within seconds.
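The contrast between costly retraining and fast classification can be illustrated with a small sketch. It assumes that transfer learning here means fitting a new softmax output layer on feature vectors produced by the frozen, pretrained network, which the abstract does not state explicitly. The pure-Python code below is not the TensorFlow implementation used in this work; `train_head`, `predict`, the learning rate and the toy feature vectors are hypothetical.

```python
import math
import random


def softmax(logits):
    """Convert logits to probabilities, shifted by the max for stability."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_head(features, labels, n_classes, steps=4000, lr=0.1, seed=0):
    """Fit a softmax layer on fixed feature vectors by stochastic gradient descent."""
    rng = random.Random(seed)
    dim = len(features[0])
    weights = [[0.0] * dim for _ in range(n_classes)]
    biases = [0.0] * n_classes
    for _ in range(steps):
        i = rng.randrange(len(features))  # one random example per step
        x, y = features[i], labels[i]
        logits = [sum(w * v for w, v in zip(weights[c], x)) + biases[c]
                  for c in range(n_classes)]
        probs = softmax(logits)
        for c in range(n_classes):
            # Gradient of the cross entropy with respect to logit c.
            grad = probs[c] - (1.0 if c == y else 0.0)
            biases[c] -= lr * grad
            for j in range(dim):
                weights[c][j] -= lr * grad * x[j]
    return weights, biases


def predict(weights, biases, x):
    """Classify one feature vector with a single pass through the trained layer."""
    logits = [sum(w * v for w, v in zip(row, x)) + b
              for row, b in zip(weights, biases)]
    return max(range(len(logits)), key=logits.__getitem__)


# Toy stand-ins for features from the frozen network (made-up values).
features = [[0.0, 1.0], [0.1, 0.9], [1.0, 0.0], [0.9, 0.2]]
labels = [0, 0, 1, 1]
weights, biases = train_head(features, labels, n_classes=2)
print([predict(weights, biases, x) for x in features])
```

Training loops over thousands of update steps, while `predict` needs only one weighted sum per class, which is consistent with classification taking seconds once retraining is complete.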

Adequacy of elastography waveforms: 59 exams with 11,800 images were classified as adequate and 35 exams with 7,210 images were classified as inadequate. The evolution of accuracy and loss is shown in figure 3. Final accuracy at training step 4000 was 98% with cross entropy of 0.038. Calculated ROC area under the curve was 0.92.

Presence of rectal gas susceptibility artifact in prostate diffusion-weighted images: 270 exams with 24,222 images were classified as adequate and 149 exams with 13,368 images were classified as inadequate. The evolution of accuracy and loss is shown in figure 4. Final accuracy at training step 4000 was 68.2% with cross entropy of 0.55. Calculated ROC area under the curve was 0.62.

Image type recognition for labeling: The evolution of accuracy and loss is shown in figure 5. Final accuracy at training step 4000 was 100% with cross entropy of 0.023. Calculated ROC area under the curve was 1.0.
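The three reported metrics can be computed as in the following minimal sketch for a binary task. The abstract does not describe how the metrics were calculated, so the function names, the 0.5 decision threshold and the example scores are assumptions. The area under the ROC curve is computed here as the fraction of positive/negative pairs that the classifier ranks correctly, with ties counted as half.

```python
import math


def accuracy(labels, probs, threshold=0.5):
    """Fraction of cases where the thresholded score matches the label."""
    hits = sum((p >= threshold) == bool(y) for y, p in zip(labels, probs))
    return hits / len(labels)


def cross_entropy(labels, probs, eps=1e-12):
    """Mean binary cross entropy; probs are predicted probabilities of class 1."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total -= math.log(p) if y else math.log(1.0 - p)
    return total / len(labels)


def roc_auc(labels, probs):
    """Area under the ROC curve via pairwise ranking of positives against negatives."""
    pos = [p for y, p in zip(labels, probs) if y]
    neg = [p for y, p in zip(labels, probs) if not y]
    wins = sum(1.0 if a > b else 0.5 if a == b else 0.0
               for a in pos for b in neg)
    return wins / (len(pos) * len(neg))


# Made-up labels (1 = adequate) and scores, for illustration only.
labels = [1, 1, 0, 0]
probs = [0.9, 0.4, 0.6, 0.1]
print(accuracy(labels, probs), cross_entropy(labels, probs), roc_auc(labels, probs))
```

Accuracy depends on the chosen threshold and on class balance, whereas the area under the curve does not. For the rectal gas task, always predicting the majority class would already score about 64% at the image level (24,222 of 37,590 images), provided the test subset preserved the overall class proportions.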

Discussion

Adequacy of elastography waveforms: The high accuracy and area under curve results demonstrate that deep learning may aid quality control for MR elastography images. Inadequate wave propagations can be automatically detected with high accuracy and the MR operator could be immediately alerted that a repeat examination is required.

Presence of rectal gas susceptibility artifact in prostate diffusion-weighted images: Detection of clinically significant rectal gas susceptibility artifact proved challenging in our experiments. We postulate that this is due to the small region affected by this type of artifact. Other types of machine learning algorithms may be better suited for this task.

Image type recognition for labeling: Given the significant differences in features in this image set, perfect classification is expected. Using this process, uniform labeling could be instituted for clinical images, which would simplify image management in a heterogeneous environment.

While some of these early results are encouraging, further investigation will be needed to determine how these classifiers could be implemented in a production environment.

Conclusion

Automated quality control of MR images using deep convolutional neural networks is feasible for several applications.

Acknowledgements

No acknowledgement found.

References

1. Koller CJ, Eatough JP, Mountford PJ, Frain G. A survey of MRI quality assurance programmes. Br J Radiol. 2006;79(943):592-596. doi:10.1259/bjr/67655734.

2. Yan Z, Zhan Y, Peng Z, et al. Bodypart Recognition Using Multi-stage Deep Learning. Inf Process Med Imaging. 2015;24:449-461. http://www.ncbi.nlm.nih.gov/pubmed/26221694. Accessed November 6, 2016.

3. Szegedy C, Ioffe S, Vanhoucke V, Alemi A. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. February 2016. http://arxiv.org/abs/1602.07261. Accessed November 6, 2016.

Figures