3936

Optical Motion Monitoring for Abdominal and Lung Imaging

1Department of Radiology, Stanford University, Stanford, CA, United States, 2Department of Electrical Engineering, Stanford University, Stanford, CA, United States

Synopsis

The accuracy with which MR image artifacts from motion can be compensated or corrected depends on the ability to precisely measure patient motion. Optical cameras are a compelling solution because they simplify patient setup and do not negatively impact MR data acquisition. However, most efforts have focused on integrating and applying optical cameras for neuroimaging. In this work, a setup and video-processing methods were developed for lung and abdominal MRI, where the acquisition is especially sensitive to motion. Waveforms from the proposed solution correlated highly with those from the conventional approaches of respiratory bellows and MRI navigators, and comparable image quality was achieved.

Purpose

The utility of MRI is limited by its sensitivity to patient motion. Many acquisition and reconstruction methods have been developed to address motion. The accuracy of these techniques relies on the ability to precisely measure motion. Conventional techniques include MR navigators, respiratory bellows, and cardiac leads. An alternative is an optical camera, which can measure patient motion with high spatiotemporal resolution and with no impact on the MRI data acquisition. Furthermore, cameras can provide contact-free physiological monitoring [1]. However, efforts to leverage optical cameras have focused heavily on neuroimaging, where the same patient setup is used consistently and the camera view can be easily positioned between the head-coil rungs. Therefore, the purpose of this work is to adapt optical cameras as an external motion-monitoring device for lung and abdominal imaging and to evaluate the performance of this approach.

Method

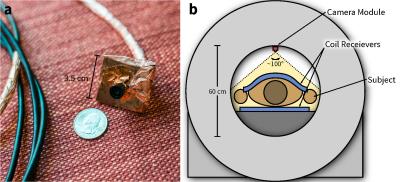

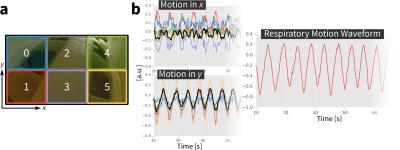

A 100° wide-angle camera module (ELP, Ailipu Technology, Guangdong, China) was modified to be compatible with the MRI bore (Figure 1). The camera (1280×720 images, 30 fps) was linked via USB to a computer. Because the camera viewed both static objects (e.g., the scanner bore) and dynamic objects (e.g., the coil on the subject and the subject themselves), each frame of the recorded video was divided into smaller blocks (Figure 2a). In each block, localized linear translations were estimated (Figure 2b). Because the MRI acquisition may induce noise in the video, the derived waveforms were first filtered using a median filter. Because the low lighting of many setups may decrease video SNR, a low-pass filter (cutoff at 2.4 Hz) was also applied. The dominant motion waveform was then extracted with a clustering algorithm [2].
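The block-wise processing described above can be illustrated with a minimal sketch. It is not the implementation used in this work: the translation estimator (phase correlation), the 2×3 block grid, the median-filter length, and the brick-wall FFT low-pass are all assumptions chosen for brevity, and the function names are hypothetical. Only the 30 fps frame rate and the 2.4 Hz cutoff come from the text. The clustering step that selects the dominant waveform is omitted.

```python
import numpy as np

def block_shifts(prev, curr, grid=(2, 3)):
    """Estimate one integer (dy, dx) translation per block between two
    grayscale frames using phase correlation (an assumed estimator)."""
    rows, cols = grid                      # assumed layout of the blocks
    bh, bw = prev.shape[0] // rows, prev.shape[1] // cols
    shifts = np.zeros((rows, cols, 2))
    for r in range(rows):
        for c in range(cols):
            a = prev[r * bh:(r + 1) * bh, c * bw:(c + 1) * bw].astype(float)
            b = curr[r * bh:(r + 1) * bh, c * bw:(c + 1) * bw].astype(float)
            # Normalized cross-power spectrum; its inverse FFT peaks at the shift.
            cross = np.fft.fft2(b) * np.conj(np.fft.fft2(a))
            cross /= np.abs(cross) + 1e-12
            corr = np.fft.ifft2(cross).real
            dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
            # Map wrapped peak positions to signed shifts.
            if dy > bh // 2:
                dy -= bh
            if dx > bw // 2:
                dx -= bw
            shifts[r, c] = (dy, dx)
    return shifts

def median_filter(x, size=5):
    """Sliding-window median (odd window length) to suppress impulsive noise."""
    pad = size // 2
    xp = np.pad(np.asarray(x, dtype=float), pad, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(xp, size)
    return np.median(windows, axis=-1)

def lowpass(x, fs=30.0, cutoff=2.4):
    """Zero all frequency components above `cutoff` Hz (brick-wall stand-in
    for whatever low-pass filter design was actually used)."""
    spec = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spec[freqs > cutoff] = 0
    return np.fft.irfft(spec, n=len(x))
```

Applying `block_shifts` to consecutive frames yields one displacement time series per block; each series would then pass through `median_filter` and `lowpass` before any clustering.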

Three male volunteers were scanned in a 1.5T scanner (GE Signa EXCITE) with an 8-channel cardiac coil. Two volumetric acquisitions were performed on each subject in random order: lung and abdominal imaging. Lung images were acquired using an ultra-short-echo-time (UTE) sequence (TE of 32 µs) with a three-dimensional cones k-space trajectory [3] and scan durations of 4.6–5.4 min. Because each cone interleaf sampled the k-space center, a self-navigator was extracted [4,5]. Abdomen images were acquired using an RF-spoiled GRE sequence that was modified to include Butterfly navigators to measure three-dimensional translations [6]. This sequence used Cartesian variable-density sampling and radial view-ordering [7], with scan durations of 1.9–2.1 min. During both scans, video from the optical camera and waveforms from the respiratory bellows were recorded simultaneously. These multiple sources enabled evaluation of the waveforms measured with the optical camera. To evaluate the robustness of the video recordings to low-light settings, no light sources were used in the scanner bore.

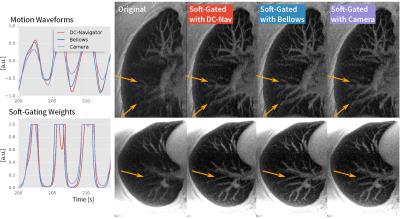

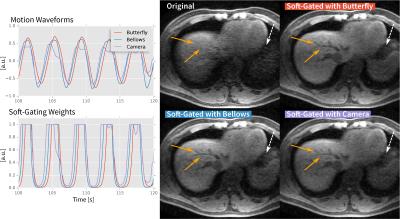

Video images were processed using OpenCV in Python [8]. MR images were reconstructed using parallel imaging and compressed sensing with BART [9]. Soft-gating weights derived from the motion waveforms were applied to suppress image artifacts from motion [7,10].
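For illustration, soft-gating weights can be derived from a motion waveform roughly as sketched below. The exact weighting function and parameters used in this work are not stated here, so this sketch assumes one common form: samples within a threshold of a reference respiratory position keep full weight, and samples beyond it are attenuated exponentially. The function name, reference percentile, threshold percentile, and decay rate `alpha` are all hypothetical.

```python
import numpy as np

def soft_gating_weights(waveform, ref_pct=5.0, threshold_pct=25.0, alpha=1.0):
    """Map a respiratory waveform to per-sample weights in (0, 1].

    Assumed form: w = 1 if dev <= tau, else exp(-alpha * (dev - tau)),
    where dev is the normalized distance from a reference position.
    """
    d = np.asarray(waveform, dtype=float)
    ref = np.percentile(d, ref_pct)            # assumed reference position
    dev = np.abs(d - ref) / (np.std(d) + 1e-12)  # normalized deviation
    tau = np.percentile(dev, threshold_pct)    # assumed acceptance threshold
    return np.where(dev > tau, np.exp(-alpha * (dev - tau)), 1.0)

# Example: a synthetic breathing-like waveform sampled at 30 Hz.
t = np.arange(0, 20, 1 / 30.0)
wave = np.sin(2 * np.pi * 0.25 * t)
weights = soft_gating_weights(wave)
```

In a soft-gated reconstruction, weights of this kind typically scale the data-consistency term so that samples acquired far from the reference position contribute less, rather than being discarded outright.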

Results

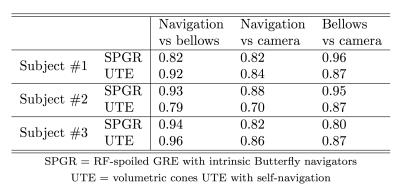

Despite low lighting, motion waveforms derived from the optical camera were comparable to the motion measured with the MR navigators and respiratory bellows. All waveforms were highly correlated (≥0.7) with p<0.001 (Table 1). Example respiratory motion waveforms from all sources are shown in Figures 3 and 4. Because the waveforms were all highly correlated, the computed soft-gating weights were also highly correlated. As a result, the soft-gated image reconstructions were comparable in image quality. Incorporating the soft-gating weights derived from the optical camera data sharpened pulmonary vessels in the lung images (Figure 3) and recovered hepatic vessels in the abdominal images (Figure 4).
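A comparison of this kind requires placing waveforms from different sources on a common time base, since the camera, bellows, and navigators are generally sampled at different rates. The following sketch assumes linear interpolation onto a shared grid followed by a Pearson correlation coefficient; the function name, the 10 Hz comparison rate, and the choice of Pearson correlation are assumptions, not details taken from this work. The p-value calculation is omitted.

```python
import numpy as np

def waveform_correlation(t_a, a, t_b, b, fs=10.0):
    """Pearson correlation of two waveforms after resampling both onto a
    common time grid covering their overlapping interval."""
    t0 = max(t_a[0], t_b[0])
    t1 = min(t_a[-1], t_b[-1])
    t = np.arange(t0, t1, 1.0 / fs)        # assumed common sampling grid
    a_i = np.interp(t, t_a, a)             # linear interpolation
    b_i = np.interp(t, t_b, b)
    return float(np.corrcoef(a_i, b_i)[0, 1])

# Example: the same 0.25 Hz motion seen by two devices with different
# sampling rates, gains, and offsets.
t_cam = np.arange(0, 10, 1 / 30.0)
t_bel = np.arange(0, 10, 1 / 25.0)
cam = np.sin(2 * np.pi * 0.25 * t_cam)
bel = 2.0 * np.sin(2 * np.pi * 0.25 * t_bel) + 1.0
r = waveform_correlation(t_cam, cam, t_bel, bel)
```

Because the correlation coefficient is invariant to gain and offset, it suits devices that report motion in different, uncalibrated units.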

Discussion

Preliminary results suggest that an optical camera can replace conventional approaches. Furthermore, images from the camera have the potential to provide additional information for improving MR image quality. Respiratory bellows produce a single measurement for each time point. MR navigators provide more accurate measurements, but their resolution and accuracy come at the cost of additional scan time. Optical cameras capture entire images at high frame rates, and these images can be further processed to measure bulk patient motion. To demonstrate feasibility, images were divided into only 6 blocks. The videos can be processed with more divisions to provide better-localized motion estimates, or with more sophisticated methods to estimate motion fields. Future work will include evaluating the proposed method for different patient ages and sizes, which require different setups.

Conclusion

A method to measure respiratory motion with an optical camera has been developed and demonstrated to yield comparable results to conventional approaches of respiratory bellows and MR navigators. The proposed approach has the potential to simplify patient setup and to provide an independent source of data to improve the robustness and accuracy of MRI.

Acknowledgements

NIH R01-EB009690, NIH R01-EB019241, NIH P41-EB015891, Tashia and John Morgridge Faculty Scholars Fund, and GE Healthcare.

References

[1] Maclaren J., Aksoy M., Bammer R. Contact-free physiological monitoring using a markerless optical system. Magn Reson Med 2015;74:571–577.

[2] Zhang T., Cheng JY., Chen Y., Nishimura DG., Pauly JM., Vasanawala SS. Robust self-navigated body MRI using dense coil arrays. Magn Reson Med 2016;76:197–205.

[3] Carl M., Bydder GM., Du J. UTE imaging with simultaneous water and fat signal suppression using a time-efficient multispoke inversion recovery pulse sequence. Magn Reson Med 2016;76:577–582.

[4] Brau AC., Brittain JH. Generalized self-navigated motion detection technique: preliminary investigation in abdominal imaging. Magn Reson Med 2006;55:263–270.

[5] Larson AC., White RD., Laub G., McVeigh ER., Li D., Simonetti OP. Self-gated cardiac cine MRI. Magn Reson Med 2004;51:93–102.

[6] Cheng JY., Alley MT., Cunningham CH., Vasanawala SS., Pauly JM., Lustig M. Nonrigid motion correction in 3D using autofocusing with localized linear translations. Magn Reson Med 2012;68:1785–1797.

[7] Cheng JY., Zhang T., Ruangwattanapaisarn N., Alley MT., Uecker M., Pauly JM., et al. Free-breathing pediatric MRI with nonrigid motion correction and acceleration. J Magn Reson Imaging 2015;42:407–420.

[8] http://opencv.org

[9] Uecker M., Ong F., Tamir JI., Bahri D., Virtue P., Cheng JY., et al. Berkeley Advanced Reconstruction Toolbox. In: Proceedings of the 23rd Annual Meeting of ISMRM, Toronto, Ontario, Canada, 2015. (abstract 2486).

[10] Johnson KM., Block WF., Reeder SB., Samsonov A. Improved least squares MR image reconstruction using estimates of k-Space data consistency. Magn Reson Med 2012;67:1600–1608.

Figures