3929

PET motion correction by co-registering multiple MR contrasts in simultaneous brain MR-PET

1Monash Biomedical Imaging, Monash University, Melbourne, Australia, 2Monash Medical Centre, Clayton, Australia, 3Forschungszentrum Jülich GmbH, Institute of Neuroscience and Medicine, Jülich, Germany, 4Department of Electrical and Computer Systems Engineering, Monash University, Melbourne, Australia, 5Australian Research Council Centre of Excellence for Integrative Brain Function, Monash University, Melbourne, Australia, 6Monash Institute of Cognitive and Clinical Neuroscience, Monash University, Melbourne, Australia

Synopsis

Head movement is a major issue in dynamic brain PET imaging. The introduction of simultaneous MR-PET scanners has created new opportunities to use MR information for PET motion correction. Here we present a novel method based on routinely acquired MRI sequences. Multi-contrast MR images are co-registered to extract motion parameters, which are then used to guide PET image reconstruction. Results on both phantom and human data provide evidence that this method can significantly enhance image contrast and reduce motion artefacts in brain PET images without altering the MR protocol.

Introduction

Simultaneous MR-PET enables multi-parametric imaging of structural, functional and metabolic changes in the human brain. A dynamic brain MR-PET scan can take more than one hour to acquire, and head movement can significantly degrade PET image quality. MR-guided PET motion correction has therefore been investigated. For instance, EPI images from MRI have been used to correct head movement in PET data [1]. Sparsely sampled MR motion navigator echoes have also been inserted between MR sequences, on average 3 minutes apart [2]. One major consideration in using MR to aid PET motion correction is to avoid significantly reducing the time available for MR image acquisition. We therefore propose a motion correction method for PET brain images based on existing, simultaneously acquired MRI sequences. Structural, functional and diffusion MRI scans are co-registered to extract motion parameters, which are subsequently used to correct motion artefacts in the PET data. The method is demonstrated to significantly improve PET image quality in both phantom and human MR-PET scans.

Methods

Data acquisition:

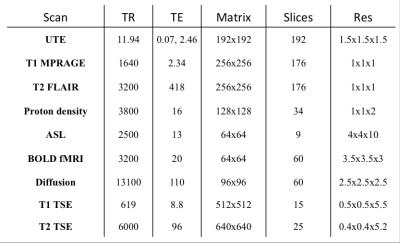

The Iida brain phantom [3] and a healthy human subject were scanned on a Siemens Biograph mMR equipped with a 20-channel head and neck coil. The human subject scan was approved by the Monash University human ethics committee. PET list-mode data were acquired continuously for 60 minutes (dose ~37 MBq for the phantom and ~110 MBq for the human subject). Simultaneously, multiple MR scans were collected as shown in Table 1. Head movements were introduced during the dynamic fMRI and diffusion scans as well as during the structural scans, for both the phantom and volunteer studies.

Motion parameter extraction:

The T2 FLAIR image was chosen as the reference. EPI distortion was corrected with an opposite phase-encoding scan using FSL Topup [4]. Brain extraction was performed first. All scans were then resampled using the information stored in the T2 qform matrix, which is available at the scanner. Next, the structural T1 and PD scans were registered to the reference using FSL FLIRT [4] with 6 degrees of freedom. For the dynamic MRI scans (i.e. BOLD fMRI, diffusion and ASL), all volumes were initially registered to the first volume of their series, using MCFLIRT [4] for BOLD fMRI and ASL, and EDDY [4] for DWI. BOLD fMRI and ASL registration was improved using white matter boundaries obtained from the T2 image using FEAT [4]. Motion parameters, including translation, rotation and mean displacement, were obtained from the co-registration.
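The mean displacement between two rigid-body poses can be illustrated with a short sketch. It assumes that "mean displacement" corresponds to the RMS deviation reported by FSL tools such as MCFLIRT, i.e. the root-mean-square displacement of points inside a sphere; the abstract does not state the exact definition. The function names, the 80 mm sphere radius and the sphere centre at the origin are assumptions chosen for illustration only.

```python
import math

def rigid_inverse(T):
    """Invert a 4x4 rigid-body matrix (rotation + translation), given as nested lists."""
    R = [[T[j][i] for j in range(3)] for i in range(3)]  # transpose of the rotation block
    t = [-sum(R[i][k] * T[k][3] for k in range(3)) for i in range(3)]
    return [R[0] + [t[0]], R[1] + [t[1]], R[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]

def matmul4(A, B):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def rms_deviation(T1, T2, radius_mm=80.0, centre=(0.0, 0.0, 0.0)):
    """RMS displacement (mm) between poses T1 and T2 over a sphere.

    Assumed formula: d^2 = R^2/5 * trace(A^T A) + |t + A c|^2,
    where [A | t] is the top 3x4 block of T2 * inv(T1) - I.
    radius_mm and centre are illustrative assumptions.
    """
    M = matmul4(T2, rigid_inverse(T1))
    A = [[M[i][j] - (1.0 if i == j else 0.0) for j in range(3)] for i in range(3)]
    t = [M[i][3] for i in range(3)]
    # Displacement of the sphere centre itself
    tc = [t[i] + sum(A[i][j] * centre[j] for j in range(3)) for i in range(3)]
    trace_AtA = sum(A[i][j] ** 2 for i in range(3) for j in range(3))
    return math.sqrt(radius_mm ** 2 * trace_AtA / 5.0 + sum(v * v for v in tc))

# Usage: a pure translation of (3, 4, 0) mm displaces every point by 5 mm.
identity = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
shift = [[1, 0, 0, 3], [0, 1, 0, 4], [0, 0, 1, 0], [0, 0, 0, 1]]
print(rms_deviation(identity, shift))  # 5.0
```

A single scalar of this kind summarises translation and rotation together, which makes it convenient to compare against a fixed threshold in millimetres.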

Motion corrected PET image reconstruction:

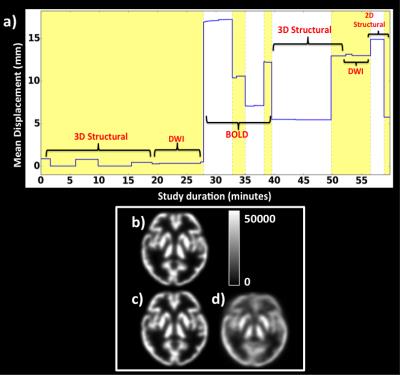

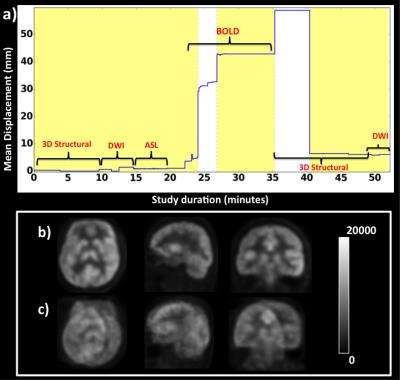

PET list-mode data were divided into multiple frames based on the mean displacement calculated from MR: whenever the mean displacement changed by more than 2 mm, a new PET frame was started (e.g. Fig. 1a). The PET image of each frame was then reconstructed with OP-OSEM (24 subsets, 3 iterations, with point spread function correction). The frames were then corrected for motion using the MR-derived motion parameters. Finally, a 60-minute PET image was obtained by combining the individual motion-corrected PET frames.
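The framing rule can be sketched as follows. This is a minimal illustration under stated assumptions, not the reconstruction pipeline itself: the displacement is assumed to be compared with its value at the start of the current frame, and the final image is assumed to be a duration-weighted mean of frames that have already been aligned to the reference (decay correction is ignored). The function names `split_frames` and `combine_frames` and the sample values are hypothetical.

```python
def split_frames(times_s, displacement_mm, scan_end_s, threshold_mm=2.0):
    """Return (start, end) times of PET frames.

    A new frame starts whenever the MR-derived mean displacement differs
    by more than threshold_mm from its value at the start of the current
    frame (assumed interpretation of the 2 mm rule).
    """
    if not times_s or len(times_s) != len(displacement_mm):
        raise ValueError("times and displacements must be non-empty and equal in length")
    frames = []
    start, ref = times_s[0], displacement_mm[0]
    for t, d in zip(times_s[1:], displacement_mm[1:]):
        if abs(d - ref) > threshold_mm:
            frames.append((start, t))  # close the current frame
            start, ref = t, d          # open a new frame at the new pose
    frames.append((start, scan_end_s))
    return frames

def combine_frames(aligned_frames, durations_s):
    """Duration-weighted mean of motion-corrected frames (flat voxel lists)."""
    total = sum(durations_s)
    n_voxels = len(aligned_frames[0])
    return [sum(f[i] * w for f, w in zip(aligned_frames, durations_s)) / total
            for i in range(n_voxels)]

# Usage with made-up displacement samples taken every 60 s
times = [0, 60, 120, 180, 240]
disp = [0.0, 0.5, 3.1, 3.4, 0.2]
print(split_frames(times, disp, scan_end_s=300))
# [(0, 120), (120, 240), (240, 300)]
```

Weighting by frame duration keeps long, motion-free frames from being diluted by short frames acquired between movements; other weightings are possible and the abstract does not specify one.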

Results

Visual inspection revealed that motion correction improved the PET image quality significantly in both the phantom and in-vivo datasets. Figure 1 shows the results for the brain phantom. In the plot of the mean displacement derived from the MR images (Fig. 1a), alternating yellow and white bands mark the PET frames, with a new frame formed whenever the mean displacement changed by more than 2 mm. A motion-free reference PET image is shown (Fig. 1b), together with a 60-minute motion-corrected PET image (Fig. 1c) that shows higher grey matter/white matter contrast and less blurring than the non-motion-corrected image (Fig. 1d). The volunteer results corroborate these findings, with the motion-corrected image (Fig. 2b) showing significantly reduced image artefacts compared with the non-motion-corrected PET image (Fig. 2c).

Discussion and conclusion

Results from both the brain phantom and the in-vivo PET data demonstrate the feasibility of using multiple MR images to derive PET motion correction. The proposed method was robust to differences in image contrast, resolution and field-of-view orientation, and to image artefacts (e.g. in EPI). The extracted motion parameters provide an effective means of correcting motion artefacts in dynamic PET image reconstruction. Overall, the approach offers good motion sampling and correction without changing the MR acquisition protocol, which may be useful for clinical and research use of simultaneous MR-PET scanning. The method is based entirely on FSL [4] and is therefore readily available to the community. In the future we plan to extend the method to include intra-scan motion navigator echoes in structural MRI sequences.

Acknowledgements

We acknowledge Thomas Close, Jakub Baran, Jason Bradley and Alexandra Carey for their help in facilitating this research.

References

1. M. G. Ullisch, J. J. Scheins, C. Weirich, E. R. Kops, A. Celik, L. Tellmann, T. Stöcker, H. Herzog, and N. J. Shah, “MR-Based PET Motion Correction Procedure for Simultaneous MR-PET Neuroimaging of Human Brain,” PLoS One, vol. 7, no. 11, p. e48149, Nov. 2012.

2. S. H. Keller, C. Hansen, C. Hansen, F. L. Andersen, C. Ladefoged, C. Svarer, A. Kjaer, L. Hojgaard, I. Law, O. M. Henriksen, and A. E. Hansen, “Motion correction in simultaneous PET/MR brain imaging using sparsely sampled MR navigators: a clinically feasible tool,” EJNMMI Phys., vol. 2, no. 1, p. 14, Dec. 2015.

3. H. Iida, Y. Hori, K. Ishida, E. Imabayashi, H. Matsuda, M. Takahashi, H. Maruno, A. Yamamoto, K. Koshino, J. Enmi, S. Iguchi, T. Moriguchi, H. Kawashima, and T. Zeniya, “Three-dimensional brain phantom containing bone and grey matter structures with a realistic head contour,” Ann. Nucl. Med., vol. 27, no. 1, pp. 25–36, Jan. 2013.

4. FSL, http://fsl.fmrib.ox.ac.uk/fsl/

Figures