2351

Deep Cross-Modal Feature Learning and Fusion for Early Dementia Diagnosis

Department of Radiology and BRIC, University of North Carolina at Chapel Hill, Chapel Hill, NC, United States

Synopsis

Studies have shown that neuroimaging data (e.g., MRI, PET) and genetic data (e.g., SNP) are associated with Alzheimer’s disease (AD). However, building an accurate AD diagnosis model from these data is challenging because they are heterogeneous and high-dimensional. We therefore first use region-of-interest (ROI) based features and deep feature learning to reduce the dimensionality of the neuroimaging and SNP data, respectively. We then propose a deep cross-modal feature learning and fusion framework to fuse the high-level features of these data. Experimental results show that our method using MRI+PET+SNP data outperforms the compared methods.

Introduction

Alzheimer's disease (AD) is the most common form of dementia [1]. Detecting its prodromal stage, mild cognitive impairment (MCI), is highly desirable in practice, as is distinguishing the MCI subtypes: progressive MCI (pMCI), which progresses to AD within a certain period of time, and stable MCI (sMCI), which remains MCI over that period. Neuroimaging techniques such as magnetic resonance imaging (MRI), which provides brain structural information, and positron emission tomography (PET), which provides brain metabolic information, have been used successfully to investigate disease progression across the spectrum from cognitively normal (CN) to AD subjects. In addition, studies have shown that genetic data such as single nucleotide polymorphisms (SNPs) are associated with neuroimaging data [2,3] and with AD [4]. These neuroimaging and SNP data are heterogeneous and provide complementary phenotypic and genotypic information about the disease. We aim to devise accurate MCI/CN and pMCI/sMCI classification models from these multimodal data by proposing a novel deep-learning-based cross-modal feature learning and fusion framework.

Methods

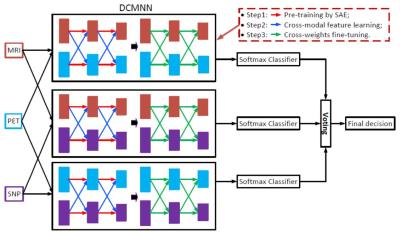

It is challenging to fuse heterogeneous multimodal data for disease diagnosis. For instance, we use region-of-interest (ROI) based features for the MRI and PET data, which are continuous and low-dimensional (93 features), whereas the SNP data are discrete and high-dimensional (3,123 features). Simply concatenating these data would result in an inaccurate detection model, as SNP data in their raw form are ineffective for disease diagnosis. Moreover, because the data are heterogeneous, fusing them directly is also problematic. To address these issues, we propose a deep learning framework that learns, for each modality, high-level features (HLFs, i.e., more abstract representations related to the disease labels) and cross-modal features (CMFs, i.e., abstract representations learned from the view of another modality), and fuses them through the activation function at each node of the network. Fig. 1 illustrates our framework: a disease label is predicted from each possible pair of modalities by the proposed deep cross-modal neural network (DCMNN), and the final result is obtained by majority voting. The DCMNN involves four main steps. First, we use a stacked auto-encoder (SAE) to pre-train part of the network (red arrows) to learn the HLFs (i.e., the output of each layer of the neural network) for each modality. Second, using the learned HLFs as guidance, we perform cross-modal feature learning (blue arrows) to learn the CMFs of each modality from the lower-level features of the other modalities (i.e., other views). After these two steps, we obtain the projection matrices for all layers of the network, where the HLFs and CMFs computed at each node are fused via an activation function. Third, we fine-tune all projection matrices (green arrows), using the learned matrices as initialization and a softmax layer as the classifier output. Finally, the decision is made by fusing the multiple classification results via majority voting.

Results

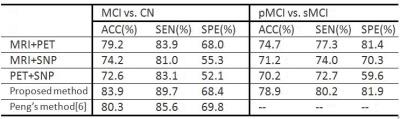
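Fig. 2 reports accuracy (ACC), sensitivity (SEN) and specificity (SPE). As a reference for how these three quantities relate, the following minimal Python sketch computes them from binary predictions. The function name `diagnostic_metrics` and the choice of MCI (for MCI/CN) or pMCI (for pMCI/sMCI) as the positive class are assumptions for illustration, and the toy labels are not data from this study.

```python
def diagnostic_metrics(y_true, y_pred, positive):
    """Return (ACC, SEN, SPE) for a binary classification task.

    `positive` is the label treated as the positive class; using "MCI"
    (MCI/CN) or "pMCI" (pMCI/sMCI) is an assumption for illustration.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    # Accuracy: fraction of all subjects classified correctly.
    acc = (tp + tn) / len(pairs)
    # Sensitivity: fraction of positive subjects correctly detected.
    sen = tp / (tp + fn) if tp + fn else float("nan")
    # Specificity: fraction of negative subjects correctly rejected.
    spe = tn / (tn + fp) if tn + fp else float("nan")
    return acc, sen, spe


# Toy example (hypothetical labels, not study data).
acc, sen, spe = diagnostic_metrics(
    ["pMCI", "pMCI", "sMCI", "sMCI"],
    ["pMCI", "sMCI", "sMCI", "sMCI"],
    positive="pMCI",
)
print(acc, sen, spe)  # 0.75 0.5 1.0
```

Sensitivity and specificity are reported alongside accuracy because the classes are imbalanced (e.g., 39 pMCI vs. 54 sMCI), so accuracy alone can hide poor detection of the smaller class.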

We evaluated our method on a subset of the ADNI dataset [5], using all subjects with complete MRI, PET and SNP data: 93 MCI subjects (39 pMCI and 54 sMCI) and 47 CN subjects. We reduced the dimensionality of the SNP data by training a deep neural network that takes the SNP data as input and the MRI data as the target; thus, the final input feature dimension for each modality in Fig. 1 is 93. Fig. 2 compares the performance of the proposed method using different modalities, in terms of accuracy (ACC), sensitivity (SEN) and specificity (SPE), for the MCI/CN and pMCI/sMCI classification tasks. As Fig. 2 shows, the proposed method using all three modalities (MRI+PET+SNP) outperforms every combination of two modalities, achieving ACCs of 83.9% and 78.9% for the MCI/CN and pMCI/sMCI classification tasks, respectively. This indicates the advantage of combining phenotype and genotype data for detecting MCI and pMCI. Our method also outperforms Peng’s method [6], a state-of-the-art method that used the same subset of ADNI data.

Discussion and Conclusion

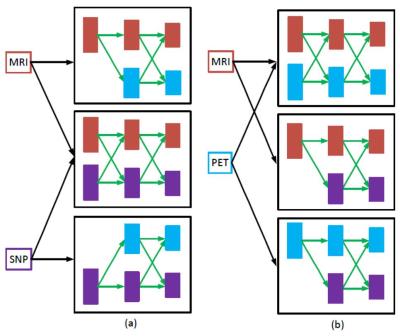

We present a novel deep learning framework, DCMNN, for MCI/CN and pMCI/sMCI classification using heterogeneous multimodal neuroimaging and genetic data. Our framework learns HLFs and CMFs, i.e., higher-level abstractions related to the disease labels derived from the original modality and from the other modalities, through SAE and deep cross-modal feature learning, respectively. Experimental results show that our method using three modalities (MRI+PET+SNP) outperforms the other methods. In future work, we will include test samples with missing PET and/or SNP data, for which the CMFs computed from the available modalities can replace the HLFs of the missing modality via the learned deep cross-modal parameters. Fig. 3 shows the flow diagram for a test sample with one missing modality.
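The framework summarized above can be sketched, very roughly, as a forward computation. The minimal pure-Python sketch below assumes one specific form for a fusion that the text describes only qualitatively: at each node, the within-modality (HLF) term and the cross-modal (CMF) term are summed before a sigmoid activation. Under the same assumption it shows how the CMF from an available modality could stand in for a missing one, and how the pairwise predictions are combined by majority voting. All function names, the sigmoid choice and the weight shapes are hypothetical; the SAE pre-training, cross-modal learning and fine-tuning that would supply the weights are not shown.

```python
import math
from collections import Counter
from itertools import combinations


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    """Multiply a matrix W (list of rows) by a vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def fused_layer(h_self, h_other, W_self, W_cross, b):
    """One layer for one modality of a pair (hypothetical form).

    W_self @ h_self is the within-modality (HLF) term and W_cross @ h_other
    is the cross-modal (CMF) term; both are summed inside the activation,
    so the two kinds of features are fused at every node.
    """
    hlf = matvec(W_self, h_self)
    cmf = matvec(W_cross, h_other)
    return [sigmoid(a + c + bi) for a, c, bi in zip(hlf, cmf, b)]


def cross_modal_only(h_other, W_cross, b):
    """Stand-in for a missing modality: CMF computed from an available one."""
    return [sigmoid(c + bi) for c, bi in zip(matvec(W_cross, h_other), b)]


def majority_vote(labels):
    """Most frequent label among the pairwise classifiers' predictions."""
    return Counter(labels).most_common(1)[0][0]


# Three modalities yield three pairwise classifiers.
PAIRS = list(combinations(["MRI", "PET", "SNP"], 2))

# Toy usage with 2-dimensional features and identity weights (hypothetical).
I2 = [[1.0, 0.0], [0.0, 1.0]]
mri, snp = [0.2, -0.4], [1.0, 0.5]
h_mri = fused_layer(mri, snp, I2, I2, [0.0, 0.0])  # MRI fused with SNP view
h_pet = cross_modal_only(mri, I2, [0.0, 0.0])      # PET missing: CMF from MRI
print(majority_vote(["MCI", "CN", "MCI"]))         # MCI
```

With three modalities there are three pairwise classifiers, so majority voting on a binary task can never tie.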

Acknowledgements

This work was supported in part by NIH grants EB006733, EB008374, EB009634, MH100217, AG041721 and AG042599.

References

1. Alzheimer’s Association. 2013 Alzheimer’s disease facts and figures. Alzheimer’s & Dementia. 2013;9:208–245.

2. Lin D, Cao H, Calhoun VD, Wang YP. Sparse models for correlative and integrative analysis of imaging and genetic data. J Neurosci Methods. 2014;237:69–78.

3. Zhang Z, Huang H, Shen D. Integrative analysis of multi-dimensional imaging genomics data for Alzheimer’s disease prediction. Front Aging Neurosci. 2014;6:260.

4. Gaiteri C, Mostafavi S, Honey CJ, De Jager PL, Bennett DA. Genetic variants in Alzheimer disease: molecular and brain network approaches. Nat Rev Neurol. 2016:1–15.

5. Alzheimer’s Disease Neuroimaging Initiative (ADNI). http://adni.loni.usc.edu

6. Peng J, An L, Zhu X, Jin Y, Shen D. Structured sparse kernel learning for imaging genetics based AD diagnosis. MICCAI 2016, Athens, Greece, Oct. 17–21, 2016.
