2087

Region-adaptive Deformable Registration for MRI/CT Pelvic Images via Bi-directional Image Synthesis

¹School of Automation, Northwestern Polytechnical University, Xi'an, People's Republic of China; ²Department of Radiology and BRIC, University of North Carolina at Chapel Hill, Chapel Hill, NC, United States

Synopsis

Registering pelvic CT and MRI makes it possible to propagate accurate delineations of pelvic organs (prostate, bladder and rectum) from MRI to CT, since accurate organ labels are difficult to obtain directly from CT because of its low soft-tissue contrast. We propose to first reduce the appearance gap between modalities by performing image synthesis in both directions (MRI to CT and CT to MRI), which provides more anatomical information for guiding the registration. A hierarchical region-adaptive registration framework then exploits the most distinctive anatomical information in each modality to guide accurate MRI/CT deformable registration.

Purpose

In prostate cancer radiation therapy (PCRT), CT is essential for dose planning. However, CT has low soft-tissue contrast, which makes it difficult to segment the pelvic organs, i.e., the prostate, bladder and rectum. Some patients are therefore also scanned with MRI, whose high soft-tissue contrast allows better delineation of the pelvic organs and can potentially help identify cancer regions. In this setting, MRI and CT must be accurately co-registered so that pelvic delineations can be transferred from MRI to CT to facilitate PCRT.

Method

Registration of pelvic MRI and CT faces two main challenges: 1) the highly non-linear appearance relationship between MRI and CT; and 2) large local deformations caused by bladder filling and emptying, rectal movement, and bowel gas. Deformable registration is therefore required to estimate these large local deformations. However, because MRI and CT differ so strongly in appearance, it is difficult to define a common similarity metric to guide the registration. To address this, we propose to first use image synthesis to eliminate the appearance difference between modalities. We then propose a hierarchical region-adaptive deformable registration that exploits the most distinctive anatomical information in each modality to improve the registration.

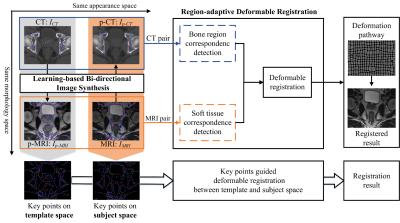

The framework of our proposed method is shown in Fig. 1. Specifically, to exploit the complementary anatomical information of both modalities, we first perform image synthesis in both directions, synthesizing not only CT from MRI but also MRI from CT. To improve the quality of the synthesized images, we propose a novel Multi-target Patch-wise Regression Forest (MP-RF), built on the classical random forest [1,2] and combined with an auto-context model [3,4], to learn the appearance mapping.
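The sketch below illustrates, on a toy 1-D signal, the two ingredients named above as we read them: patch-wise multi-target prediction, where the regressor at each position outputs a whole target patch instead of a single centre value and overlapping predictions are averaged, and an auto-context cascade, where each later stage also receives a context patch from the previous stage's estimate. The regressors are arbitrary hypothetical callables standing in for trained forests, and the overlap averaging and edge replication are our assumptions, not details given in the abstract.

```python
from typing import Callable, List, Optional, Sequence

# Hypothetical regressor type: (appearance patch, context patch or None) -> target patch.
Regressor = Callable[[List[float], Optional[List[float]]], List[float]]


def extract_patch(signal: Sequence[float], centre: int, radius: int) -> List[float]:
    """Return the patch of width 2*radius+1 around `centre`, replicating edge values."""
    n = len(signal)
    return [float(signal[min(max(j, 0), n - 1)]) for j in range(centre - radius, centre + radius + 1)]


def predict_patchwise(
    source: Sequence[float],
    regressor: Regressor,
    radius: int = 1,
    context: Optional[Sequence[float]] = None,
) -> List[float]:
    """Multi-target (patch-wise) prediction with overlap averaging.

    At every position the regressor outputs a whole target patch; each output
    sample is the mean of all predicted patches that cover it.
    """
    n = len(source)
    size = 2 * radius + 1
    sums = [0.0] * n
    counts = [0] * n
    for i in range(n):
        patch = extract_patch(source, i, radius)
        ctx = extract_patch(context, i, radius) if context is not None else None
        pred = regressor(patch, ctx)
        if len(pred) != size:
            raise ValueError("regressor must return 2*radius+1 values")
        # Scatter the predicted patch back, skipping offsets outside the signal.
        for k, value in enumerate(pred):
            j = i - radius + k
            if 0 <= j < n:
                sums[j] += value
                counts[j] += 1
    return [s / c for s, c in zip(sums, counts)]


def auto_context(source: Sequence[float], stages: List[Regressor], radius: int = 1) -> List[float]:
    """Auto-context cascade: stage 0 sees appearance only; each later stage
    also receives a context patch taken from the previous stage's estimate."""
    estimate: Optional[List[float]] = None
    for stage in stages:
        estimate = predict_patchwise(source, stage, radius, context=estimate)
    if estimate is None:
        raise ValueError("at least one stage is required")
    return estimate
```

For example, `auto_context([1, 2, 3, 4], [lambda p, c: p, lambda p, c: [2.0 * v for v in c]])` returns `[2.0, 4.0, 6.0, 8.0]`: the first stage copies the input, and the second stage acts only on its context, i.e., the first stage's estimate. In a real synthesis pipeline each stage would be a trained forest operating on 3-D patches.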

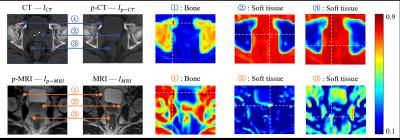

After bi-directional image synthesis, we obtain a pseudo-CT (p-CT) $$$I_{p-CT}$$$, which has an appearance similar to the real CT $$$I_{CT}$$$ but shares the same structure space as the original MRI $$$I_{MRI}$$$. We also obtain a pseudo-MRI (p-MRI) $$$I_{p-MRI}$$$, which has an appearance similar to the real MRI $$$I_{MRI}$$$ but shares the same structure space as the original CT $$$I_{CT}$$$. CT has high contrast in bone, whereas MRI has high contrast in soft tissues, e.g., the prostate, bladder and rectum. Accurate bone correspondences are therefore easy to find in CT, and accurate soft-tissue correspondences are easy to find in MRI, as shown in Fig. 2. We thus propose a key-point guided region-adaptive registration in which key points on bone are extracted from the CT pair ($$$I_{CT}$$$ and $$$I_{p-CT}$$$), while key points on soft tissue are selected from the MRI pair ($$$I_{MRI}$$$ and $$$I_{p-MRI}$$$). In this way, we make good use of the most distinctive anatomical information in each modality: accurate correspondences obtained in one modality help the other modality in regions where correspondence detection is difficult. A hierarchical key-point selection and matching mechanism is then developed to guide the deformable registration. To further improve robustness, especially when MRI and CT differ by large local deformations, we incorporate the symmetric diffeomorphic scheme [5,6] into our region-adaptive registration, which also preserves inverse consistency.
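The region-adaptive idea can be sketched as follows, assuming each correspondence maps a key point in MRI space (on $$$I_{p-CT}$$$ or $$$I_{MRI}$$$) to its match in CT space (on $$$I_{CT}$$$ or $$$I_{p-MRI}$$$), and that a hypothetical tissue label ("bone" or "soft") is available for each key point. Bone matches are taken from the CT pair and soft-tissue matches from the MRI pair. The dense displacement is then approximated by a Gaussian-weighted average of key-point displacements; this interpolation is a simple stand-in we chose for illustration, not the hierarchical symmetric diffeomorphic registration described above.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]


def select_region_adaptive(
    ct_matches: Dict[Point, Point],
    mri_matches: Dict[Point, Point],
    tissue_of: Dict[Point, str],
) -> Dict[Point, Point]:
    """Combine correspondences region-adaptively (MRI-space point -> CT-space point).

    Bone key points are trusted from the CT pair (I_CT vs. I_p-CT), soft-tissue
    key points from the MRI pair (I_MRI vs. I_p-MRI); other labels are ignored.
    The "bone"/"soft" labels are hypothetical inputs, e.g. from a coarse segmentation.
    """
    selected: Dict[Point, Point] = {}
    for p, q in ct_matches.items():
        if tissue_of.get(p) == "bone":
            selected[p] = q
    for p, q in mri_matches.items():
        if tissue_of.get(p) == "soft":
            selected[p] = q
    return selected


def interpolate_displacement(matches: Dict[Point, Point], x: Point, sigma: float = 5.0) -> Point:
    """Gaussian-weighted average of key-point displacements evaluated at x.

    Illustrative stand-in for dense deformation estimation only.
    """
    wsum = dx = dy = 0.0
    for (px, py), (qx, qy) in matches.items():
        w = math.exp(-((x[0] - px) ** 2 + (x[1] - py) ** 2) / (2.0 * sigma ** 2))
        wsum += w
        dx += w * (qx - px)
        dy += w * (qy - py)
    if wsum == 0.0:
        return (0.0, 0.0)  # no key point has any influence at x
    return (dx / wsum, dy / wsum)
```

With a bone key point at (0, 0) displaced by (2, 0) in the CT pair and a soft-tissue key point at (10, 10) displaced by (0, 4) in the MRI pair, the midpoint (5, 5) receives equal weights and an interpolated displacement of (1, 2). The soft-tissue match from the CT pair and the bone match from the MRI pair are discarded.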

Results

We validated our proposed method on 20 subjects, each with a pair of CT and MRI scans. Since the quality of the synthesized images affects registration, we first evaluated our bi-directional image synthesis method (MP-RF). For CT synthesis from MRI, the PSNR is 32.04±0.89 dB, a 0.36 dB improvement over the traditional single-target regression forest (ST-RF). For MRI synthesis from CT, the PSNR is 25.87±0.89 dB, a 0.53 dB improvement over ST-RF. Adding the auto-context model further improves the PSNR to 34.03±0.82 dB and 26.52±0.78 dB, respectively.
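For reference, PSNR is $$$10\log_{10}(\mathrm{MAX}^2/\mathrm{MSE})$$$ in dB, where MAX is the peak intensity. The abstract does not state the intensity range used, so `max_val` below is an assumed parameter. The helper `mse_ratio_from_gain` (our addition) converts a PSNR gain into the implied MSE ratio at fixed MAX, which makes the reported gains easier to interpret.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], estimate: Sequence[float], max_val: float) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(max_val**2 / MSE)."""
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)


def mse_ratio_from_gain(gain_db: float) -> float:
    """MSE(new) / MSE(old) implied by a PSNR gain of gain_db, with max_val fixed."""
    return 10.0 ** (-gain_db / 10.0)
```

For a single image, a 0.36 dB gain corresponds to an MSE ratio of about 0.92 (roughly 8% lower MSE), and a 0.53 dB gain to about 0.885. Because the reported values are means over subjects, this conversion is only approximate.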

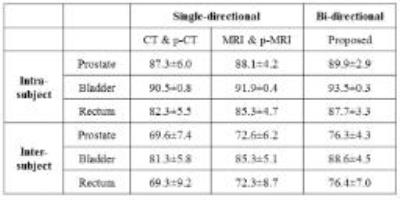

Table 1 shows the Dice Similarity Coefficient (DSC) after deformable registration. Here, we further compare single-directional and bi-directional image synthesis using the same registration module, i.e., our proposed hierarchical key-point guided deformable registration. Bi-directional image synthesis produces the best performance compared with single-directional image synthesis (where the registration is performed on either the CT pair or the MRI pair).
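The DSC between two segmentations A and B of the same organ is $$$2|A \cap B| / (|A| + |B|)$$$, ranging from 0 (no overlap) to 1 (perfect overlap). A minimal sketch on flattened label maps follows; the integer label values and the convention of returning 1.0 when both masks are empty are assumptions for illustration, and the abstract does not detail its evaluation protocol.

```python
from typing import Sequence


def dice(mask_a: Sequence[int], mask_b: Sequence[int], label: int = 1) -> float:
    """Dice similarity coefficient 2|A ∩ B| / (|A| + |B|) for one label value."""
    if len(mask_a) != len(mask_b):
        raise ValueError("label maps must have the same number of voxels")
    a = [v == label for v in mask_a]
    b = [v == label for v in mask_b]
    inter = sum(x and y for x, y in zip(a, b))
    total = sum(a) + sum(b)
    # Assumed convention: two empty masks are treated as perfect agreement.
    return 1.0 if total == 0 else 2.0 * inter / total
```

In a registration study, one would typically warp the MRI organ labels into CT space with the estimated deformation and compute `dice` per organ (prostate, bladder, rectum) against the CT reference labels.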

Discussion and Conclusion

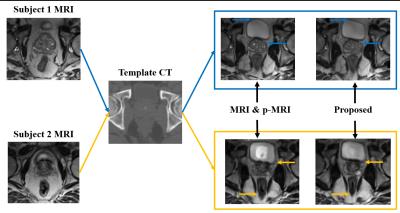

To better guide multimodal image registration, we proposed synthesizing images in both directions and then using the most distinctive information in each modality to guide the estimation of large local deformations. This differs substantially from conventional synthesis-based registration methods, which usually rely on single-directional image synthesis, e.g., synthesizing CT from MRI. We also tackle the challenging problem of synthesizing MRI from CT, in which CT, with its limited soft-tissue information, is used to predict MRI, which is rich in soft-tissue information. Finally, we proposed a novel hierarchical region-adaptive registration framework that uses the distinctive information in each modality to guide deformable registration. Experimental results show that our proposed method outperforms the state-of-the-art methods.

References

[1] Y. Amit and D. Geman, "Shape quantization and recognition with randomized trees," Neural Computation, vol. 9, pp. 1545-1588, 1997.

[2] A. Liaw and M. Wiener, "Classification and regression by randomForest," R News, vol. 2, pp. 18-22, 2002.

[3] Y. Gao, Y. Shao, J. Lian, A. Z. Wang, R. C. Chen, and D. Shen, "Accurate segmentation of CT male pelvic organs via regression-based deformable models and multi-task random forests," IEEE Transactions on Medical Imaging, vol. 35, pp. 1532-1543, 2016.

[4] P. Viola and M. J. Jones, "Robust real-time face detection," International Journal of Computer Vision, vol. 57, pp. 137-154, 2004.

[5] G. Wu, M. Kim, Q. Wang, and D. Shen, "S-HAMMER: Hierarchical attribute-guided, symmetric diffeomorphic registration for MR brain images," Human Brain Mapping, vol. 35, pp. 1044-1060, 2014.

[6] B. B. Avants, C. L. Epstein, M. Grossman, and J. C. Gee, "Symmetric diffeomorphic image registration with cross-correlation: evaluating automated labeling of elderly and neurodegenerative brain," Medical Image Analysis, vol. 12, pp. 26-41, 2008.
