1346

Eye Tracking System for Prostate Cancer Diagnosis Using Multi-Parametric MRI

1 Clinical Center, National Institutes of Health, Bethesda, MD, United States; 2 SZI, Children's National Health System, Washington, DC, United States; 3 Pediatrics, George Washington University, Washington, DC, United States; 4 NCI, National Institutes of Health, Bethesda, MD, United States; 5 CIT, National Institutes of Health, Bethesda, MD, United States; 6 Center for Research in Computer Vision, University of Central Florida, Orlando, FL, United States

Synopsis

Medical images have been studied with eye tracking systems from visual search and perception perspectives since the 1960s. However, the number of studies on multi-slice imaging is very limited because of technical challenges. We developed software to overcome these difficulties and to enable visual search/perception studies using multi-parametric MRI of prostate cancer. Multi-parametric MR images (T2w, DWI, ADC map, and DCE) were synchronized with the eye tracking system, and visual attention maps were successfully created for each image type from the gaze information. This is the first multi-parametric MR study using an eye tracking system.

Background

Eye (gaze) tracking has been used to study visual search on radiological images since the early 1960s [1,2]. Although eye tracking research on 2D (single-slice) radiographic images (x-ray) started more than half a century ago, only a limited number of studies have analyzed 3D (multi-slice) datasets, such as CT [3-10], CT colonography [11-13], PET/CT [14], and MR [3,15,16]. To the best of our knowledge, none of these studies provided participating radiologists with a realistic reading-room experience, such as adjusting window/level, zooming in/out, panning, measuring target length, and scrolling up/down with the mouse. One significant reason for the lack of multi-slice studies is technical complexity: 2D gaze information and multi-slice images must be synchronized on the order of tens of milliseconds [17]. Our group recently proposed a platform for 3D lung CT image analysis using eye gaze tracking technology; that study seamlessly integrated gaze information into automated image analysis tasks that are helpful for the radiologists' diagnostic decisions [18].
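
The synchronization requirement can be made concrete with a small sketch. Assuming the gaze samples and the viewer's slice-change events have already been time-stamped on a common clock (aligning the clocks of the two devices is not shown), each gaze sample can be attributed to the slice on screen at that moment by finding the most recent slice-change event at or before it. The function `slice_at` and its parameters are hypothetical and are not part of the authors' software.

```python
from bisect import bisect_right
from typing import List, Sequence


def slice_at(gaze_times_ms: Sequence[float],
             event_times_ms: Sequence[float],
             event_slices: Sequence[int],
             initial_slice: int = 0) -> List[int]:
    """Return the slice index on screen at each gaze time stamp.

    Hypothetical helper: event_times_ms must be sorted in ascending order,
    and event_slices[i] is the slice displayed from event_times_ms[i] onward.
    Both time series are assumed to share one clock.
    """
    shown = []
    for t in gaze_times_ms:
        # Number of slice-change events that happened at or before time t
        i = bisect_right(event_times_ms, t)
        shown.append(event_slices[i - 1] if i > 0 else initial_slice)
    return shown


if __name__ == "__main__":
    # Gaze sampled at 60 Hz (about 16.7 ms apart); scrolls at 20 ms and 55 ms
    gaze = [0.0, 16.7, 33.3, 50.0, 66.7]
    print(slice_at(gaze, [20.0, 55.0], [11, 12], initial_slice=10))
    # prints [10, 10, 11, 11, 12]
```

At 60 Hz, consecutive gaze samples are about 16.7 ms apart, so a clock offset of a few tens of milliseconds is enough to assign one or more samples to the wrong slice right after each scroll.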

Purpose

In this study, we introduce a novel platform that addresses the challenge of integrating eye tracking into the reading of multi-parametric prostate MR (mpMR) images by recording the radiologist's crucial interactions with the DICOM viewer: mouse scrolls/clicks, active slice number, user-defined window/level values, and the location and value of tumor measurements.
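
One way to record such interactions is a log with one CSV row per viewer event, time-stamped at millisecond resolution. The sketch below is illustrative only and is not the authors' MIPAV extension: the `ViewerEvent` class, the helper names, and the column names are hypothetical, and measurement and zoom/pan fields are left out for brevity.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import datetime
from typing import Iterable, Optional, TextIO


@dataclass
class ViewerEvent:
    """One logged interaction (hypothetical schema)."""
    time: str                       # system time, hh:mm:ss.sss
    event: str                      # e.g. "scroll", "click", "window_level"
    active_slice: int
    window: Optional[float] = None  # window width after the event, if changed
    level: Optional[float] = None   # window center after the event, if changed
    click_x: Optional[int] = None   # mouse-click location in image pixels
    click_y: Optional[int] = None


def timestamp(dt: datetime) -> str:
    """Format a wall-clock time with millisecond resolution (hh:mm:ss.sss)."""
    return dt.strftime("%H:%M:%S.") + f"{dt.microsecond // 1000:03d}"


def write_log(events: Iterable[ViewerEvent], stream: TextIO) -> None:
    """Write events as CSV rows; None values become empty cells."""
    writer = csv.DictWriter(stream, fieldnames=[f.name for f in fields(ViewerEvent)])
    writer.writeheader()
    for e in events:
        writer.writerow(asdict(e))


if __name__ == "__main__":
    buf = io.StringIO()
    t0 = datetime(2016, 1, 1, 10, 15, 30, 123456)
    write_log([ViewerEvent(timestamp(t0), "scroll", 12),
               ViewerEvent(timestamp(t0), "window_level", 12, window=400.0, level=40.0)],
              buf)
    print(buf.getvalue())
```

Writing unused fields as empty cells keeps every row aligned with the same header, so the file can be read back with any CSV reader and joined with the gaze stream by time stamp.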

Methods

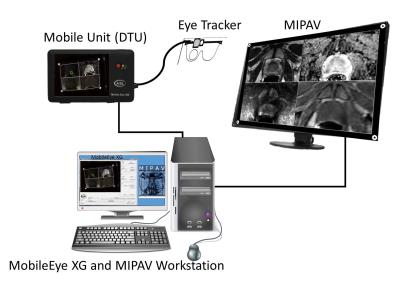

A goggles-type eye tracking system was used, and gaze information was collected with a commercial software package, MobileEye XG (ASL, Boston, MA). The system consisted of two cameras attached to the frame of the goggles and adjustable to fit different users (Figure 1). One camera monitored eye motion, and the second recorded the scene, at a data rate of 60 Hz.

Before each experiment, the system was calibrated by showing five numbered circles on the screen and asking the participant to look at the center of each circle. Once the MobileEye XG was calibrated, the experiment was started by recording the videos and running the custom-designed DICOM viewer software. The viewer is an extension of MIPAV (CIT, NIH, Bethesda, MD) and logs the crucial participant inputs together with system time stamps at a resolution on the order of one millisecond.
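
The calibration algorithm inside MobileEye XG is not described here, so the following is only a generic sketch of how a handful of fixated targets can define a mapping: it fits, by least squares, an affine map from pupil position in the eye-camera image to position in the scene-camera image, using the five circles as correspondences. Commercial systems may use higher-order mappings; the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def _det3(m: List[List[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system a @ x = b with Cramer's rule."""
    d = _det3(a)
    if abs(d) < 1e-12:
        raise ValueError("calibration points are degenerate (e.g. collinear)")
    solution = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]  # replace one column with the right-hand side
        solution.append(_det3(m) / d)
    return solution


def fit_affine(eye_pts: Sequence[Point], scene_pts: Sequence[Point]) -> List[List[float]]:
    """Least-squares affine map from eye-camera (ex, ey) to scene (sx, sy).

    Returns [[a, b, c], [d, e, f]] with sx = a*ex + b*ey + c and
    sy = d*ex + e*ey + f. Needs at least 3 non-collinear points.
    """
    rows = [(float(ex), float(ey), 1.0) for ex, ey in eye_pts]
    # Normal equations (A^T A) p = A^T s, shared by both output coordinates
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    coeffs = []
    for k in range(2):  # k = 0: scene x, k = 1: scene y
        atb = [sum(r[i] * s[k] for r, s in zip(rows, scene_pts)) for i in range(3)]
        coeffs.append(_solve3(ata, atb))
    return coeffs


def apply_affine(coeffs: List[List[float]], ex: float, ey: float) -> Point:
    """Map one eye-camera point into scene-camera coordinates."""
    (a, b, c), (d, e, f) = coeffs
    return (a * ex + b * ey + c, d * ex + e * ey + f)
```

With five targets and only three unknowns per output coordinate, the fit is overdetermined, so a single poorly fixated circle is averaged out rather than reproduced exactly.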

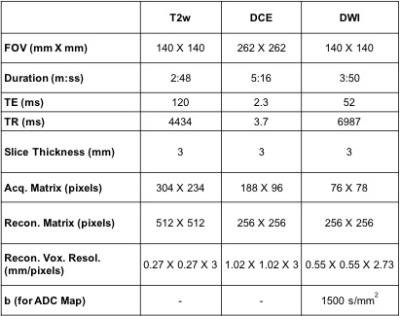

The raw gaze data and the scene video were transferred to a computer using a mobile transmit unit. The ASL+ monitor tracking software used four white circles to automatically detect the monitor in the scene video and to compute gaze locations on the viewed stimulus, i.e., the mpMR images (Table 1). DCE images were cropped because their FOV was twice that of the other images. DCE, ADC map, and DWI images were also oversampled to match the T2w images.
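
The monitor-tracking step can be illustrated with a standard planar homography. ASL's actual method is not described in this abstract; the sketch below assumes the centers of the four white circles have already been located in a scene-video frame and that their positions on the screen are known. With four correspondences, the eight unknowns of a homography (last entry fixed to 1) follow from an 8x8 linear system, and any gaze point in the frame can then be projected to screen coordinates. Function names and coordinates are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting for a square linear system."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate marker configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def homography(src: Sequence[Point], dst: Sequence[Point]) -> List[List[float]]:
    """3x3 homography (h33 fixed to 1) mapping four src points onto four dst points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u * (h31 x + h32 y + 1) = h11 x + h12 y + h13, rearranged to be linear in h
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = _solve(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def project(h: List[List[float]], x: float, y: float) -> Point:
    """Map a scene-video point (x, y) to screen coordinates."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)
```

Because the scene camera is mounted on the goggles and moves with the head, a mapping of this kind would have to be re-estimated for every video frame from the markers visible in that frame.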

In this study, the participating radiologist was not constrained by major psychological or environmental rules, such as reading duration or mouse control. All lights in the room were turned off during the experiment, and the heights of the monitor and chair were adjusted by the participants (Figure 2). Participants were also asked to narrate what they were seeing during the experiment, and their voices were recorded.

Results

Visual attention maps were created from the radiologist's gaze information on the mpMR images (Figure 3). All four image types were synchronized, and the DCE phase images for a given active slice were browsed using the mouse scroll wheel. The following data were recorded to a .csv file: system time (hh:mm:ss.sss), active slice number, measured length with start and end locations, location of mouse clicks, window/level values of each image, and zoom/pan (corners of the displayed image).

Discussion

This pilot study demonstrated an important technical advance for eye tracking research on complex medical images: with the proposed system, perception studies of complex reading-room tasks become feasible. Using the visual search maps, our group aims to develop a smart CAD system for prostate cancer. The study had some technical limitations. First, the scene camera recorded low-resolution videos (640x480), which reduced the accuracy of the gaze information. Second, gaze information on the stimulus data (images) was extracted using video processing, as the initial gaze information was collected on the scene video. These limitations will be addressed in future work. The proposed system will be made publicly available to help other researchers extend their research. Our group also aims to unify the eye tracker and viewer systems to simplify the workflow. Finally, PACS integration of the system will enable this platform to be used in routine radiology tasks.

Conclusion

In this novel study, an eye tracking platform was introduced to enable visual search and perception studies on complex mpMR images of prostate cancer cases. The radiologist's gaze information was successfully extracted from a multi-window MRI viewer.

References

1. Tuddenham, W. J., & Calvert, W. P. (1961). Visual Search Patterns in Roentgen Diagnosis. Radiology, 76(2), 255–256. http://doi.org/10.1148/76.2.255

2. Thomas, E. L., & Lansdown, E. L. (1963). Visual Search Patterns of Radiologists in Training. Radiology, 81(2), 288–292. http://doi.org/10.1148/81.2.288

3. Phillips, P. W. (2005). A software framework for diagnostic medical image perception with feedback, and a novel perception visualization technique. Proceedings of SPIE, 5749, 572–580. http://doi.org/10.1117/12.595365

4. Ellis, S. M., Hu, X., Dempere-Marco, L., Yang, G. Z., Wells, A. U., & Hansell, D. M. (2006). Thin-section CT of the lungs: Eye-tracking analysis of the visual approach to reading tiled and stacked display formats. European Journal of Radiology, 59(2), 257–264. http://doi.org/10.1016/j.ejrad.2006.05.006

5. Cooper, L., Gale, A., Saada, J., Gedela, S., Scott, H., & Toms, A. (2010). The assessment of stroke multidimensional CT and MR imaging using eye movement analysis: does modality preference enhance observer performance? Progress in Biomedical Optics and Imaging - Proceedings of SPIE, 7627, 76270B. http://doi.org/10.1117/12.843680

6. Matsumoto, H., Terao, Y., Yugeta, A., Fukuda, H., Emoto, M., Furubayashi, T., Ugawa, Y. (2011). Where do neurologists look when viewing brain CT images? An eye-tracking study involving stroke cases. PLoS ONE, 6(12). http://doi.org/10.1371/journal.pone.0028928

7. Drew, T., Vo, M. L., Olwal, A., Jacobson, F., Seltzer, S. E., & Wolfe, J. M. (2013). Scanners and drillers: characterizing expert visual search through volumetric images. Journal of Vision, 13(10), 1–13. http://doi.org/10.1167/13.10.3

8. Rubin, G. D., Roos, J. E., Tall, M., Harrawood, B., Bag, S., Ly, D. L., Roy Choudhury, K. (2015). Characterizing Search, Recognition, and Decision in the Detection of Lung Nodules on CT Scans: Elucidation with Eye Tracking. Radiology, 274(1), 276–286. http://doi.org/10.1148/radiol.14132918

9. Bertram, R., Kaakinen, J., Bensch, F., Helle, L., Lantto, E., Niemi, P., & Lundbom, N. (2016). Eye Movements of Radiologists Reflect Expertise in CT Study Interpretation: A Potential Tool to Measure Resident Development. Radiology, 0(0), 1–11. http://doi.org/10.1148/radiol.2016151255

10. Venjakob, A. C., Marnitz, T., Gomes, L., & Mello-Thoms, C. R. (2014). Does preference influence performance when reading different sizes of cranial computed tomography? Journal of Medical Imaging, 1(3), 035503. http://doi.org/10.1117/1.JMI.1.3.035503

11. Phillips, P., Boone, D., Mallett, S., Taylor, S. A., Altman, D. G., Manning, D., Halligan, S. (2013). Method for Tracking Eye Gaze during Interpretation of Endoluminal 3D CT Colonography: Technical Description and Proposed Metrics for Analysis. Radiology, 267(3), 924–931. http://doi.org/10.1148/radiol.12120062

12. Helbren, E., Halligan, S., Phillips, P., Boone, D., Fanshawe, T. R., Taylor, S. A., Mallett, S. (2014). Towards a framework for analysis of eye-tracking studies in the three dimensional environment: A study of visual search by experienced readers of endoluminal CT colonography. British Journal of Radiology, 87(1037). http://doi.org/10.1259/bjr.20130614

13. Mallett, S., Phillips, P., Fanshawe, T. R., Helbren, E., Boone, D., Gale, A., Halligan, S. (2014). Tracking Eye Gaze during Interpretation of Endoluminal Three-dimensional CT Colonography: Visual Perception of Experienced and Inexperienced Readers. Radiology, 273(3), 783–792. http://doi.org/10.1148/radiol.14132896

14. Gegenfurtner, A., & Seppänen, M. (2013). Transfer of expertise: An eye tracking and think aloud study using dynamic medical visualizations. Computers and Education, 63, 393–403. http://doi.org/10.1016/j.compedu.2012.12.021

15. Cooper, L., Gale, A., Darker, I., Toms, A., & Saada, J. (2009). Radiology Image Perception and Observer Performance: How does expertise and clinical information alter interpretation? Progress in Biomedical Optics and Imaging - Proceedings of SPIE, 7263, 72630K. http://doi.org/10.1117/12.811098

16. Venjakob, A. C., Marnitz, T., Phillips, P., & Mello-Thoms, C. R. (2016). Image Size Influences Visual Search and Perception of Hemorrhages When Reading Cranial CT: An Eye-Tracking Study. Human Factors: The Journal of the Human Factors and Ergonomics Society, 58(3), 441–451. http://doi.org/10.1177/0018720816630450

17. Venjakob, A. C., & Mello-Thoms, C. R. (2015). Review of prospects and challenges of eye tracking in volumetric imaging. Journal of Medical Imaging, 3(1), 011002. http://doi.org/10.1117/1.JMI.3.1.011002

18. Khosravan, N., Celik, H., Turkbey, B., Cheng, R., McCreedy, E., McAuliffe, M., … Bagci, U. (2016). Gaze2Segment: A Pilot Study for Integrating Eye-Tracking Technology into Medical Image Segmentation, 1–11. Retrieved from http://arxiv.org/abs/1608.03235

Figures