1278

Automatic reference-free detection and quantification of MR image artifacts in human examinations due to motion

Thomas Küstner1,2, Annika Liebgott1,2, Lukas Mauch2, Petros Martirosian3, Konstantin Nikolaou1, Fritz Schick3, Bin Yang2, and Sergios Gatidis1

1Department of Radiology, University of Tuebingen, Tuebingen, Germany, 2Institute of Signal Processing and System Theory, University of Stuttgart, Stuttgart, Germany, 3Section on Experimental Radiology, University of Tuebingen, Tuebingen, Germany

Synopsis

MRI has a broad range of applications due to its flexible acquisition capabilities. This flexibility demands profound knowledge and careful parameter adjustment to identify stable parameter sets that guarantee high image quality under various and, especially in patient examinations, unpredictable conditions. The complex nature of MRI and its long examination times make it susceptible to artifacts, which can markedly reduce diagnostic image quality. Early detection and possible correction of these artifacts are therefore desired. In this work, we propose a convolutional neural network to automatically detect, localize and quantify motion artifacts. Initial results in the head and abdomen demonstrate the method’s potential.

Purpose

MRI is a versatile imaging modality which allows the precise and non-invasive assessment of anatomical structures and pathophysiological pathways. Flexible sequence and reconstruction parametrization makes it highly tunable to specific applications in order to meet the diagnostic requirements. These quality criteria and the respective parametrizations need to be optimized to ensure sufficient quality under changing acquisition conditions, often without the availability of reference data. The relatively long examination times and varying acquisition conditions predispose MRI to imaging artifacts which may deteriorate diagnostic image quality. Artifacts can originate from hardware imperfections, signal processing inaccuracies and, in most cases, from the compliance and behavior of the patient, which can demand a re-scan if the image is too severely affected, e.g. by motion. However, the quality with respect to the diagnostic application is often only checked retrospectively through manual reading by a human observer (HO) when the patient has already left the facility, i.e. a re-scan is only possible with greater effort in an additional exam. Careful operation together with prospective or retrospective correction techniques [1,2] may reduce the artifact burden, but these techniques are not always applicable and/or cannot correct for all types of induced artifacts. It is therefore desirable to have an automatic image quality assessment directly after the acquisition, with feedback on the acquired image quality and a suggestion for a suitable sequence re-parametrization to the operating technician. We therefore proposed a non-reference MR image quality assessment (IQA) system based on a machine-learning approach to predict HO labeling scores of arbitrary input images corrupted with unknown artifacts [3,4,5]. A reliable quality prediction depends on the derived features. In this work, we therefore focus on the automatic detection and quantification of motion artifacts, using rigid head motion and non-rigid respiratory motion in the thorax as examples. Existing motion detection methods aim to track the underlying motion by an MR navigator [6,7,8], via external sensors [9,10,11], or from the acquired time-series images, e.g. via registration [12,13]. However, no motion quantification or localization has been conducted so far. We propose the use of a convolutional neural network (CNN) to quantify and localize motion artifacts for IQA.

Material and Methods

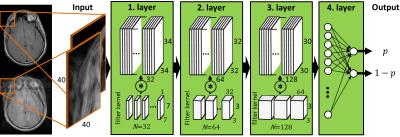

The proposed CNN is shown in Fig. 1. The CNN consists of three convolutional layers for the detection and localization of the artifacts. A subsequent fully connected multilayer perceptron with a sigmoid activation function feeds two soft-max output nodes for a probabilistic quantification p of the artifact burden. Input images are divided into overlapping patches of size 40×40 to enable the localization and to reduce the computational complexity, i.e. the number of parameters to be trained. Each convolutional layer consists of N filter kernels of size M×L×B, a rectified linear unit (ReLU) activation function and a downsampling layer. A dyadic increase in the filter kernel depth N provides a multi-resolution approach. Filter coefficients and bias values are trained via steepest gradient descent. Parameter optimization and training are conducted by means of a 10-fold cross-validation on 10 volunteer datasets (3 female, age 27±4.5 years). Datasets are acquired for each volunteer on a whole-body 3T PET/MR (Biograph mMR, Siemens) with a T1w-TSE in the head and a T1w-GRE in the abdomen. For each region, two datasets are acquired, one without and one with motion artifacts (head: rigid head tilting, abdomen: non-rigid respiratory movement), which serve directly as labels for the supervised training.

Results and Discussion

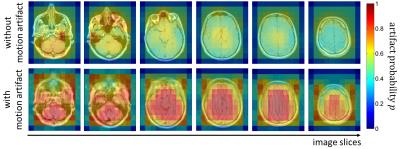

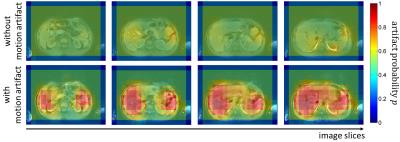

Figs. 2 and 3 display the acquired images of one subject with and without motion artifacts in the head and abdomen, respectively, with a colored overlay of the probability map p for the patches. The artifact-affected regions are correctly localized and show a high probability of motion artifacts. Across all subjects, an average test accuracy of 85% in the head and 70% in the abdomen was achieved. Motion detection is thus possible in both regions, with superior performance in the head region because of the more systematic artifact manifestation in the image. These initial results indicate the potential feasibility, but the study has limitations. So far, no patch-based labeling (motion-free and motion-affected) has been conducted, and the artifact detection targeted only motion artifacts in a specific sequence for a single anatomic region.

Conclusion

We propose an algorithm to detect, localize and quantify motion artifacts in the head and abdominal region for T1w sequences. Future studies will investigate the reliability and robustness of the method when the results are transferred to other sequences, body regions and artifacts. This would enable its use as a reliable automatic predictor in the context of an automatic IQA.

Acknowledgements

No acknowledgement found.

References

[1] McClelland et al., Med Image Anal 2013:17(1).
[2] Godenschweger et al., Phys Med Biol 2016:61(5).
[3] Küstner et al., Proc ISMRM 2015.
[4] Küstner et al., Proc ISMRM 2016.
[5] Liebgott et al., Proc ICASSP 2016.
[6] Ehman and Felmlee, Radiology 1989:173.
[7] Fu et al., MRM 1995:34.
[8] Ward et al., MRM 2000:43.
[9] Derbyshire et al., JMRI 1998:8.
[10] Zaitsev et al., Neuroimage 2006:31.
[11] Maclaren et al., PLoS One 2012:7.
[12] Kim et al., IEEE TMI 2010:29.
[13] Metz et al., Med Image Anal 2011:15.

Figures

Fig. 1: Proposed convolutional neural network with three hidden layers and one fully-connected output layer to estimate artifact probabilities p on a patch-basis.
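The architecture in Fig. 1 can be illustrated with a minimal NumPy forward pass. This is a sketch under stated assumptions, not the authors' implementation: the filter counts (8, 16, 32), the kernel sizes (5, 5, 3), 2×2 max-pooling as the downsampling step, the hidden width (64), the random initialization and the ordering of the two output classes are all hypothetical, since the abstract does not give values for M, L, B or N. Only the 40×40 patch size, the three convolutional layers with ReLU and downsampling, the sigmoid-activated fully connected layer and the two soft-max outputs are taken from the text.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# All sizes below are illustrative assumptions; the abstract does not state them.
FILTERS = (8, 16, 32)   # assumed dyadic increase of the number of kernels N
KERNELS = (5, 5, 3)     # assumed square kernel sizes per layer
HIDDEN = 64             # assumed width of the fully connected layer
PATCH = 40              # patch size taken from the text


def conv_relu_pool(x, w, b):
    """Valid cross-correlation, ReLU, then 2x2 max-pooling.

    x: (C_in, H, W) feature maps; w: (N, C_in, k, k) kernels; b: (N,) biases.
    """
    k = w.shape[-1]
    # windows has shape (C_in, H-k+1, W-k+1, k, k)
    windows = sliding_window_view(x, (k, k), axis=(1, 2))
    y = np.einsum("chwij,ncij->nhw", windows, w) + b[:, None, None]
    y = np.maximum(y, 0.0)                        # rectified linear unit
    n, h, wd = y.shape
    h2, w2 = h // 2, wd // 2
    # Crop to an even size, then take the maximum over each 2x2 block.
    y = y[:, : 2 * h2, : 2 * w2].reshape(n, h2, 2, w2, 2)
    return y.max(axis=(2, 4))


def conv_features(patch, conv_params):
    """Run one 2D patch through the convolutional stack and flatten."""
    x = patch[None, :, :]                         # add a channel axis
    for w, b in conv_params:
        x = conv_relu_pool(x, w, b)
    return x.ravel()


def init_params(rng):
    """Randomly initialized parameters (untrained, for illustration only)."""
    conv, c_in = [], 1
    for n, k in zip(FILTERS, KERNELS):
        scale = np.sqrt(2.0 / (c_in * k * k))
        conv.append((rng.normal(0.0, scale, (n, c_in, k, k)), np.zeros(n)))
        c_in = n
    n_feat = conv_features(np.zeros((PATCH, PATCH)), conv).size
    return {
        "conv": conv,
        "W1": rng.normal(0.0, 1.0 / np.sqrt(n_feat), (HIDDEN, n_feat)),
        "b1": np.zeros(HIDDEN),
        "W2": rng.normal(0.0, 1.0 / np.sqrt(HIDDEN), (2, HIDDEN)),
        "b2": np.zeros(2),
    }


def predict(patch, params):
    """Return soft-max probabilities; class order (free, affected) is assumed."""
    f = conv_features(patch, params["conv"])
    h = 1.0 / (1.0 + np.exp(-(params["W1"] @ f + params["b1"])))  # sigmoid
    z = params["W2"] @ h + params["b2"]
    e = np.exp(z - z.max())                       # numerically stable soft-max
    return e / e.sum()


rng = np.random.default_rng(0)
params = init_params(rng)
p = predict(rng.random((PATCH, PATCH)), params)
print(p.shape, p.sum())
```

With these assumed sizes, a 40×40 patch shrinks to 18×18, 7×7 and finally 2×2 feature maps, so the fully connected layer sees 32·2·2 = 128 features. Training by gradient descent is omitted here.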

Fig. 2: Probability map p for artifact occurrence on patch-basis overlaid on a T1w TSE image of the head in different slice locations. Top row: images acquired without artifact (lying still). Bottom row: images acquired with artifact (head tilting in left-right direction).

Fig. 3: Probability map p for artifact occurrence on patch-basis overlaid on a T1w GRE abdominal image in different slice locations. Top row: images acquired without artifact (breath-hold). Bottom row: images acquired with artifact (free-breathing).
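The patch-wise probability maps in Figs. 2 and 3 could be assembled along the following lines. This is a sketch only: the stride of 20 (50% overlap), the averaging of probabilities where patches overlap, and the function names `extract_patches` and `probability_map` are assumptions, as the abstract states only that overlapping 40×40 patches are used. The per-patch probabilities are placeholder values standing in for the CNN output.

```python
import numpy as np


def extract_patches(image, size=40, stride=20):
    """Cut a 2D image into overlapping patches.

    Returns the stacked patches and their top-left corners.
    The stride (and therefore the overlap) is an assumed value.
    """
    h, w = image.shape
    corners = [(r, c)
               for r in range(0, h - size + 1, stride)
               for c in range(0, w - size + 1, stride)]
    patches = np.stack([image[r:r + size, c:c + size] for r, c in corners])
    return patches, corners


def probability_map(shape, corners, probs, size=40):
    """Average per-patch artifact probabilities into a per-pixel map.

    Pixels not covered by any patch (possible at the image border when the
    image size does not match the stride) are left at zero.
    """
    acc = np.zeros(shape)
    cnt = np.zeros(shape)
    for (r, c), p in zip(corners, probs):
        acc[r:r + size, c:c + size] += p
        cnt[r:r + size, c:c + size] += 1
    return np.divide(acc, cnt, out=np.zeros(shape), where=cnt > 0)


image = np.zeros((100, 100))             # placeholder slice, not real data
patches, corners = extract_patches(image)
probs = np.full(len(corners), 0.7)       # placeholder for CNN outputs p
pmap = probability_map(image.shape, corners, probs)
print(patches.shape, pmap.shape)
```

The resulting map has the same size as the input slice and can be drawn as a semi-transparent colored overlay on the anatomical image.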