0735

MRI-guided Needle Biopsy using Augmented Reality

1Mechanical Engineering, Stanford University, Stanford, CA, United States, 2Radiological Sciences, Stanford University, CA, United States, 3Bioengineering, Stanford University, CA, United States, 4Mechanical Engineering, Stanford University, CA, United States

Synopsis

We present an augmented reality system that integrates needle shape sensing technology and a visual tracking system to enable more effective visualization of preoperative MR images during MR-guided needle biopsy procedures. It allows physicians to view 3D MR images of targets within the patient fused onto the patient anatomy, as well as a virtual biopsy needle registered to the real needle. This provides what-you-see-is-what-you-get interaction even after the needle is inserted into the opaque body. It can improve on current MR-guided needle biopsy by providing faster tracking and by enabling real-time interactive procedures based on pre-acquired scans, thus avoiding costly MRI scans.

Introduction

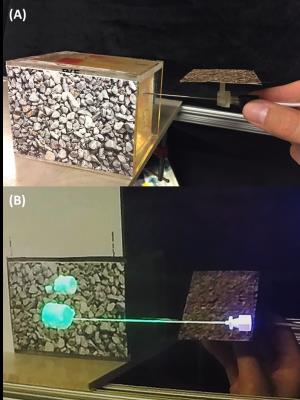

Currently, MRI-guided needle biopsy is performed with one of two methods: using pre-acquired MR images, or interleaving MRI scans with needle insertion. In the first method, physicians must build a mental mapping of the current state of the anatomy, which is challenging. In the second method, physicians receive continuous updates of the current needle position, but this is time-consuming and requires multiple scans. In this work, we have developed an application for the Microsoft HoloLens (Figures 1 and 2) to: (1) visualize holograms of pre-operative 3D MRI optically registered to the subject, giving physicians a what-you-see-is-what-you-get interaction; and (2) visualize a hologram of the needle whose shape and position update in real time [1]. This enables physicians to see both the tip of the needle and the target inside the patient faster than with current methods of MRI guidance. We expect this to shorten procedure time, thereby reducing cost and potentially improving the success rate of needle biopsies.

Methods

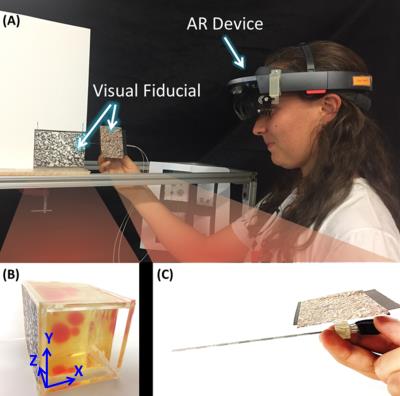

The HoloLens (Figure 3-A) was used to display holograms of a biopsy needle and phantom tissue targets. Both the needle handle and the target were tracked individually in real time using fiducials (Figure 3-B,C), so that the holograms could be registered to the real objects.
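As an illustration of fiducial-based registration, the hologram pose can be thought of as a chain of rigid transforms: the tracked pose of the fiducial in the headset frame, composed with a calibrated pose of the model relative to the fiducial. The following is a minimal sketch under that assumption, not the HoloLens application's actual code; the function names, frame names, and numeric values are hypothetical.

```python
import math

def mat_mul(a, b):
    """Multiply two 4x4 homogeneous matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def pose(yaw_deg, tx, ty, tz):
    """Homogeneous transform: rotation about z by yaw_deg, then translation (m)."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    return [[c, -s, 0.0, tx],
            [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]

def transform_point(t, p):
    """Apply a 4x4 transform to a 3D point."""
    v = (p[0], p[1], p[2], 1.0)
    return tuple(sum(t[i][k] * v[k] for k in range(4)) for i in range(3))

# Hypothetical values: fiducial pose reported by the tracker (headset frame),
# and a fixed calibration from the fiducial to the needle model origin.
headset_T_fiducial = pose(90.0, 0.10, 0.00, 0.50)
fiducial_T_model = pose(0.0, 0.02, 0.00, 0.00)

# Pose at which the hologram should be drawn so it overlays the real object.
headset_T_model = mat_mul(headset_T_fiducial, fiducial_T_model)
model_origin_in_headset = transform_point(headset_T_model, (0.0, 0.0, 0.0))
```

Because the needle handle and the target carry separate fiducials, a chain like this would be evaluated once per tracked object on every tracking update.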

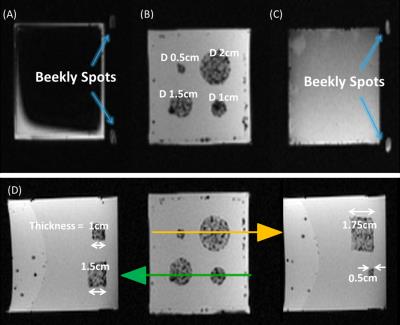

A PVC-based phantom with embedded Gadolinium-doped PVC targets of different sizes (Figure 3-B) was imaged in a 3T MRI scanner using a 3D gradient-echo sequence (Figure 4). The individual targets were then segmented and uploaded to the HoloLens. Six subjects were asked to perform needle punctures on these targets. They were instructed to puncture the center of the target as accurately as possible in a single insertion, without retracting the needle to correct the path. Each subject performed the task under three different conditions, presented in random order.

1. No HoloLens (NH) Control: Puncture guided only by 2D images of pre-acquired MRI scans of the targets inside the phantom tissue.

2. With HoloLens and without shape-sensing (WHNS): Puncture assisted by holographic display of targets (reconstructed from the 3D MR image) and needle assuming a rigid shaft.

3. With HoloLens and with shape-sensing (WHWS): Same as condition 2, but with the displayed needle shaft deflection updated using optical strain sensor data.
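The difference between conditions 2 and 3 is whether the displayed shaft is a straight line or follows the measured bending. A rough sketch of how strain readings could be turned into a tip deflection is given below, using the standard beam-bending relation (strain equals curvature times distance from the neutral axis) and a small-deflection double integration. This is an illustrative assumption, not the shape-sensing method of [1]; the sensor spacing, fiber offset, needle length, and strain values are hypothetical.

```python
def curvature_from_strain(strain, fiber_offset_m):
    """Beam bending: strain = curvature * distance from the neutral axis."""
    return strain / fiber_offset_m

def tip_deflection(curvatures, length_m):
    """Integrate curvature twice along the shaft (small-deflection assumption).

    curvatures: samples (1/m) at equally spaced points from the base,
    assumed clamped with zero slope, to the tip.
    Returns the lateral tip deflection in meters.
    """
    ds = length_m / (len(curvatures) - 1)
    slope = 0.0
    deflection = 0.0
    for i in range(1, len(curvatures)):
        # Trapezoidal rule for slope, then again for deflection.
        new_slope = slope + 0.5 * (curvatures[i - 1] + curvatures[i]) * ds
        deflection += 0.5 * (slope + new_slope) * ds
        slope = new_slope
    return deflection

# Hypothetical readings: uniform strain of 1e-4 at a fiber offset of 0.1 mm.
strains = [1e-4] * 16
kappa = [curvature_from_strain(e, 1e-4) for e in strains]

bent_tip = tip_deflection(kappa, 0.15)         # shape-sensing display (WHWS)
rigid_tip = tip_deflection([0.0] * 16, 0.15)   # rigid-shaft display (WHNS)
```

With zero curvature the sketch reduces to the rigid-shaft assumption of condition 2, so the gap between `bent_tip` and `rigid_tip` is the tip error that a straight-needle hologram would hide.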

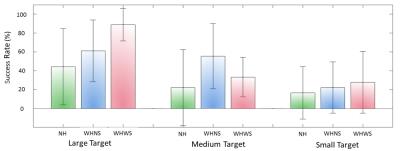

A puncture was counted as a success only if the final needle tip position fell within the boundaries of a target. The success rate was calculated for each target size in each condition. At the end of each puncture, subjects were asked to report their confidence in the success of the puncture on a scale of 1 to 5. Data were collected from six subjects aged 23-30 with no medical experience.
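The success criterion and the per-condition, per-size success rate can be sketched as follows. This is a minimal illustration that assumes spherical targets and a hypothetical trial record layout; the trial values are placeholders for demonstration, not data from the study.

```python
import math
from collections import defaultdict

def is_hit(tip, center, diameter_mm):
    """Success if the final tip lies inside a target assumed to be a sphere."""
    return math.dist(tip, center) <= diameter_mm / 2.0

def success_rates(trials):
    """Return {(condition, diameter_mm): fraction of successful punctures}."""
    counts = defaultdict(lambda: [0, 0])  # key -> [hits, total]
    for t in trials:
        key = (t["condition"], t["diameter_mm"])
        counts[key][0] += int(is_hit(t["tip"], t["center"], t["diameter_mm"]))
        counts[key][1] += 1
    return {key: hits / total for key, (hits, total) in counts.items()}

# Placeholder trials (positions in mm), for illustration only.
trials = [
    {"condition": "NH", "diameter_mm": 5, "tip": (0, 0, 3), "center": (0, 0, 0)},
    {"condition": "NH", "diameter_mm": 5, "tip": (1, 1, 1), "center": (0, 0, 0)},
    {"condition": "WHWS", "diameter_mm": 5, "tip": (0, 2, 0), "center": (0, 0, 0)},
    {"condition": "WHWS", "diameter_mm": 5, "tip": (0, 0, 2), "center": (0, 0, 0)},
]
rates = success_rates(trials)
```

Grouping by the pair of condition and target size mirrors how the success rate is reported: one value per target size within each of NH, WHNS, and WHWS.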

Results

Figure 5 shows the success rate of the punctures for each target size. The mean success rate was higher in both the WHNS and WHWS conditions than in the NH control condition.

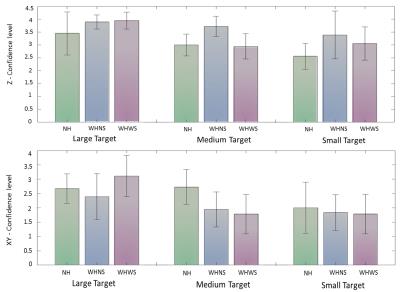

Figure 6 shows the subjects’ confidence in accurately hitting the target in the insertion depth (z-axis) and in the x-y dimension. For all target sizes, the mean confidence in the insertion depth (z-axis) was higher (μ = 3.0, 3.7, 3.3) than the mean confidence in the tip’s x-y location (μ = 2.5, 2.1, 2.2). In the x-y dimension, users were more confident in the NH condition, whereas in the z-dimension, users were more confident in the WHNS and WHWS conditions.

Discussion

Users were more confident and achieved higher success rates when guided with the HoloLens, both with and without shape sensing. However, the overall success rate for small targets (5 mm diameter) was still below 50% in all conditions. To increase the success rate, guiding the initial insertion angle and position of the needle is critical, since subjects were not allowed to retract the needle for path correction.

Subjects reported lower confidence in the needle tip’s x-y position when using the HoloLens. This could be improved in the future by varying the shading, lighting, and depth-of-field blur of the holograms, and may also improve as users gain more experience. On the other hand, users were more confident about the needle insertion depth (z position), owing to the visual cue of the holograms occluding the needle tip once inserted.

Conclusion

A HoloLens application has been developed for MR-guided needle biopsy. Our preliminary phantom experiments show that the holograms improve targeting performance. The alignment of the holograms to the real objects should be improved further to increase the success rate for smaller targets.

Acknowledgements

This work was supported by NIH P01 CA159992, the NSF Graduate Research Fellowship Program, and a Kwanjeong Scholarship.

References

[1] Elayaperumal, Santhi, et al. "Autonomous real-time interventional scan plane control with a 3-D shape-sensing needle." IEEE Transactions on Medical Imaging 33.11 (2014): 2128-2139.

Figures