0689

Machine Learning for Intelligent Detection and Quantification of Transplanted Cells in MRI

¹Michigan State University, East Lansing, MI, United States; ²Michigan State University, MI, United States; ³Radiology, Michigan State University, MI, United States

Synopsis

Cell-based therapy (CBT) is promising for treating a number of diseases. The ability to serially and non-invasively measure the number and precise location of cells after delivery would aid both the research and development of CBT and its clinical implementation. MRI-based cell tracking with magnetically labeled cells has been used for the past 20 years to detect transplanted cells, achieving detection limits of individual cells in vivo. These individual cells appear as dark spots in T2*-weighted MRI. Manually enumerating these spots, and hence counting cells, in an in vivo MRI is tedious, highly time-consuming, and prone to inconsistency. It is therefore practically infeasible for an expert to perform such manual enumeration for very large-scale analyses, which limits our ability to monitor CBT. To address this challenge, we have designed a machine learning methodology for automatically quantifying transplanted cells in MRI accurately and efficiently.

Introduction

Cell-based therapy (CBT) is promising for treating a number of diseases (1)(2)(3). The ability to serially and non-invasively measure the number and precise location of cells after delivery would aid both the research and development of CBT and its clinical implementation. MRI-based cell tracking with magnetically labeled cells has been used for the past 20 years to detect transplanted cells, achieving detection limits of individual cells in vivo (4). These individual cells appear as dark spots in T2*-weighted MRI (see Fig. 1). Manually enumerating these spots, and hence counting cells, in an in vivo MRI is tedious, highly time-consuming, and prone to inconsistency. It is therefore practically infeasible for an expert to perform such manual enumeration for very large-scale analyses, which limits our ability to monitor CBT. To address this challenge, we have designed a machine learning methodology for automatically quantifying transplanted cells in MRI accurately and efficiently. This methodology has three key innovations: 1) a convolutional neural network (CNN) designed for automatic feature extraction; 2) a new transfer learning approach designed to learn from sparse data; and 3) a CNN architecture that exploits the expert's labeling behavior.

Methods

MRI and Datasets:

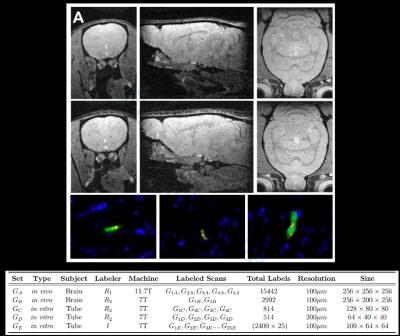

A total of 33 in vitro MRI scans were acquired using a FLASH sequence with a diverse set of imaging parameters on agarose phantoms containing a dilute, known number of 4.5 µm diameter MPIOs (Dynal). Each bead contains 10 pg of iron, simulating a single labeled cell. In vivo MRI of 7 rat brains was performed similarly: 5 rats had previously received intracardiac injections of MPIO-labeled mesenchymal stem cells (MSCs), delivering cells to the brain, and 2 rats were naïve (see Fig. 1 for more details).

Machine Learning Methods:

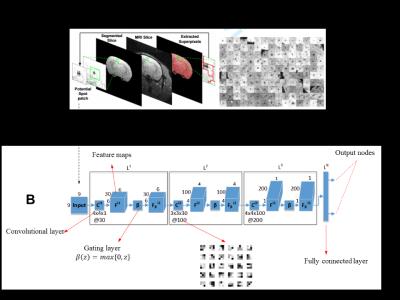

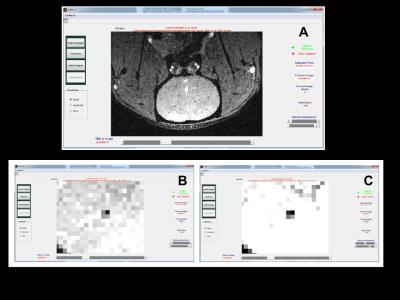

A deep learning CNN architecture was designed to detect transplanted, magnetically labeled cells in MRI (see Fig. 2). The CNN first requires labeled data to learn the concept of a spot in MRI. Once trained, it can detect spots accurately in unseen MRI scans, so that large-scale quantification can be performed efficiently. Custom labeling software was designed and developed so that an expert could provide training labels for spots in MRI using manual mouse clicks (see Fig. 3).
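To make the patch-classification idea concrete, here is a minimal NumPy sketch of a forward pass that maps one 9×9 patch (the patch size given in the Fig. 2 caption) to a spot probability at a single output node. The layer sizes (one convolutional layer of 8 filters of 3×3, ReLU, and a logistic output), the parameter names, and the helper functions are hypothetical choices for illustration, not the architecture shown in Fig. 2, and the weights are random rather than trained on expert labels.

```python
import numpy as np

PATCH = 9      # 9x9 patches, as in the Fig. 2 caption
N_FILTERS = 8  # hypothetical number of convolutional filters
KSIZE = 3      # hypothetical filter size


def conv2d_valid(x, kernels):
    """'Valid' 2D cross-correlation of one HxW patch with K kxk kernels -> (K, H-k+1, W-k+1)."""
    k = kernels.shape[-1]
    oh, ow = x.shape[0] - k + 1, x.shape[1] - k + 1
    out = np.empty((kernels.shape[0], oh, ow))
    for i in range(oh):
        for j in range(ow):
            # Dot product of every kernel with the current kxk window.
            out[:, i, j] = np.tensordot(kernels, x[i:i + k, j:j + k], axes=([1, 2], [0, 1]))
    return out


def init_params(rng):
    """Random (untrained) weights for the hypothetical layer sizes above."""
    feat_dim = N_FILTERS * (PATCH - KSIZE + 1) ** 2
    return {
        "kernels": rng.normal(0.0, 0.1, size=(N_FILTERS, KSIZE, KSIZE)),
        "conv_bias": np.zeros(N_FILTERS),
        "w_out": rng.normal(0.0, 0.01, size=feat_dim),
        "b_out": 0.0,
    }


def spot_probability(patch, params):
    """Conv -> ReLU -> flatten -> single logistic output node giving P(spot)."""
    feats = conv2d_valid(patch, params["kernels"]) + params["conv_bias"][:, None, None]
    feats = np.maximum(feats, 0.0)  # ReLU
    logit = feats.reshape(-1) @ params["w_out"] + params["b_out"]
    return 1.0 / (1.0 + np.exp(-logit))  # sigmoid


rng = np.random.default_rng(0)
params = init_params(rng)
patch = rng.normal(size=(PATCH, PATCH))  # stand-in for one extracted MRI patch
p = spot_probability(patch, params)
print(f"P(spot) = {p:.3f}")
```

In practice the weights would be learned from labeled spot and non-spot patches; the loop-based convolution favors readability over speed.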

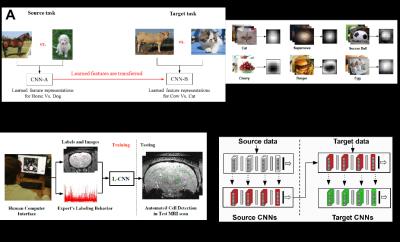

Deep learning generally requires an enormous amount of labeled training data, which is often challenging to obtain in medical applications. Humans, on the other hand, can learn a new visual entity from only a few examples by drawing on previously learned concepts. Inspired by this, we redesigned the approach to exploit transfer learning (5). Our deep learning approach first learns about a large number of real-world entities, such as apples, stars, and eyes, and then draws on this prior knowledge while learning the concept of spots in MRI (see Fig. 4). However, not all prior knowledge is useful; some can even degrade learning. We therefore propose an information-theoretic framework that automatically evaluates previous concepts (previous CNNs) to choose the best source of knowledge before transfer is performed. This selection is designed to maximize the performance gain even when very little training data is available.
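The abstract does not specify the information-theoretic criterion used to rank source CNNs. As one plausible stand-in (our assumption, not the authors' framework), a source CNN can be scored by the empirical mutual information between the source classes it predicts on labeled target patches and the target spot/non-spot labels; a higher score suggests its features carry more information about spots. The function names, source names, and toy predictions below are hypothetical.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Empirical mutual information (in bits) between two discrete label sequences."""
    if len(xs) != len(ys) or not xs:
        raise ValueError("sequences must be non-empty and of equal length")
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px, py = Counter(xs), Counter(ys)
    # Sum over observed pairs of p(x,y) * log2(p(x,y) / (p(x) p(y))).
    return sum((c / n) * math.log2(c * n / (px[x] * py[y])) for (x, y), c in joint.items())


def rank_sources(source_predictions, target_labels):
    """Rank hypothetical source CNNs by MI between their predictions and the target labels."""
    scores = {name: mutual_information(preds, target_labels)
              for name, preds in source_predictions.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical target labels (1 = spot, 0 = non-spot) for eight labeled MRI patches.
target = [1, 1, 0, 0, 1, 0, 1, 0]
# Hypothetical predictions of each source CNN on the same patches, in its own class ids.
sources = {
    "apples": ["a"] * 8,                                # uninformative about spots
    "stars": ["s", "s", "t", "t", "s", "t", "s", "t"],  # tracks spots exactly
    "eyes": ["e", "o", "o", "o", "e", "o", "e", "e"],   # partially informative
}
for name, mi in rank_sources(sources, target):
    print(f"{name}: {mi:.3f} bits")
```

Because mutual information does not depend on how the source classes are named, a source CNN trained on entirely different entities can still be compared against the spot/non-spot task before any transfer is attempted.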

Moreover, beyond MRI scans and labels as training input, we are the first to demonstrate that the labeling behavior of the medical expert using the software can also be used to further improve performance. The deep learning architecture was further redesigned to exploit labeling behavior as one-class side information (see Fig. 4D).
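The abstract does not describe which aspects of labeling behavior are recorded. The sketch below assumes, hypothetically, that each mouse click logs the view in use (mirroring the three views of the labeling software in Fig. 3) and the time elapsed since the previous click. It only illustrates why such information is "one-class": behavior exists for patches the expert clicked (spots), while unclicked non-spot patches carry none. All class, field, and function names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

# Hypothetical view modes, mirroring the three views of the labeling software (Fig. 3).
VIEW_MODES = ("basic", "zoomed", "zoomed_contrast")


@dataclass
class ClickEvent:
    """One expert click on a spot (hypothetical log format)."""
    row: int
    col: int
    view_mode: str             # view in use when the spot was clicked
    seconds_since_last: float  # hesitation before this click


@dataclass
class TrainingRow:
    coord: tuple[int, int]
    label: int                   # 1 = spot, 0 = non-spot
    side: Optional[list[float]]  # behavior features; None for non-spots


def behavior_features(event: ClickEvent) -> list[float]:
    """One-hot view mode plus hesitation time (hypothetical encoding)."""
    if event.view_mode not in VIEW_MODES:
        raise ValueError(f"unknown view mode: {event.view_mode}")
    one_hot = [1.0 if event.view_mode == m else 0.0 for m in VIEW_MODES]
    return one_hot + [event.seconds_since_last]


def build_rows(clicks, negative_coords):
    """Side information exists only for the clicked (positive) class."""
    rows = [TrainingRow((c.row, c.col), 1, behavior_features(c)) for c in clicks]
    rows += [TrainingRow(rc, 0, None) for rc in negative_coords]
    return rows


clicks = [ClickEvent(40, 52, "basic", 0.8), ClickEvent(77, 13, "zoomed_contrast", 4.2)]
rows = build_rows(clicks, negative_coords=[(10, 10), (90, 90)])
for r in rows:
    print(r)
```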

Results and Discussion

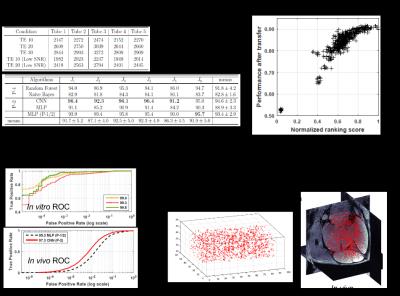

Transplanted magnetically labeled cells appeared as dark contrast spots throughout the rat brain in MRI, and their presence was confirmed by histology (see Fig. 1). The area under the receiver operating characteristic (ROC) curve (AUC) was used as the standard measure of accuracy. Separate MRI scans were used for the training and testing stages. Experimental results show that deep learning can automatically detect cells in vivo with an accuracy of up to 97.3%. A thorough evaluation was performed using multiple in vivo scans acquired on different machines, in vitro scans with known cell numbers, MRI scans with added noise, and other variations. The deep learning approach was robust to noise and data variations (see Fig. 5).
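AUC has a convenient probabilistic reading: it is the probability that a randomly chosen spot patch receives a higher detector score than a randomly chosen non-spot patch, with ties counted as one half. A minimal pure-Python sketch of that pairwise (Mann-Whitney) computation follows; the function name and the scores are made up for illustration.

```python
def roc_auc(scores, labels):
    """AUC as P(score_pos > score_neg), ties counted as 0.5 (Mann-Whitney form)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Made-up detector scores for three spot (1) and three non-spot (0) patches.
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
labels = [1, 1, 1, 0, 0, 0]
print(round(roc_auc(scores, labels), 3))  # 8 of 9 pairs ranked correctly -> 0.889
```

The pairwise loop costs O(P·N) for P positives and N negatives; for large patch sets a rank-based formula or a library routine such as scikit-learn's `roc_auc_score` is preferable.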

Using the proposed transfer learning approach, we show that the labeling effort of a medical expert can be reduced by 95% while still maintaining ~90% accuracy. This could impact a number of clinical applications in which obtaining labeled training data is a challenge.
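A labeling-effort experiment of this kind can be simulated by training on a small fraction of the expert's labels. The abstract does not say how the 5% subset was drawn; the sketch below assumes stratified random sampling so that the spot/non-spot ratio is preserved. The function name and the label counts are hypothetical.

```python
import random
from collections import defaultdict


def stratified_subset(labels, fraction, seed=0):
    """Indices of a random subset that keeps each class's share (at least one per class)."""
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    rng = random.Random(seed)
    chosen = []
    for _, idxs in sorted(by_class.items()):
        k = max(1, round(fraction * len(idxs)))
        chosen.extend(rng.sample(idxs, k))
    return sorted(chosen)


# Hypothetical pool of expert-labeled patches: 1,000 spots and 19,000 non-spots.
labels = [1] * 1_000 + [0] * 19_000
subset = stratified_subset(labels, fraction=0.05)  # 5% of the labels, i.e. 95% less labeling
n_spots = sum(labels[i] for i in subset)
print(len(subset), n_spots)
```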

We also present a framework demonstrating that labeling behavior, used as additional side information, can further improve performance by 1% on average.

Acknowledgements

We are grateful for the support provided by NIH grants R21CA185163 (EMS), R01DK107697 (EMS), and R21AG041266 (EMS).

References

(1) Clinical trial: A Study to Evaluate the Safety of Neural Stem Cells in Patients With Parkinson's Disease. Website: https://clinicaltrials.gov/ct2/show/NCT02452723 (first received May 18, 2015; last updated March 10, 2016; last verified February 2016).

(2) Clinical trial: Umbilical Cord Tissue-derived Mesenchymal Stem Cells for Rheumatoid Arthritis. Website: https://clinicaltrials.gov/ct2/show/NCT01985464 (first received October 31, 2013; last updated February 4, 2016; last verified February 2016).

(3) Clinical trial: Evaluation of Autologous Mesenchymal Stem Cell Transplantation (Effects and Side Effects) in Multiple Sclerosis. Website: https://clinicaltrials.gov/ct2/show/NCT01377870 (first received June 19, 2011; last updated April 24, 2014; last verified August 2010).

(4) Shapiro, E. M., Sharer, K., Skrtic, S., and Koretsky, A. P. In vivo detection of single cells by MRI. Magnetic Resonance in Medicine, 2006, 55(2), 242-249.

(5) Pan, S. J., and Yang, Q. A survey on transfer learning. IEEE Transactions on Knowledge and Data Engineering, 2010, 22(10), 1345-1359.

Figures

Fig. 1. (A) Three orthogonal MRI slices extracted from 3D data sets of the brain from animals injected with unlabeled MSCs (top row) and magnetically labeled MSCs (middle row). Note that the distributed dark spots appear only in the brains of animals injected with labeled MSCs. The bottom row shows three different fluorescence histology sections from animals injected with magnetically labeled MSCs, confirming that these cells were present in the brain mostly as isolated, single cells. Blue indicates cell nuclei, green is the fluorescent label in the cell, and red is the fluorescent label of the magnetic particle.

(B) Characteristics of the collected dataset.

Fig. 2. (A) Each MRI is first tessellated into a large number of 9×9 patches extracted using a superpixel algorithm. Note that patches are extracted only from the automatically segmented brain region. On the right, several patches corresponding to spots are concatenated and shown. However, this process also extracts a large number of non-spot patches.

(B) The proposed deep learning CNN architecture takes each patch as input, processes it using the learned convolutional filters, and classifies it as a spot or non-spot patch at the output node.

Fig. 3. Developed labeling software: (A) Basic view used to label easy-to-detect spots. (B) Zoomed-in view to locate a spot. (C) Zoomed-in view with contrast adjustment for detailed contextual observation prior to labeling spots.

Fig. 4. (A) Basic idea of transfer learning: to perform well on the target task, knowledge from other (source) tasks can be utilized.

(B) Because the target dataset consists of MRI patches, hundreds of freely available labeled images of many entities (~200) are transformed into 9×9 patches, yielding a diverse set of spot-like and non-spot-like distributions. Average transformed images of a few entities are shown.

(C) CNN features are first learned from source data to recognize source entities. The learned source features are then transferred and refined using the MRI patch data.

(D) Overview of the proposed framework for utilizing labeling behavior.

Fig. 5. (A) In vitro evaluation: number of spots detected in MRI acquired under different conditions; the known ground truth for each tube is ~2400. In vivo evaluation: the different feature extraction methods we designed were compared on 6 testing scenarios; CNNs, which learn features automatically, performed best.

(B) ROC curves for three in vitro testing scenarios, and ROC comparison on in vivo MRI acquired on a different machine.

(C) Each point represents a source CNN concept; these are ranked automatically prior to transfer. Using only 5% of the labeled data, a performance of about 90% can be achieved.

(D) Visualization of the detected spots.