0645

Accelerated knee imaging using a deep learning based reconstruction

¹Radiology, NYU, New York, NY, United States; ²CAI2R, NYU, New York, NY, United States; ³Institute of Computer Graphics and Vision, Graz University of Technology, Graz, Austria; ⁴Radiology, University Hospital Basel, Basel, Switzerland; ⁵Austria Safety & Security Department, AIT Austrian Institute of Technology GmbH, Vienna, Austria

Synopsis

The goal of this study is to determine the diagnostic accuracy and image quality of a recently proposed deep learning based image reconstruction for accelerated MR examination of the knee. Twenty-five prospectively accelerated cases were evaluated by three readers and showed excellent concordance with the current clinical gold standard in the identification of internal derangement.

Purpose

Deep learning [1] is an emerging technology that has led to breakthrough improvements in areas as diverse as image classification [2] and playing the game of Go [3]. It has recently been shown that deep learning can also be used to reconstruct accelerated MRI data [4]. An open question is how such a reconstruction performs in the presence of pathology that was not present in the training data. The goal of this study is to determine whether accelerated MR examination of the knee using deep learning has the same diagnostic accuracy and image quality as conventional 2D turbo-spin-echo images for the diagnosis of internal derangement of the knee.

Methods

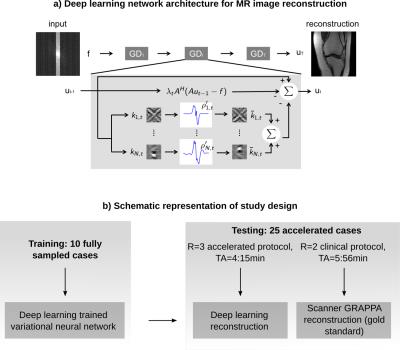

The general architecture of our deep neural network is sketched in Figure 1a. Our deep learning method consists of two stages. In the initial training stage, fully sampled raw data are used to generate artifact-free training images. These raw data are then retrospectively undersampled, which introduces the aliasing artifacts encountered in an accelerated acquisition. The network is trained to distinguish these artifacts from true image content, much as a radiologist draws on experience from reading numerous past cases to separate anatomical structures and pathologies from imaging artifacts. In the second stage, the trained network is used to reconstruct prospectively undersampled data from accelerated acquisitions (Figure 1b).
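The retrospective undersampling used to create training inputs can be illustrated with a minimal one-dimensional, single-coil sketch. It assumes a regular Cartesian mask that keeps every R-th k-space sample; the abstract does not state the sampling pattern, and the study itself used 2D data from a 15-channel coil. The helper names (`dft`, `idft`, `undersample`, `make_training_pair`) are hypothetical. Zero-filling the missing samples folds the signal onto itself, producing exactly the kind of aliasing the network is trained to remove.

```python
import cmath


def dft(x):
    """Naive discrete Fourier transform (image -> k-space)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    """Naive inverse discrete Fourier transform (k-space -> image)."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def undersample(kspace, R):
    """Keep every R-th k-space sample and zero the rest (assumed regular mask)."""
    return [v if k % R == 0 else 0 for k, v in enumerate(kspace)]


def make_training_pair(image, R):
    """Return (aliased zero-filled input, artifact-free target) for training."""
    aliased = idft(undersample(dft(image), R))
    return aliased, list(image)


image = [0, 0, 0, 0, 1, 2, 3, 2, 1, 0, 0, 0]  # toy 1D "anatomy", length 12
aliased, target = make_training_pair(image, R=3)
# Each output sample is the average of three image samples spaced 12/3 = 4 apart.
print([round(v.real, 3) for v in aliased])
# -> [0.667, 0.667, 1.0, 0.667, 0.667, 0.667, 1.0, 0.667, 0.667, 0.667, 1.0, 0.667]
```

The single peak of the toy image reappears three times at reduced amplitude; in a real training pipeline the aliased image would be the network input and the fully sampled image the target.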

Ten MRI examinations were acquired for the training stage using an unaccelerated protocol consisting of coronal and sagittal proton density (PD) weighted sequences with the following parameters: TR=2800 ms, TE=27 ms, turbo factor (TF)=4, matrix size 320×288 (coronal) and 384×307 (sagittal), combined acquisition time (TA)=11:09 min, on a 3T system (Skyra, Siemens) with a 15-channel knee coil. The network was trained separately for coronal and sagittal acquisitions. After training, 25 consecutive patients referred for diagnostic knee MRI to evaluate internal derangement were enrolled in this IRB-approved study. Images were acquired with the same parameters as above, except that parallel imaging with an acceleration factor of 2 (R=2) was added, which reduced TA to 5:56 min (our standard clinical sequences). In addition, prospectively accelerated data with R=3 (TA=4:15 min) were acquired and reconstructed with the proposed deep learning based algorithm.
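The acquisition times above translate into the following time savings. This small sketch only does the arithmetic on the stated TA values; the helper `to_seconds` and the protocol labels are illustrative. Note that the effective speed-ups come out below the nominal R; the abstract does not say why, and calibration data or other protocol overhead is only one plausible explanation.

```python
def to_seconds(mmss):
    """Convert an 'm:ss' acquisition time string to seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


# Combined coronal + sagittal PD acquisition times stated in the Methods.
TA = {
    "unaccelerated (training)": "11:09",
    "R=2 (clinical standard)": "5:56",
    "R=3 (deep learning)": "4:15",
}

full = to_seconds(TA["unaccelerated (training)"])
for name, mmss in TA.items():
    t = to_seconds(mmss)
    print(f"{name:26s} {t:4d} s  effective speed-up {full / t:.2f}x  "
          f"time saved vs. unaccelerated {100 * (1 - t / full):.1f}%")

r2 = to_seconds(TA["R=2 (clinical standard)"])
r3 = to_seconds(TA["R=3 (deep learning)"])
print(f"R=3 vs. R=2: {100 * (1 - r3 / r2):.1f}% shorter")
```

For these numbers the R=3 protocol is about 28% shorter than the clinical R=2 protocol and about 62% shorter than the unaccelerated one, an effective speed-up of roughly 2.6 rather than 3.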

These 25 MRI examinations were independently reviewed by three fellowship-trained musculoskeletal radiologists (1–16 years of experience) who were blinded to acquisition and reconstruction parameters. Cases were reviewed in two sessions separated by 2 weeks to minimize recall bias. Each examination was assessed for the presence or absence of meniscal tears, ligament tears, bone marrow signal abnormalities, and articular cartilage defects. In addition, all images were subjectively graded for sharpness, signal-to-noise ratio, presence of aliasing artifacts, and overall image quality on a 4-point ordinal scale. Protocol interchangeability [5] was tested with logistic regression for correlated data by comparing the concordance rate of a pair of readers when both use the conventional protocol with the concordance rate when the same two readers use different protocols. The interchangeability hypothesis is that concordance between two readers is not affected if exactly one of them reads the new sequence instead of the conventional one. Image quality scores, averaged over the three readers, were compared between protocols using the Wilcoxon signed-rank test.
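The two concordance rates compared in the interchangeability analysis can be computed as follows. This is a minimal sketch for a single binary finding; the data layout and the function name `concordance_rates` are hypothetical, and it computes only the point estimates P0 and P1. The confidence interval in the study came from logistic regression for correlated data [5], which accounts for repeated reads of the same patients and is not reproduced here.

```python
from itertools import combinations


def concordance_rates(reads):
    """Compute P0 and P1 for binary reads of one finding.

    reads[protocol][reader] is a list of booleans (finding present/absent),
    one per patient; protocol is "conventional" or "deep_learning".
    P0: both readers of a pair use the conventional protocol.
    P1: one reader uses the conventional protocol and the other the deep
        learning protocol; both assignments are counted for every pair.
    """
    conv, dl = reads["conventional"], reads["deep_learning"]
    readers = sorted(conv)
    n_patients = len(conv[readers[0]])

    agree0 = total0 = agree1 = total1 = 0
    for a, b in combinations(readers, 2):
        for p in range(n_patients):
            agree0 += (conv[a][p] == conv[b][p])
            total0 += 1
            # Each reader of the pair takes a turn on the conventional protocol.
            for x, y in ((conv[a][p], dl[b][p]), (dl[a][p], conv[b][p])):
                agree1 += (x == y)
                total1 += 1
    return agree0 / total0, agree1 / total1


# Hypothetical toy reads: three readers, four patients.
reads = {
    "conventional": {"R1": [True, False, True, False],
                     "R2": [True, False, False, False],
                     "R3": [True, True, True, False]},
    "deep_learning": {"R1": [True, False, True, False],
                      "R2": [True, False, True, False],
                      "R3": [True, True, True, False]},
}
p0, p1 = concordance_rates(reads)
print(f"P0={p0:.3f}  P1={p1:.3f}  P0-P1={p0 - p1:+.3f}")
# -> P0=0.667  P1=0.750  P0-P1=-0.083
```

With three readers and 25 patients, the loops yield 75 evaluations for P0 and 150 for P1, matching the counts given for Figure 2.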

Results and Discussion

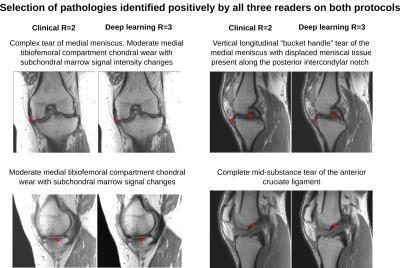

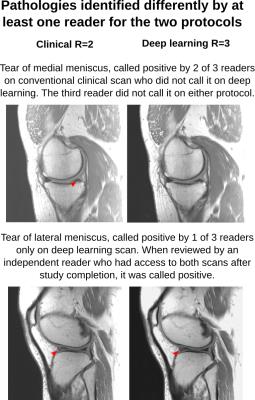

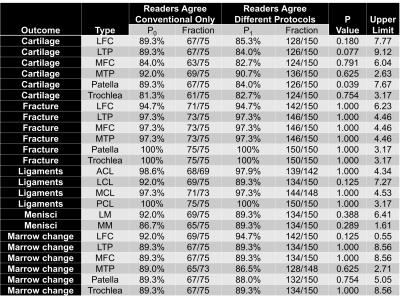

Analysis of protocol interchangeability (Figure 2) shows that the average reader agreement across all anatomical areas when both readers used the conventional protocol was almost identical to the average agreement when the two readers used different protocols (92.36% vs 91.51%). The upper limit of the 95% confidence interval for the difference in concordance rates is below 10% for all evaluated areas, implying that interchanging sequences would reduce reader concordance by less than 10%. Comparison of image quality (Figure 3) showed a significant increase in both residual artifacts and signal-to-noise ratio in the deep learning images, but no significant differences between the two protocols in image sharpness or overall image quality. Figure 4 shows a selection of cases in which pathology was called positive with both the standard and the deep learning protocol. Figure 5 shows cases in which pathology was called positive on only one of the two scans by at least one reader.

Conclusion

The results of our study show excellent concordance in the identification of internal derangement between the current clinical gold standard and our accelerated deep learning based reconstruction. Ongoing work includes adding fat-saturated and T2-weighted sequences, thereby extending our accelerated deep learning approach to a full clinical knee protocol. If substantiated in larger clinical studies, deep learning reconstruction could substantially shorten exam times, potentially broadening the indications for and utilization of knee MRI while decreasing its cost.

Acknowledgements

NIH P41 EB017183, NIH R01 EB00047, FWF START project BIVISION Y729, NVIDIA Corporation.

References

[1] LeCun et al., Nature 521: 436–444 (2015).

[2] Krizhevsky et al., Advances in Neural Information Processing Systems: 1097–1105 (2012).

[3] Silver et al., Nature 529: 484–489 (2016).

[4] Hammernik et al., Proc. ISMRM, p. 1088 (2016).

[5] Obuchowski et al., Academic Radiology 21: 1483–1489 (2014).

Figures

Figure 1: a) Schematic of our deep learning network for MRI reconstruction. The raw data f are passed through T=20 stages. A is the forward operator that maps the reconstructed image u to the raw data f. Filter kernels k and activation functions ρ' are trained to separate image content from aliasing artifacts.

b) Study design: 10 unaccelerated cases were acquired for training. The trained network was used to reconstruct data from 25 R=3 accelerated acquisitions. Images acquired with our clinical protocol served as the reference. The goal of the study was to compare the diagnostic outcome of the deep learning reconstruction with that of the clinical gold standard.
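Figure 1a names the ingredients of each stage but not the update rule itself. In the variational network formulation of Hammernik et al. [4], each stage is commonly written as one learned gradient step. Assuming the network in Figure 1a follows that formulation (the abstract does not give the equation, and the symbols N_k, K_i^t and λ^t are introduced here for illustration), stage t would read:

$$
u_{t+1} = u_t - \sum_{i=1}^{N_k} \left(K_i^{t}\right)^{\top} \rho_i^{t\,\prime}\!\left(K_i^{t} u_t\right) - \lambda^{t} A^{*}\!\left(A u_t - f\right), \qquad t = 0, \dots, T-1,
$$

where $K_i^{t} u$ denotes convolution of the image with the trained filter kernel $k_i^{t}$, $(K_i^{t})^{\top}$ the corresponding transposed convolution, $\rho_i^{t\,\prime}$ the trained activation functions, $A^{*}$ the adjoint of $A$, and $\lambda^{t}$ a trained weight on the data-consistency term. The sum suppresses structures that the trained filters respond to as artifacts, while the last term pulls the estimate back toward agreement with the measured raw data f. With T=20, the reconstruction is $u_T$, typically started from the zero-filled image $u_0 = A^{*} f$.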

Figure 2: Percentage and fraction of concordance between two readers when both evaluate the conventional clinical protocol (P0: 25 patients, 3 reader pairs, 75 evaluations) and when the same pair evaluates the two different protocols (P1: 150 evaluations, i.e., 75 for each of the two assignments in which one reader of the pair uses the conventional sequence and the other the deep learning sequence). Logistic regression for correlated data was used to test the difference P0 - P1, which represents the reduction in concordance caused by interchanging sequences, and to provide a 95% confidence interval for it.

Averaged conventional protocol agreement (92.36%) was almost identical to the agreement for different protocols (91.51%). The upper limit of the 95% confidence interval was below 10% for each area, implying that interchanging sequences would reduce reader concordance by less than 10%.

Figure 3: Mean, standard deviation (SD), median and interquartile range (IQR) of the image quality scores averaged over readers, for residual artifacts (1=none, 4=severe), loss of image sharpness/blurring (1=none, 4=severe), signal-to-noise ratio (1=poor, 4=excellent) and overall image quality (1=poor, 4=excellent). P-values are from the Wilcoxon signed-rank test comparing the two sequences.

Results show a significant increase in both residual artifacts and signal-to-noise ratio in the deep learning images, but no significant differences between the two protocols in image sharpness or overall image quality.
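The paired Wilcoxon signed-rank comparison behind the P-values in Figure 3 can be sketched in plain Python. This is an illustrative implementation, not the authors' analysis code: it expects, for each patient, the score averaged over the three readers under each protocol, and it uses a simple normal approximation without tie or continuity corrections, so its p-values will differ somewhat from those of statistical packages (for example SciPy's `scipy.stats.wilcoxon`) on small or heavily tied samples. The function name is hypothetical.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test for paired scores.

    Zero differences are dropped; tied |differences| get average ranks.
    The p-value uses the plain normal approximation (no tie or continuity
    correction), so it is only a rough guide for small samples.
    Returns (W+, W-, p).
    """
    d = [a - b for a, b in zip(x, y) if a != b]
    n = len(d)
    if n == 0:
        return 0.0, 0.0, 1.0
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Extend j over a run of tied absolute differences.
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    w_minus = sum(r for r, di in zip(ranks, d) if di < 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return w_plus, w_minus, p


# Hypothetical paired scores for five patients (deep learning vs. conventional).
print(wilcoxon_signed_rank([3, 4, 2, 5, 4], [2, 2, 2, 3, 3]))
```

Pairing by patient is the key design choice: each patient serves as their own control, so the test looks only at within-patient score differences between the two protocols.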