0033

Hybrid MRI-ultrasound acquisitions, and scannerless real-time imaging

1Department of Radiology, Brigham and Women's Hospital, Harvard Medical School, Boston, MA, United States, 2The Laboratory for Imagery, Vision and Artificial Intelligence, École de Technologie Supérieure, Montréal, QC, Canada, 3Department of Physics, Soochow University, Taipei, Taiwan, 4Google Inc, New York, NY, United States

Synopsis

The goal of this project was to combine MRI, ultrasound (US) and computer science methodologies to generate MR images at high frame rates, inside and even outside the bore. A small US transducer, fixed to the abdomen, collected signals during MRI. Based on these signals and their correlations with MRI, a machine-learning algorithm created synthetic MR images at up to 100 frames per second. In one particular implementation, volunteers were taken out of the MRI bore with the US sensor still in place, and MR images were generated on the basis of the ultrasound signal and learned correlations alone, in a 'scannerless' manner.

Introduction

In an era of ever increasing computational power, dataset sizes and detector types, machine learning often allows traditional problems to be tackled in new and possibly better ways. Since the inception of MRI, acquisition speed and frame rate have typically been considered among its weaknesses, especially compared to ultrasound (US) imaging. The purpose of the present work was to explore the benefits of combining MRI and ultrasound signals through machine-learning algorithms. In contrast to previous work on hybrid US and MRI1-3, our work employs a single-element US transducer and integrates US into the MRI reconstruction directly4,5.

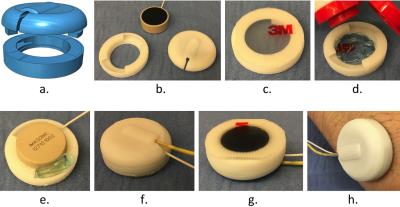

We developed a hybrid-imaging setup that includes a 3D printed capsule and an MR-compatible ultrasound transducer (Fig. 1a,b). The capsule, transducer, US gel and two-way tape are assembled and affixed to the skin as shown in Fig. 1c-h. While Fig. 1 shows the latest version of our setup, results shown here were actually obtained with our previous version, which was similar in spirit but not quite as elegant in pictures, having been carved in parts from a kid’s flip-flops. We named the resulting US assembly an ‘organ-configuration motion’ (OCM) sensor.

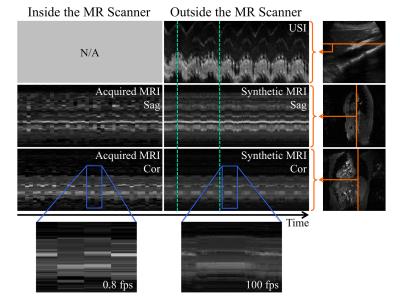

The combination of MRI and US was motivated by their highly complementary strengths: image contrast, speed, patient access, simplicity and cost. Our Bayesian algorithm allowed for two different modes of operation. In the first mode, once the algorithm has learned sufficiently (~1 to 2 min), it can predict many intermediate images between the actually acquired ones, boosting temporal resolution by up to two orders of magnitude. This mode might be useful for monitoring ablation in moving organs, for example, to provide rapid updates on target location. In the second mode of operation, with the subject outside the MRI scanner, the fully trained algorithm continues predicting MR images based on OCM signals alone. Possible applications to image-guided therapies and/or to multi-modality image registration are being evaluated.

Methods

Two hybrid MRI-OCM acquisitions were performed on each of 8 subjects (16 acquisitions). A pulser-receiver (5072PR, Olympus) synced with the MRI scanner fired the OCM sensor once per TR. Data were recorded using a digitizer card (NI5122, National Instruments). MRI was performed on either a 3T GE Signa or a Siemens Verio (TR 10-18 ms, flip angle = 30°, matrix size 192x192, FOV 38x38 cm², ~0.6 s/image). Volunteers were requested to breathe normally, with occasional (and intentional) coughing and/or gasping to challenge the algorithm. For each TR increment, the algorithm computed a synthetic MR image $$$I_t$$$ through Kernel Density Estimation6,7, using the following weighted sum over all previously acquired MR images $$$I_i$$$:

$$E[I_t | U_t,D] \approx \frac{\sum_i I_i N(U_t;U_i,\Sigma)}{\sum_i N(U_t;U_i,\Sigma)}, \qquad (1) $$

where $$$U_t$$$ is the latest OCM signal, $$$D = \{I_T, U_T\}$$$ the collection of all $$$N_T$$$ MR images acquired up to time $$$t$$$ and their time-matched OCM data, and $$$N(\cdot)$$$ is a Gaussian kernel with covariance matrix $$$\Sigma$$$. The quality of this estimation increases over time as the algorithm keeps learning and $$$N_T$$$ grows.
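
The weighted sum in Eq. 1 can be illustrated with a minimal Python sketch. It is not the released implementation: the function name `synthesize_image`, the flat-list image representation and the isotropic covariance $$$\Sigma = \sigma^2 \mathbf{I}$$$ are assumptions made here for illustration only. Because the Gaussian normalization constant appears in both the numerator and the denominator of Eq. 1, it cancels and is omitted.

```python
import math


def synthesize_image(u_t, ocm_db, image_db, sigma=1.0):
    """Kernel-weighted average of stored MR images, following Eq. 1.

    u_t      : latest OCM signal (list of floats)
    ocm_db   : stored OCM signals U_i, time-matched to image_db
    image_db : stored MR images I_i, each flattened to a list of floats
    sigma    : kernel width; Sigma = sigma^2 * I is an assumption of this sketch
    """
    if not ocm_db or len(ocm_db) != len(image_db):
        raise ValueError("database must be non-empty and time-matched")

    # Squared Euclidean distance between the latest OCM signal and each stored one.
    sq_dists = [sum((a - b) ** 2 for a, b in zip(u_t, u_i)) for u_i in ocm_db]

    # Shifting by the smallest distance rescales all weights by a common factor,
    # which cancels in the ratio of Eq. 1 and avoids numerical underflow.
    d_min = min(sq_dists)
    weights = [math.exp(-(d - d_min) / (2.0 * sigma ** 2)) for d in sq_dists]
    total = sum(weights)

    n_pixels = len(image_db[0])
    return [
        sum(w * img[p] for w, img in zip(weights, image_db)) / total
        for p in range(n_pixels)
    ]


# Toy database: two OCM signals with their time-matched three-pixel "images".
ocm_db = [[0.0, 0.0], [1.0, 1.0]]
image_db = [[0.0, 0.0, 0.0], [10.0, 10.0, 10.0]]
midway = synthesize_image([0.5, 0.5], ocm_db, image_db, sigma=1.0)
```

In this toy case the query signal is equidistant from both stored signals, so the two images receive equal weight and the estimate is their average. A smaller `sigma` makes the estimate follow the nearest stored signal more closely.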

OCM data were further acquired from 4 subjects outside the scanner room, for 'scannerless' MRI. In both modes of operation, a 'cough-detector' algorithm robustly detected rapid motion, such as that from a cough or a gasp, based on the derivative of the OCM signal. Time points labeled as coughs/gasps were excluded from the learning database, and flags were generated for downstream applications. For example, if our hybrid method were used to track the location of a lesion during ablation, the ablation process should be paused during coughs/gasps and resumed when motion activity returns to normal.
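
A derivative-based detector of this kind might be sketched as follows. The abstract does not specify the detector's details, so the change metric (mean absolute difference between consecutive OCM traces), the fixed `threshold` and the function name `detect_rapid_motion` are all hypothetical choices made for illustration.

```python
def detect_rapid_motion(ocm_traces, threshold):
    """Flag time points whose frame-to-frame OCM change exceeds a threshold.

    ocm_traces : list of OCM signals, one per TR (each a list of floats)
    threshold  : hypothetical cut-off on the mean absolute temporal difference
    """
    if not ocm_traces:
        return []

    flags = [False]  # no temporal derivative is available for the first trace
    for prev, curr in zip(ocm_traces, ocm_traces[1:]):
        # Finite-difference derivative along time, averaged over all samples.
        change = sum(abs(c - p) for p, c in zip(prev, curr)) / len(curr)
        flags.append(change > threshold)
    return flags


# Toy traces: a slow drift, one abrupt jump, then slow drift again.
traces = [[0.0, 0.0], [0.1, 0.1], [5.0, 5.0], [5.1, 5.1]]
flags = detect_rapid_motion(traces, threshold=1.0)

# Indices that would remain eligible for the learning database.
kept = [i for i, flagged in enumerate(flags) if not flagged]
```

Only the time point at the abrupt jump is flagged here. The same flags could be passed on to a downstream application, for instance to pause an ablation.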

Results

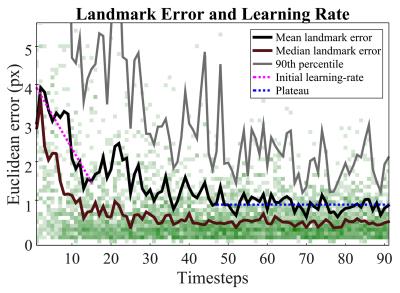

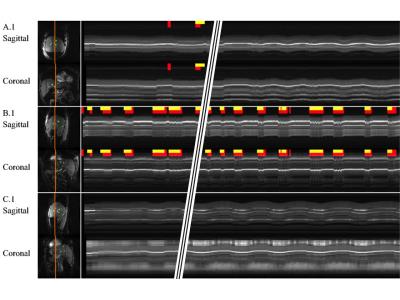

The inside-the-scanner mode was manually validated by a trained radiologist, and results are shown in Fig. 2. A mean error of 1 pixel was found, after learning had converged. Figure 3 shows results from inside the scanner in M-mode format, along with results from cough-detection. The outside-the-scanner mode was qualitatively validated using optically-tracked 2D ultrasound imaging, see Fig. 4. Good agreement of motion between MRI and ultrasound images was found.

Discussion and Conclusion

Combining MRI data with ultrasound data, through a machine-learning algorithm, led to vast improvements in temporal resolution compared to MRI alone, by up to two orders of magnitude. Synthetic images from outside the bore might pave the way for interesting image-guided therapy applications. A movie8 and source-code9 have been made available online. Future improvements include the use of several OCM sensors simultaneously (instead of a single one) and additional hardware for this purpose is currently under development. Possible applications such as cardiac gating and image fusion are also being investigated. We believe that OCM sensors might prove valuable enough as an adjunct to MRI, in various applications, to possibly become a standard feature of future MRI systems.

Acknowledgements

Financial support from grants NIH R01CA149342, P41EB015898, R21EB019500, and SNSF P2BSP2 155234 is duly acknowledged.

References

1. Günther M, Feinberg DA. Ultrasound-guided MRI: Preliminary results using a motion phantom. Magn Reson Med 2004;52(1):27-32.

2. Feinberg DA, et al. Hybrid ultrasound MRI for improved cardiac imaging and real-time respiration control. Magn Reson Med 2010;63(2):290-296.

3. Petrusca L, et al. Hybrid ultrasound/magnetic resonance simultaneous acquisition and image fusion for motion monitoring in the upper abdomen. Invest Radiol 2013;48:333-340.

4. Preiswerk F, et al. Hybrid MRI-Ultrasound acquisitions, and scannerless real-time imaging. Magn Reson Med, Oct. 2016.

5. Preiswerk F, et al. Speeding-up MR acquisitions using ultrasound signals, and scanner-less real-time MR imaging. In: ISMRM 23rd Annual Meeting, Toronto, Canada, 2015. p. 863.

6. Parzen E. On estimation of a probability density function and mode. Ann Math Statist 1962;33:1065-1076.

7. Watson GS. Smooth regression analysis. Sankhya: The Indian Journal of Statistics, Series A 1964;26:359-372.

8. A movie showing one result from inside the scanner has been made available at https://youtu.be/jIiKg09hbBE.

9. Open-source code and sample data have been made available at https://github.com/fpreiswerk/OCMDemo.

Figures